The Keras Python library makes creating deep learning models fast and easy.

The Sequential API allows you to create models layer-by-layer for most problems. It is limited in that it does not allow you to create models that share layers or have multiple inputs or outputs.

The functional API in Keras is an alternative way of creating models that offers a lot more flexibility, including creating more complex models.

In this tutorial, you will discover how to use the more flexible functional API in Keras to define deep learning models.

After completing this tutorial, you will know:

- The difference between the Sequential and Functional APIs.

- How to define simple Multilayer Perceptron, Convolutional Neural Network, and Recurrent Neural Network models using the functional API.

- How to define more complex models with shared layers and multiple inputs and outputs.

Kick-start your project with my new book Deep Learning With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update Nov/2017: Added note about hanging dimension for input layers.

- Update Nov/2018: Added missing flatten layer for CNN, thanks Konstantin.

- Update Nov/2018: Added description of the functional API Python syntax.

Tutorial Overview

This tutorial is divided into 7 parts; they are:

- Keras Sequential Models

- Keras Functional Models

- Standard Network Models

- Shared Layers Model

- Multiple Input and Output Models

- Best Practices

- NEW: Note on the Functional API Python Syntax

1. Keras Sequential Models

As a review, Keras provides a Sequential model API.

If you are new to Keras or deep learning, see this step-by-step Keras tutorial.

The Sequential model API is a way of creating deep learning models where an instance of the Sequential class is created and model layers are created and added to it.

For example, the layers can be defined and passed to the Sequential constructor as a list:

```python
from keras.models import Sequential
from keras.layers import Dense
model = Sequential([Dense(2, input_dim=1), Dense(1)])
```

Layers can also be added piecewise:

```python
from keras.models import Sequential
from keras.layers import Dense
model = Sequential()
model.add(Dense(2, input_dim=1))
model.add(Dense(1))
```

The Sequential model API is great for developing deep learning models in most situations, but it also has some limitations.

For example, it is not straightforward to define models that have multiple different input sources, produce multiple outputs, or reuse layers.

2. Keras Functional Models

The Keras functional API provides a more flexible way for defining models.

It specifically allows you to define multiple input or output models as well as models that share layers. More than that, it allows you to define ad hoc acyclic network graphs.

Models are defined by creating instances of layers and connecting them directly to each other in pairs, then defining a Model that specifies the layers to act as the input and output to the model.

Let’s look at the three key aspects of the Keras functional API in turn:

1. Defining Input

Unlike the Sequential model, you must create and define a standalone Input layer that specifies the shape of input data.

The input layer takes a shape argument that is a tuple that indicates the dimensionality of the input data.

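When input data is one-dimensional, such as for a multilayer Perceptron, the shape must still be given as a tuple. The shape describes a single sample only. Keras adds the mini-batch dimension itself, because the data is split into batches when training the network; this dimension appears as None in model summaries. Therefore, the shape tuple for one-dimensional input is defined with a hanging last dimension, (2,) for example. The trailing comma is what makes Python treat it as a one-element tuple rather than the number 2:

```python
from keras.layers import Input
visible = Input(shape=(2,))
```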
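To see why the trailing comma matters, compare the two spellings in plain Python. The `batched_shape` helper below is hypothetical and not part of Keras. It only mimics how Keras reports a layer's shape, with a leading None for the batch dimension, as in the model summaries later in this tutorial.

```python
# (2) is just the integer 2 in parentheses; (2,) is a one-element tuple.
print(type((2)))   # <class 'int'>
print(type((2,)))  # <class 'tuple'>


def batched_shape(shape):
    """Hypothetical helper: prepend the batch dimension (None = any batch size)."""
    if not isinstance(shape, tuple):
        raise TypeError(f"shape must be a tuple, got {type(shape).__name__}")
    return (None,) + shape


print(batched_shape((2,)))         # (None, 2)
print(batched_shape((64, 64, 1)))  # (None, 64, 64, 1)
```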

2. Connecting Layers

The layers in the model are connected pairwise.

This is done by specifying where the input comes from when defining each new layer. A second set of parentheses is used: after the layer is created, it is immediately called with the layer whose output should feed into it.

Let’s make this clear with a short example. We can create the input layer as above, then create a hidden layer as a Dense that receives input only from the input layer.

```python
from keras.layers import Input
from keras.layers import Dense
visible = Input(shape=(2,))
hidden = Dense(2)(visible)
```

Note the (visible) after the creation of the Dense layer. It connects the output of the input layer to the input of the dense hidden layer.

It is this way of connecting layers piece by piece that gives the functional API its flexibility. For example, you can see how easy it would be to start defining ad hoc graphs of layers.
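To make the pairwise connection idea concrete without Keras, here is a toy sketch in plain Python. `ToyTensor` and `ToyLayer` are hypothetical classes invented for illustration, not Keras internals. Constructing a layer stores its configuration. Calling the layer on a tensor returns a new tensor that remembers which layer produced it and from which inputs. Because any tensor can be passed to more than one layer, branching graphs follow naturally.

```python
class ToyTensor:
    """Hypothetical symbolic tensor: remembers the layer and inputs that produced it."""

    def __init__(self, name, layer=None, inputs=()):
        self.name = name
        self.layer = layer          # None for an input tensor
        self.inputs = list(inputs)


class ToyLayer:
    """Hypothetical layer: the constructor configures it, calling it connects it."""

    def __init__(self, name):
        self.name = name

    def __call__(self, *inputs):
        # Connecting returns a new tensor that links back to its inputs.
        return ToyTensor(self.name + "_out", layer=self, inputs=inputs)


visible = ToyTensor("visible")
hidden = ToyLayer("hidden")(visible)            # visible -> hidden
branch_a = ToyLayer("branch_a")(hidden)         # two layers share one input...
branch_b = ToyLayer("branch_b")(hidden)
merged = ToyLayer("merge")(branch_a, branch_b)  # ...and are joined again

print([t.layer.name for t in merged.inputs])  # ['branch_a', 'branch_b']
print(merged.inputs[0].inputs[0] is hidden)   # True
```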

3. Creating the Model

After creating all of your model layers and connecting them together, you must define the model.

As with the Sequential API, the model is the thing you can summarize, fit, evaluate, and use to make predictions.

Keras provides a Model class that you can use to create a model from your created layers. You only need to specify the input and output layers. For example:

```python
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
visible = Input(shape=(2,))
hidden = Dense(2)(visible)
model = Model(inputs=visible, outputs=hidden)
```
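Specifying only the inputs and outputs is enough because every output carries a link back to the layers that produced it. The toy sketch below uses hypothetical classes and a hypothetical `collect_layers` function in plain Python. It is an analogy, not how Keras is implemented. It walks backwards from the outputs and recovers every layer in an order where each layer comes after the layers that feed it.

```python
class ToyTensor:
    """Hypothetical symbolic tensor that remembers the layer and inputs that made it."""

    def __init__(self, layer=None, inputs=()):
        self.layer = layer
        self.inputs = list(inputs)


class ToyLayer:
    def __init__(self, name):
        self.name = name

    def __call__(self, *inputs):
        return ToyTensor(layer=self, inputs=inputs)


def collect_layers(inputs, outputs):
    """Depth-first walk from the outputs back to the declared inputs.

    Returns layer names in topological order (producers before consumers)."""
    ordered, seen = [], set()

    def visit(tensor):
        if tensor in inputs or id(tensor) in seen:
            return
        seen.add(id(tensor))
        for parent in tensor.inputs:
            visit(parent)
        if tensor.layer is None:
            raise ValueError("graph reaches a tensor that is not a declared input")
        ordered.append(tensor.layer.name)

    for out in outputs:
        visit(out)
    return ordered


visible = ToyTensor()
hidden = ToyLayer("hidden")(visible)
output = ToyLayer("output")(hidden)
print(collect_layers([visible], [output]))  # ['hidden', 'output']
```

Forgetting to declare an input, as in `collect_layers([], [output])`, makes the walk reach a tensor that no layer produced, so the sketch raises a ValueError.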

Now that we know all of the key pieces of the Keras functional API, let’s work through defining a suite of different models and build up some practice with it.

Each example is executable. It prints the structure of the model and creates a diagram of the graph. I recommend doing this for your own models to make it clear what exactly you have defined.

My hope is that these examples provide templates for you when you want to define your own models using the functional API in the future.

3. Standard Network Models

When getting started with the functional API, it is a good idea to see how some standard neural network models are defined.

In this section, we will look at defining a simple multilayer Perceptron, convolutional neural network, and recurrent neural network.

These examples will provide a foundation for understanding the more elaborate examples later.

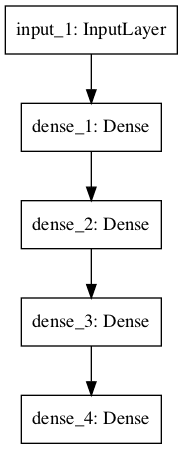

Multilayer Perceptron

In this section, we define a multilayer Perceptron model for binary classification.

The model has 10 inputs, 3 hidden layers with 10, 20, and 10 neurons, and an output layer with 1 output. Rectified linear activation functions are used in each hidden layer and a sigmoid activation function is used in the output layer, for binary classification.

```python
# Multilayer Perceptron
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
visible = Input(shape=(10,))
hidden1 = Dense(10, activation='relu')(visible)
hidden2 = Dense(20, activation='relu')(hidden1)
hidden3 = Dense(10, activation='relu')(hidden2)
output = Dense(1, activation='sigmoid')(hidden3)
model = Model(inputs=visible, outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='multilayer_perceptron_graph.png')
```

Running the example prints the structure of the network.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 10)                0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                110
_________________________________________________________________
dense_2 (Dense)              (None, 20)                220
_________________________________________________________________
dense_3 (Dense)              (None, 10)                210
_________________________________________________________________
dense_4 (Dense)              (None, 1)                 11
=================================================================
Total params: 551
Trainable params: 551
Non-trainable params: 0
_________________________________________________________________
```
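You can check the Param # column by hand. A Dense layer holds one weight per input-unit pair plus one bias per unit, so its parameter count is inputs × units + units. The plain-Python sketch below reproduces the numbers in the summary above. The `dense_params` name is ours, not a Keras function.

```python
def dense_params(n_inputs, units):
    """Weights (n_inputs * units) plus one bias per unit."""
    return n_inputs * units + units


# Layer widths of the MLP above: 10 inputs -> 10 -> 20 -> 10 -> 1
widths = [10, 10, 20, 10, 1]
per_layer = [dense_params(a, b) for a, b in zip(widths, widths[1:])]
print(per_layer)       # [110, 220, 210, 11]
print(sum(per_layer))  # 551
```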

A plot of the model graph is also created and saved to file.

Multilayer Perceptron Network Graph

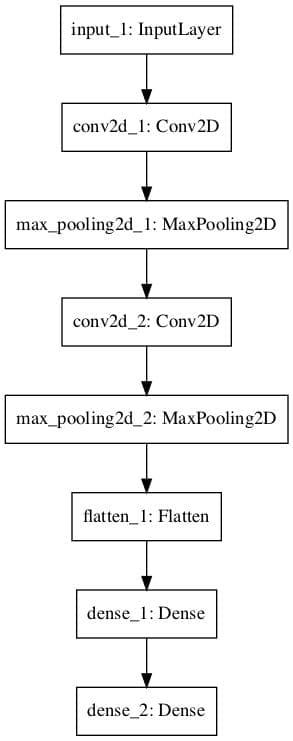

Convolutional Neural Network

In this section, we will define a convolutional neural network for image classification.

The model receives black and white 64×64 images as input, then has a sequence of two convolutional and pooling layers as feature extractors, followed by a fully connected layer to interpret the features and an output layer with a sigmoid activation for two-class predictions.

```python
# Convolutional Neural Network
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Conv2D
from keras.layers import MaxPooling2D
visible = Input(shape=(64,64,1))
conv1 = Conv2D(32, kernel_size=4, activation='relu')(visible)
pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)
conv2 = Conv2D(16, kernel_size=4, activation='relu')(pool1)
pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)
flat = Flatten()(pool2)
hidden1 = Dense(10, activation='relu')(flat)
output = Dense(1, activation='sigmoid')(hidden1)
model = Model(inputs=visible, outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='convolutional_neural_network.png')
```

Running the example summarizes the model layers.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 64, 64, 1)         0
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 61, 61, 32)        544
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 30, 30, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 27, 27, 16)        8208
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 13, 13, 16)        0
_________________________________________________________________
flatten_1 (Flatten)          (None, 2704)              0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                27050
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 11
=================================================================
Total params: 35,813
Trainable params: 35,813
Non-trainable params: 0
_________________________________________________________________
```
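The output shapes and parameter counts follow from simple arithmetic. This assumes the Keras defaults of 'valid' padding and stride 1 for Conv2D, and a pooling stride equal to the pool size for MaxPooling2D. Under those defaults:

- A k×k convolution shrinks each spatial side by k - 1.
- 2×2 pooling halves each side, rounding down.
- A convolution has k × k × input channels × filters weights plus one bias per filter.

The helper names below are hypothetical, and the sketch is plain Python.

```python
def conv2d(shape, filters, k):
    """Conv2D with 'valid' padding and stride 1 (the Keras defaults)."""
    h, w, c = shape
    params = k * k * c * filters + filters  # kernel weights + one bias per filter
    return (h - k + 1, w - k + 1, filters), params


def max_pool(shape, p=2):
    """p x p pooling with stride p: each spatial side is divided by p, rounding down."""
    h, w, c = shape
    return (h // p, w // p, c), 0


def dense(n_inputs, units):
    return units, n_inputs * units + units


shape, total = (64, 64, 1), 0
for step in (lambda s: conv2d(s, 32, 4), max_pool,
             lambda s: conv2d(s, 16, 4), max_pool):
    shape, params = step(shape)
    total += params
    print(shape, params)  # (61, 61, 32) 544, then (30, 30, 32) 0, ...

n_features = shape[0] * shape[1] * shape[2]  # Flatten: 13 * 13 * 16 = 2704, no parameters
for units in (10, 1):
    n_features, params = dense(n_features, units)
    total += params
print(total)  # 35813
```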

A plot of the model graph is also created and saved to file.

Convolutional Neural Network Graph

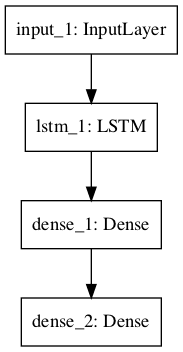

Recurrent Neural Network

In this section, we will define a long short-term memory recurrent neural network for sequence classification.

The model expects 100 time steps of one feature as input. The model has a single LSTM hidden layer to extract features from the sequence, followed by a fully connected layer to interpret the LSTM output, followed by an output layer for making binary predictions.

```python
# Recurrent Neural Network
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import LSTM
visible = Input(shape=(100,1))
hidden1 = LSTM(10)(visible)
hidden2 = Dense(10, activation='relu')(hidden1)
output = Dense(1, activation='sigmoid')(hidden2)
model = Model(inputs=visible, outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='recurrent_neural_network.png')
```

Running the example summarizes the model layers.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 100, 1)            0
_________________________________________________________________
lstm_1 (LSTM)                (None, 10)                480
_________________________________________________________________
dense_1 (Dense)              (None, 10)                110
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 11
=================================================================
Total params: 601
Trainable params: 601
Non-trainable params: 0
_________________________________________________________________
```
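The 480 parameters of the LSTM layer come from its four gates (input, forget, cell candidate and output). Each gate has input weights, recurrent weights and a bias, which gives 4 × (units × (features + units) + units). The 100 time steps do not appear in the count, because the same weights are reused at every step. The plain-Python check below uses hypothetical helper names.

```python
def lstm_params(n_features, units):
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (units * (n_features + units) + units)


def dense_params(n_inputs, units):
    return n_inputs * units + units


lstm = lstm_params(1, 10)  # 1 feature per time step -> LSTM(10)
print(lstm)                # 480
print(lstm + dense_params(10, 10) + dense_params(10, 1))  # 601
```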

A plot of the model graph is also created and saved to file.

Recurrent Neural Network Graph

4. Shared Layers Model

Multiple layers can share the output from one layer.

For example, there may be multiple different feature extraction layers from an input, or multiple layers used to interpret the output from a feature extraction layer.

Let’s look at both of these examples.

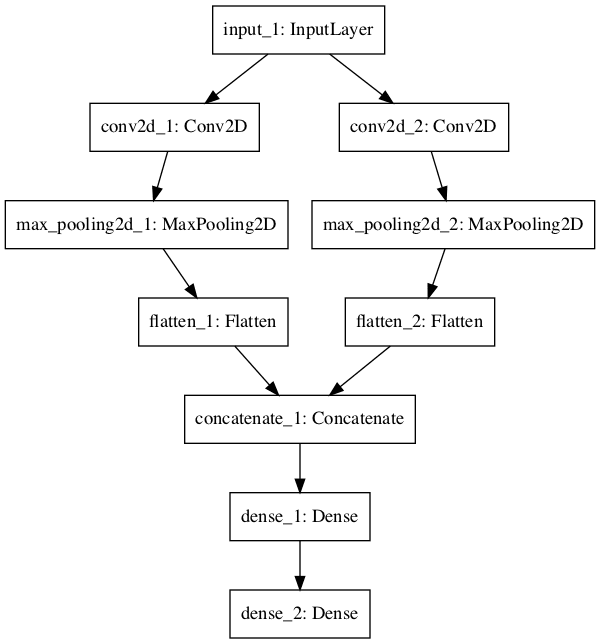

Shared Input Layer

In this section, we define multiple convolutional layers with differently sized kernels to interpret an image input.

The model takes black and white images with the size 64×64 pixels. There are two CNN feature extraction submodels that share this input; the first has a kernel size of 4 and the second a kernel size of 8. The outputs from these feature extraction submodels are flattened into vectors and concatenated into one long vector and passed on to a fully connected layer for interpretation before a final output layer makes a binary classification.

```python
# Shared Input Layer
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Conv2D
from keras.layers import MaxPooling2D
from keras.layers import concatenate
# input layer
visible = Input(shape=(64,64,1))
# first feature extractor
conv1 = Conv2D(32, kernel_size=4, activation='relu')(visible)
pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)
flat1 = Flatten()(pool1)
# second feature extractor
conv2 = Conv2D(16, kernel_size=8, activation='relu')(visible)
pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)
flat2 = Flatten()(pool2)
# merge feature extractors
merge = concatenate([flat1, flat2])
# interpretation layer
hidden1 = Dense(10, activation='relu')(merge)
# prediction output
output = Dense(1, activation='sigmoid')(hidden1)
model = Model(inputs=visible, outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='shared_input_layer.png')
```

Running the example summarizes the model layers.

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
input_1 (InputLayer)             (None, 64, 64, 1)     0
____________________________________________________________________________________________________
conv2d_1 (Conv2D)                (None, 61, 61, 32)    544         input_1[0][0]
____________________________________________________________________________________________________
conv2d_2 (Conv2D)                (None, 57, 57, 16)    1040        input_1[0][0]
____________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)   (None, 30, 30, 32)    0           conv2d_1[0][0]
____________________________________________________________________________________________________
max_pooling2d_2 (MaxPooling2D)   (None, 28, 28, 16)    0           conv2d_2[0][0]
____________________________________________________________________________________________________
flatten_1 (Flatten)              (None, 28800)         0           max_pooling2d_1[0][0]
____________________________________________________________________________________________________
flatten_2 (Flatten)              (None, 12544)         0           max_pooling2d_2[0][0]
____________________________________________________________________________________________________
concatenate_1 (Concatenate)      (None, 41344)         0           flatten_1[0][0]
                                                                   flatten_2[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 10)            413450      concatenate_1[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 1)             11          dense_1[0][0]
====================================================================================================
Total params: 415,045
Trainable params: 415,045
Non-trainable params: 0
____________________________________________________________________________________________________
```
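Concatenation joins the flattened vectors end to end, which is why the interpretation layer is so large here. The plain-Python check below reproduces the numbers in the summary. It applies the same shape rules as before ('valid' convolutions and 2×2 pooling that rounds down), and `branch_length` is a hypothetical helper.

```python
def branch_length(size, k, filters, pool=2):
    """Flattened length of one Conv2D('valid', stride 1) + MaxPooling2D branch."""
    side = (size - k + 1) // pool
    return side * side * filters


flat1 = branch_length(64, k=4, filters=32)  # 30 * 30 * 32
flat2 = branch_length(64, k=8, filters=16)  # 28 * 28 * 16
merged = flat1 + flat2
print(flat1, flat2, merged)  # 28800 12544 41344
print(merged * 10 + 10)      # 413450 weights and biases in Dense(10)
```

That single Dense layer holds over 99% of the model's 415,045 parameters. This is a common side effect of flattening large feature maps.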

A plot of the model graph is also created and saved to file.

Neural Network Graph With Shared Inputs

Shared Feature Extraction Layer

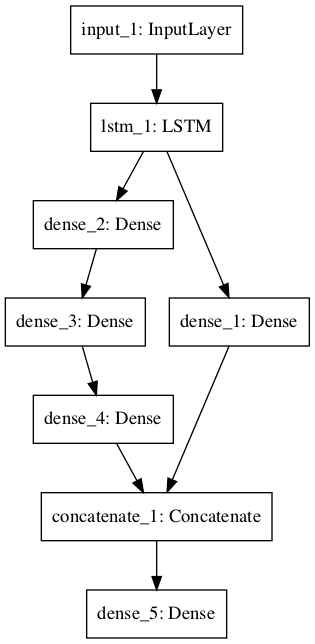

In this section, we will use two parallel submodels to interpret the output of an LSTM feature extractor for sequence classification.

The input to the model is 100 time steps of 1 feature. An LSTM layer with 10 memory cells interprets this sequence. The first interpretation model is a shallow single fully connected layer; the second is a deeper 3-layer model. The outputs of both interpretation models are concatenated into one long vector that is passed to the output layer used to make a binary prediction.

```python
# Shared Feature Extraction Layer
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import LSTM
from keras.layers import concatenate
# define input
visible = Input(shape=(100,1))
# feature extraction
extract1 = LSTM(10)(visible)
# first interpretation model
interp1 = Dense(10, activation='relu')(extract1)
# second interpretation model
interp11 = Dense(10, activation='relu')(extract1)
interp12 = Dense(20, activation='relu')(interp11)
interp13 = Dense(10, activation='relu')(interp12)
# merge interpretation
merge = concatenate([interp1, interp13])
# output
output = Dense(1, activation='sigmoid')(merge)
model = Model(inputs=visible, outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='shared_feature_extractor.png')
```

Running the example summarizes the model layers.

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
input_1 (InputLayer)             (None, 100, 1)        0
____________________________________________________________________________________________________
lstm_1 (LSTM)                    (None, 10)            480         input_1[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 10)            110         lstm_1[0][0]
____________________________________________________________________________________________________
dense_3 (Dense)                  (None, 20)            220         dense_2[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 10)            110         lstm_1[0][0]
____________________________________________________________________________________________________
dense_4 (Dense)                  (None, 10)            210         dense_3[0][0]
____________________________________________________________________________________________________
concatenate_1 (Concatenate)      (None, 20)            0           dense_1[0][0]
                                                                   dense_4[0][0]
____________________________________________________________________________________________________
dense_5 (Dense)                  (None, 1)             21          concatenate_1[0][0]
====================================================================================================
Total params: 1,151
Trainable params: 1,151
Non-trainable params: 0
____________________________________________________________________________________________________
```

A plot of the model graph is also created and saved to file.

Neural Network Graph With Shared Feature Extraction Layer

5. Multiple Input and Output Models

The functional API can also be used to develop more complex models with multiple inputs, possibly with different modalities. It can also be used to develop models that produce multiple outputs.

We will look at examples of each in this section.

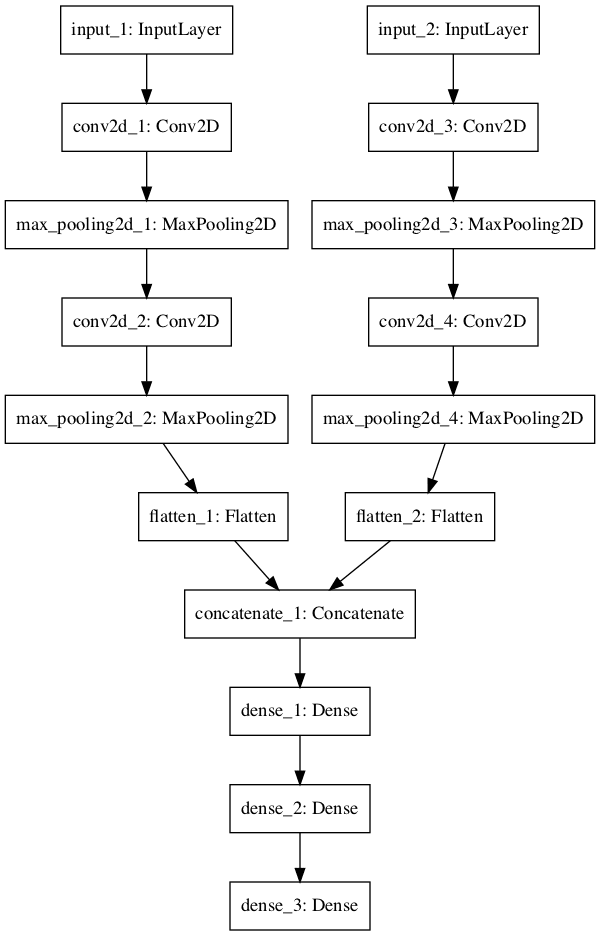

Multiple Input Model

We will develop an image classification model that takes two versions of the image as input, each of a different size. Specifically, a black and white 64×64 version and a color 32×32 version. Separate feature extraction CNN models operate on each, then the results from both models are concatenated for interpretation and ultimate prediction.

Note that when creating the Model() instance, we define the two input layers as a list. Specifically:

```python
model = Model(inputs=[visible1, visible2], outputs=output)
```

The complete example is listed below.

```python
# Multiple Inputs
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Conv2D
from keras.layers import MaxPooling2D
from keras.layers import concatenate
# first input model
visible1 = Input(shape=(64,64,1))
conv11 = Conv2D(32, kernel_size=4, activation='relu')(visible1)
pool11 = MaxPooling2D(pool_size=(2, 2))(conv11)
conv12 = Conv2D(16, kernel_size=4, activation='relu')(pool11)
pool12 = MaxPooling2D(pool_size=(2, 2))(conv12)
flat1 = Flatten()(pool12)
# second input model
visible2 = Input(shape=(32,32,3))
conv21 = Conv2D(32, kernel_size=4, activation='relu')(visible2)
pool21 = MaxPooling2D(pool_size=(2, 2))(conv21)
conv22 = Conv2D(16, kernel_size=4, activation='relu')(pool21)
pool22 = MaxPooling2D(pool_size=(2, 2))(conv22)
flat2 = Flatten()(pool22)
# merge input models
merge = concatenate([flat1, flat2])
# interpretation model
hidden1 = Dense(10, activation='relu')(merge)
hidden2 = Dense(10, activation='relu')(hidden1)
output = Dense(1, activation='sigmoid')(hidden2)
model = Model(inputs=[visible1, visible2], outputs=output)
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='multiple_inputs.png')
```

Running the example summarizes the model layers.

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
input_1 (InputLayer)             (None, 64, 64, 1)     0
____________________________________________________________________________________________________
input_2 (InputLayer)             (None, 32, 32, 3)     0
____________________________________________________________________________________________________
conv2d_1 (Conv2D)                (None, 61, 61, 32)    544         input_1[0][0]
____________________________________________________________________________________________________
conv2d_3 (Conv2D)                (None, 29, 29, 32)    1568        input_2[0][0]
____________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)   (None, 30, 30, 32)    0           conv2d_1[0][0]
____________________________________________________________________________________________________
max_pooling2d_3 (MaxPooling2D)   (None, 14, 14, 32)    0           conv2d_3[0][0]
____________________________________________________________________________________________________
conv2d_2 (Conv2D)                (None, 27, 27, 16)    8208        max_pooling2d_1[0][0]
____________________________________________________________________________________________________
conv2d_4 (Conv2D)                (None, 11, 11, 16)    8208        max_pooling2d_3[0][0]
____________________________________________________________________________________________________
max_pooling2d_2 (MaxPooling2D)   (None, 13, 13, 16)    0           conv2d_2[0][0]
____________________________________________________________________________________________________
max_pooling2d_4 (MaxPooling2D)   (None, 5, 5, 16)      0           conv2d_4[0][0]
____________________________________________________________________________________________________
flatten_1 (Flatten)              (None, 2704)          0           max_pooling2d_2[0][0]
____________________________________________________________________________________________________
flatten_2 (Flatten)              (None, 400)           0           max_pooling2d_4[0][0]
____________________________________________________________________________________________________
concatenate_1 (Concatenate)      (None, 3104)          0           flatten_1[0][0]
                                                                   flatten_2[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 10)            31050       concatenate_1[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 10)            110         dense_1[0][0]
____________________________________________________________________________________________________
dense_3 (Dense)                  (None, 1)             11          dense_2[0][0]
====================================================================================================
Total params: 49,699
Trainable params: 49,699
Non-trainable params: 0
____________________________________________________________________________________________________
```
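The two branches differ only in their input. The color image has three channels, so the first convolution in the second branch needs 4 × 4 × 3 weights per filter. The plain-Python sketch below traces both branches with the same rules as before and checks the model total. The `trace_branch` name is hypothetical.

```python
def trace_branch(size, channels):
    """Conv2D(32, 4) -> pool -> Conv2D(16, 4) -> pool -> Flatten on a square image.

    Returns (flattened length, parameter count) for one input branch."""
    params = 0
    for filters in (32, 16):
        params += 4 * 4 * channels * filters + filters  # kernel weights + biases
        size = (size - 4 + 1) // 2  # 'valid' 4x4 convolution, then 2x2 pooling
        channels = filters
    return size * size * channels, params


flat1, p1 = trace_branch(64, 1)  # black and white 64x64 input
flat2, p2 = trace_branch(32, 3)  # color 32x32 input
merged = flat1 + flat2
head = (merged * 10 + 10) + (10 * 10 + 10) + (10 * 1 + 1)  # the three Dense layers
print(flat1, p1)       # 2704 8752
print(flat2, p2)       # 400 9776
print(merged)          # 3104
print(p1 + p2 + head)  # 49699
```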

A plot of the model graph is also created and saved to file.

Neural Network Graph With Multiple Inputs

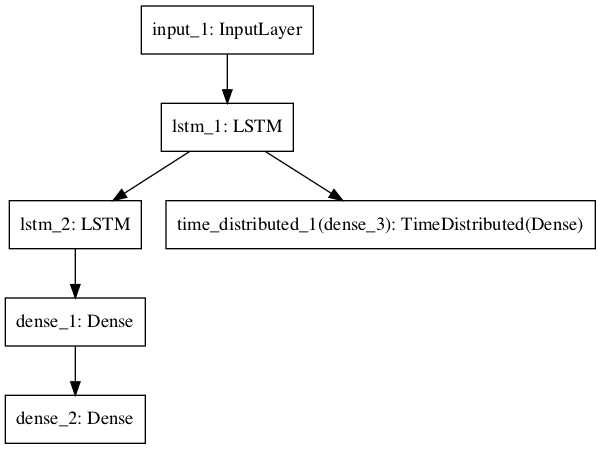

Multiple Output Model

In this section, we will develop a model that makes two different types of predictions. Given an input sequence of 100 time steps of one feature, the model will both classify the sequence and output a new sequence with the same length.

An LSTM layer interprets the input sequence and returns the hidden state for each time step. The first output model creates a stacked LSTM, interprets the features, and makes a binary prediction. The second output model takes the per-time-step output of the same LSTM feature extraction layer and uses a TimeDistributed Dense layer to make a real-valued prediction for each input time step.

```python
# Multiple Outputs
from keras.utils import plot_model
from keras.models import Model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import LSTM
from keras.layers import TimeDistributed
# input layer
visible = Input(shape=(100,1))
# feature extraction
extract = LSTM(10, return_sequences=True)(visible)
# classification output
class11 = LSTM(10)(extract)
class12 = Dense(10, activation='relu')(class11)
output1 = Dense(1, activation='sigmoid')(class12)
# sequence output
output2 = TimeDistributed(Dense(1, activation='linear'))(extract)
# output
model = Model(inputs=visible, outputs=[output1, output2])
# summarize layers
model.summary()
# plot graph (requires pydot and graphviz)
plot_model(model, to_file='multiple_outputs.png')
```

Running the example summarizes the model layers.

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
input_1 (InputLayer)             (None, 100, 1)        0
____________________________________________________________________________________________________
lstm_1 (LSTM)                    (None, 100, 10)       480         input_1[0][0]
____________________________________________________________________________________________________
lstm_2 (LSTM)                    (None, 10)            840         lstm_1[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 10)            110         lstm_2[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 1)             11          dense_1[0][0]
____________________________________________________________________________________________________
time_distributed_1 (TimeDistribu (None, 100, 1)        11          lstm_1[0][0]
====================================================================================================
Total params: 1,452
Trainable params: 1,452
Non-trainable params: 0
____________________________________________________________________________________________________
```
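TimeDistributed applies one and the same Dense layer to every time step. That is why time_distributed_1 has only 11 parameters (10 weights and 1 bias) even though it produces 100 outputs per sample. The plain-Python sketch below mimics that behavior with a hypothetical `time_distributed` function and made-up weights. It is an illustration of the idea, not Keras' implementation.

```python
def dense_linear(weights, bias):
    """Return a function computing one linear Dense unit: w . x + b."""
    def apply(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return apply


def time_distributed(layer, sequence):
    """Apply the same layer (same weights) independently to each time step."""
    return [layer(step) for step in sequence]


weights, bias = [0.5] * 10, 1.0  # made-up values: 10 weights + 1 bias = 11 parameters
sequence = [[float(t)] * 10 for t in range(100)]  # 100 steps x 10 LSTM features
outputs = time_distributed(dense_linear(weights, bias), sequence)
print(len(outputs))  # 100, one prediction per time step
print(outputs[:3])   # [1.0, 6.0, 11.0]
```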

A plot of the model graph is also created and saved to file.

Neural Network Graph With Multiple Outputs

6. Best Practices

In this section, I want to give you some tips to get the most out of the functional API when you are defining your own models.

- Consistent Variable Names. Use the same variable names for the input (visible) and output (output) layers across your models, and perhaps even for the hidden layers (hidden1, hidden2). This makes it easier to connect things together correctly.

- Review Layer Summary. Always print the model summary and review the layer outputs to ensure that the model was connected together as you expected.

- Review Graph Plots. Always create a plot of the model graph and review it to ensure that everything was put together as you intended.

- Name the layers. You can assign names to layers that are used when reviewing summaries and plots of the model graph. For example: Dense(1, name='hidden1').

- Separate Submodels. Consider separating out the development of submodels and combine the submodels together at the end.

Do you have your own best practice tips when using the functional API?

Let me know in the comments.

7. Note on the Functional API Python Syntax

If you are new or new-ish to Python, the syntax used in the functional API may be confusing.

For example, given:

```python
...
dense1 = Dense(32)(input)
...
```

What does the double parentheses syntax do?

What does it mean?

It looks confusing, but it is not special Python syntax, just one line doing two things.

The first set of parentheses, “(32)”, creates the layer via the class constructor. The second set, “(input)”, calls the newly created layer object as if it were a function. Python routes that call to the layer's __call__() method, which Keras implements to connect the layer to its input and return the layer's output.

The __call__() function is a special method that any Python class can define so that its instances can be “called” like functions. It is similar to the __init__() function, the special method Python calls just after creating an object to initialize it.

We can do the same thing in two lines:

```python
# create layer
layer = Dense(32)
# connect layer to previous layer; the call returns the layer's output
dense1 = layer(input)
```

We could also call the __call__() function on the object explicitly. It does the same thing, because Python translates calling an object into a call to its __call__() method:

```python
# create layer
layer = Dense(32)
# connect layer to previous layer by invoking __call__() explicitly
dense1 = layer.__call__(input)
```
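Here is the same mechanism in plain Python, with no Keras involved. `Scale` is a hypothetical class: its constructor stores a setting, and because it defines __call__(), its instances can be called like functions. The call can be written in one line, in two lines, or spelled out explicitly.

```python
class Scale:
    """Hypothetical 'layer': configured in __init__, applied in __call__."""

    def __init__(self, factor):
        self.factor = factor  # first parentheses: Scale(3)

    def __call__(self, x):
        return x * self.factor  # second parentheses: (...)(2)


one_line = Scale(3)(2)        # construct and call in one expression
layer = Scale(3)              # construct...
two_lines = layer(2)          # ...then call
explicit = layer.__call__(2)  # the same call, spelled out
print(one_line, two_lines, explicit)  # 6 6 6
```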

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- The Sequential model API

- Getting started with the Keras Sequential model

- Getting started with the Keras functional API

- Model class Functional API

Summary

In this tutorial, you discovered how to use the functional API in Keras for defining simple and complex deep learning models.

Specifically, you learned:

- The difference between the Sequential and Functional APIs.

- How to define simple Multilayer Perceptron, Convolutional Neural Network, and Recurrent Neural Network models using the functional API.

- How to define more complex models with shared layers and multiple inputs and outputs.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thank you

I have been waiting for this tutorial

Thanks, I hope it helps!

I’m using a keras API and I’m using the shared layers with 2 inputs and one output and have a problem with the fit model

model.fit([train_images1, train_images2],

batch_size=batch_size,

epochs=epochs,

validation_data=([test_images1, test_images2]))

I get this error:

ValueError: Error when checking model input: the list of Numpy arrays that you are passing to your model is not the size the model expected. Expected to see 2 array(s), but instead got the following list of 1 arrays: [array([[[[0.2 , 0.5019608 , 0.8862745 , …, 0.5686275 ,

0.5137255 , 0.59607846],

[0.24705882, 0.3529412 , 0.31764707, …, 0.5803922 ,

0.57254905, 0.6 ],

Perhaps double check the data has the shape expected by each model head.

Could you fix it?

You haven’t provided the outputs in the fit() call; that’s why it’s asking for 2 NumPy arrays.
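A likely culprit in the fit() call above is that no target array is passed, and that `validation_data=([test_images1, test_images2])` is only a list wrapped in parentheses, not an `(inputs, targets)` tuple. The sketch below uses NumPy alone to show how the arguments would be packaged for a two-input, one-output model; the array shapes, the label arrays, and the commented-out `fit()` call are assumptions for illustration.

```python
import numpy as np

# Hypothetical data: 100 training and 20 test samples, two 28x28x1 image inputs, one label each.
train_images1 = np.zeros((100, 28, 28, 1))
train_images2 = np.zeros((100, 28, 28, 1))
train_labels = np.zeros((100, 1))
test_images1 = np.zeros((20, 28, 28, 1))
test_images2 = np.zeros((20, 28, 28, 1))
test_labels = np.zeros((20, 1))

# Parentheses around a single list do NOT make a tuple: this is still a plain list.
wrong_validation = ([test_images1, test_images2])

# validation_data should be an (inputs, targets) tuple; inputs is a list with one array per model input.
validation = ([test_images1, test_images2], test_labels)

# With a compiled two-input model, the call would then look like:
# model.fit([train_images1, train_images2], train_labels,
#           batch_size=32, epochs=10, validation_data=validation)

print(type(wrong_validation).__name__, type(validation).__name__)  # list tuple
```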

Fathi, could you fix it?

You’re awesome Jason!

I just can’t wait to see more from you on this wonderful blog. Where did you hide all of this? 😉

Can you please write a book where you implement more of the functional API?

I’m sure the book will be a real success

Best regards

Thabet

I agree with Thabet Ali; we need a book on the advanced parts of the functional API.

What aspects of the functional API do you need more help with Tom?

Thanks!

What problems are you having with the functional API?

I would like to know more about how to implement autoencoders on multi-input time series signals with a single categorical classification output, using the functional API.

Thanks.

LSTMs, for example, can take multiple time series directly and don’t require parallel input models.

Why do you want to use autoencoders for time series?

Yes, I think it’s because the output sequence is shorter than the input sequence.

An example is this dataset:

https://archive.ics.uci.edu/ml/datasets/human+activity+recognition+using+smartphones

It has three time series inputs and 5 different classes as output.

Hi Jason, are you asking for a wish list? 🙂

– Autoencoders / Anomaly detection with Keras’s API

Thanks!

Thanks for the suggestion!

Yes, totally agree.

Jason, thank you for a very interesting blog. Beautiful article which can open new doors.

Thanks Alexander.

Dr. Brownlee,

How do you study the mathematics behind so many algorithms that you implement?

regards

Leon

I read a lot 🙂

Can I consider the initial_state of an LSTM layer as an input branch of the architecture? Say that for each sample I have a sequence s1, s2, s3 and a context feature X. I define an LSTM with 128 units. For each batch, I want to map X to a 128-dimensional feature through a Dense(128) layer and use it as the initial_state for that batch, while the sequence s1, s2, s3 is fed to the LSTM as the input sequence.

Sorry Alex, I’m not sure I follow. If you have some ideas, perhaps design an experiment to test them?

Thank you for the awesome tutorial.

For anyone who wants to visualize the model directly in a Jupyter notebook, use the following lines:

from IPython.display import SVG

from keras.utils.vis_utils import model_to_dot

SVG(model_to_dot(model).create(prog='dot', format='svg'))

Awesome, thanks for the tip!

Thanks for this blog. It is really helpful and well explained.

Thanks Luis, I’m glad to hear that. I appreciate your support!

Hi Jason – I am wondering if the code should be:

visible = Input(image_file, shape=( height, width, color channels))

If not, I wonder how the code references the image in question…

Surprised no one else asked this…

YOU ROCK JASON!

In section 1 you write that “the shape tuple is always defined with a hanging last dimension”, but when you define the convolutional network, you define it as such:

visible = Input(shape=(64,64,1))

without any hanging last dimension. Am I missing something here?

Yes, I was incorrect. This is only the case for 1D input. I have updated the post, thanks.

You are still incorrect. There is no “hanging last dimension”, even in the case of 1D input. It’s a “trailing comma”, which Python requires to distinguish a one-element tuple from an expression in parentheses.
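The trailing-comma point is easy to check in plain Python; this is standard tuple syntax, nothing specific to Keras:

```python
# Parentheses alone only group an expression; the comma is what makes a tuple.
not_a_tuple = (2)
one_tuple = (2,)
image_shape = (64, 64, 1)  # multi-element tuples need no trailing comma
empty = ()                 # the empty tuple needs no comma either

print(type(not_a_tuple).__name__)  # int
print(type(one_tuple).__name__)    # tuple
print(len(one_tuple), len(image_shape), len(empty))  # 1 3 0
```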

Thanks for your note.

Jason, I’ve been reading about Keras and AI for some time and I always find your articles clearer and more straightforward than all the other stuff on the net 🙂

Thanks!

Thanks Franek.

Jason, for some reason I need to know the output tensors of the layers in my model. I’ve tried experimenting with layer.get_output, get_output_at, etc. following the Keras documentation, but I always fail to get anything sensible.

I tried to look for this subject on your blog, but I couldn’t find anything. Are you planning to write a post on this? 🙂 That would really help!

Sorry, I don’t have good advice for getting the output tensor. Perhaps post to the keras google group or slack channel?

See here:

https://machinelearningmastery.com/get-help-with-keras/

Hi Jason, yet another great post.

Using one of your past posts, I created an LSTM that uses multiple time series to predict several steps ahead of a specific time series. Currently I have this structure:

where:

– x_tr has (440, 7, 6) dimensions (440 samples, 7 past time steps, 6 variables/time series)

– y_tr has (440, 21) dimensions, that is 440 samples and 21 ahead predicted values.

Now, I’d like to extend my network so that it predicts the (multi-step ahead) values of two time series. I tried this code:

where y1 and y2 both have (440, 21) dimensions, but I have this error:

“Error when checking target: expected dense_4 to have 3 dimensions, but got array with shape (440, 21)”.

How should I reshape y1 and y2 so that they fit with the network?

Sorry, I cannot debug your code for you, perhaps post to stack overflow?

Check the write-up on LSTMs; it will help greatly. The output series must have dimensions (n, 2, 21): n samples with 2 time steps and 21 variables.

Y.reshape((440 // 2, 2, 21))

The x data already has 3 dimensions; change y to have 3 dimensions too: [samples, time steps, variables].

Great tutorial, it really helped me understand Model API better! THANKS!

I’m glad to hear that.

Hi Jason, this was a great post, especially for beginners learning the functional API. Would you mind writing a post that explains the code for an image segmentation problem step by step for beginners?

Thanks for the suggestion.

Hi Jason, thank you for such a great post. It helped me a lot to understand the functional API in Keras.

Could you please explain how we define the model.compile statement in multiple output case where each sub-model has a different objective function. For example, one output might be regression and the other classification as you mentioned in this post.

I believe you must have one objective function for the whole model.

Splendid tutorial as always!

Do you think you could make a tutorial about siamese neural nets in the future? It would be particularly interesting to see how a triplet loss model can be created in keras, one that recognizes faces, for example. The functional API must be the way to go, but I can’t imagine exactly how the layers should be connected.

Thanks for the suggestion Harry.

Good tutorial

Thanks a lot

Thanks.

Thank you very much for your tutorial! It helped me a lot!

I have a question about cost functions. I have one query and several documents: 1 relevant and 4 irrelevant. I would like a cost function that both maximizes SCORE(Q, D+) and minimizes SCORE(Q, D-). So I could have Delta = SUM{ Score(Q,D+) – Score(Q,Di-) } for i in (1..4)

Using the Hinge Loss cost function, I have L = max(0, 4 – Delta)

I would like to know whether taking the 4 documents, calculating their scores with the NN, and passing everything to the cost function is good practice.
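The loss described above can be written out directly. This is a minimal pure-Python sketch of exactly that formula, with the margin of 4 taken from the comment; the function name and score values are made up for illustration, and in practice the scores would come from the network.

```python
def ranking_hinge_loss(score_pos, scores_neg, margin=4.0):
    """L = max(0, margin - Delta), where Delta = sum_i (Score(Q, D+) - Score(Q, Di-))."""
    delta = sum(score_pos - s for s in scores_neg)
    return max(0.0, margin - delta)


# Hypothetical scores for one relevant and four irrelevant documents.
loss = ranking_hinge_loss(0.9, [0.1, 0.2, 0.3, 0.4])
print(round(loss, 6))  # 1.4

# Once Delta reaches the margin, the loss is zero.
print(ranking_hinge_loss(2.0, [0.5, 0.5, 0.5, 0.5]))  # 0.0
```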

I was wondering if was possible to have two separate layers as inputs to the output layer, without concatenating them in a new layer and then having the concatenated layer project to the output layer. If you can’t do this with Keras, could you suggest another library that allows you to do this? I am new to neural networks, so would prefer a library that is suitable for newbies.

There are a host of merge layers to choose from besides concat.

Does that help?

I have two images: the first image’s label is good, and the second image’s label is bad. I want to pass both images to a deep learning model at the same time for training. While testing, I will have two unlabeled images, and I want to detect which one is good and which one is bad. Could you please tell me how to do it?

You will need a model with two inputs, one for each image.

Do you have any examples of giving two inputs to a model?

Yes, see this caption example for inputting a photo and text:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Thanks so much for the tutorial! It is much appreciated.

Where I am confused is a model with multiple inputs and multiple outputs. To make it simple, let’s say we have two input layers, some shared layers, and two output layers. I was able to build this in Keras and get a model printout that looks as expected, but when I go to fit the model, Keras complains: ValueError: All input arrays (x) should have the same number of samples

Is it not possible to feed in inputs of different sizes?

Correct. Inputs must be padded to the same length.
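A minimal NumPy sketch of padding, assuming variable-length 1D sequences that are zero-padded at the end to a common length. Keras also provides a pad_sequences utility for this; plain NumPy keeps the example self-contained, and the helper name `pad_to_length` is hypothetical. Separately, every input array passed to fit() must have the same number of samples along its first axis, which is what the error message checks.

```python
import numpy as np


def pad_to_length(sequences, length, value=0.0):
    """Zero-pad (or truncate) each 1D sequence at the end so all have the same length."""
    out = np.full((len(sequences), length), value, dtype=float)
    for i, seq in enumerate(sequences):
        seq = np.asarray(seq, dtype=float)[:length]
        out[i, :len(seq)] = seq
    return out


# Hypothetical sequences of different lengths.
padded = pad_to_length([[1, 2, 3], [4, 5], [6]], length=4)
print(padded)
# [[1. 2. 3. 0.]
#  [4. 5. 0. 0.]
#  [6. 0. 0. 0.]]
```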

Hi,

At the start of the post, you talked about the hanging dimension to accommodate the mini-batch size. Could you kindly explain this a little?

My feature matrix is a NumPy N-d array in one-hot-encoded form: (6000,200), and my batch size = 150.

Does this mean I should give shape=(200,)?

* batch size = 50.

Thanks!

Sorry, it means a numpy array where there is really only 1D of data with the second dimension not specified.

For example:

Results in:

It’s 1D, but looks confusing to beginners.

This means I should use shape=(200,).
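In other words, the shape passed to `Input` describes a single sample, and the batch (sample) dimension is left out. A small NumPy sketch using the shapes from the question; the commented `Input` line shows how the result would be used with the functional API from this post.

```python
import numpy as np

# One-hot encoded feature matrix from the question: 6000 samples, 200 features each.
X = np.zeros((6000, 200))

# Drop the first (sample) axis to get the per-sample shape.
sample_shape = X.shape[1:]
print(sample_shape)  # (200,)

# visible = Input(shape=sample_shape)  # same as Input(shape=(200,))
# The batch size (e.g. 50) is chosen later, in fit(..., batch_size=50), not in Input.
```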

Thanks a lot for the prompt reply !!!

How do I handle a case with multiple inputs and multiple outputs?

Simply combine some of the examples from this post.

Hi Jason,

Thank you very much for your blog; it’s easy to understand through the examples. I find that learning from examples is one of the fastest ways to learn new things.

In your post, I am a little confused about the multi-input case. Suppose I have 1 image, but I want a 32x32x3 RGB version and a 64x64x1 grayscale version, one for each Conv branch. How can the network know which is which? When we define the network we only specify the input_shape; we don’t say which kind of image should go into the Conv layers in branch 1 or branch 2. In the fit method we also just pass the inputs and outputs, without any details. And what if I want the grayscale version to be 32x32x3 (3 channels because I want to triplicate the grayscale channel)? How can the network recognize that the first branch is for grayscale? Sorry if I am missing something simple. Thanks again for your post; I always follow your posts.

You could run all images through one input and pad the smaller image to the dimensions of the larger image. Or you can use a multiple input model and define two separate input shapes.
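A minimal NumPy sketch of the two preprocessing ideas in this thread, assuming channels-last arrays: zero-padding a 32x32 image up to 64x64, and repeating a single grayscale channel three times. The sizes come from the question; the arrays are placeholders.

```python
import numpy as np

rgb_small = np.ones((32, 32, 3))    # placeholder 32x32 RGB image
gray_large = np.ones((64, 64, 1))   # placeholder 64x64 grayscale image

# Zero-pad height and width by 16 pixels on each side (32 + 16 + 16 = 64); channels are not padded.
rgb_padded = np.pad(rgb_small, ((16, 16), (16, 16), (0, 0)), mode="constant")
print(rgb_padded.shape)  # (64, 64, 3)

# Repeat the single grayscale channel 3 times along the last axis.
gray_3ch = np.repeat(gray_large, 3, axis=-1)
print(gray_3ch.shape)  # (64, 64, 3)
```

With a multiple-input model, Keras matches the arrays passed to fit() to the model’s inputs by position, in the order given in Model(inputs=[...]). That ordering is how each branch receives the intended version of the image.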

Hi,

When I run this functional API model with k-fold cross-validation, the number in the name of the dense layer keeps increasing in the fitted model returned for each fold.

For example, in the first fold it’s “dense_2_acc”, then in the 2nd fold it’s “dense_5_acc”.

But my model summary shows that my model is correct. Could you kindly tell me why the names change in the “history” object of the fitted model for each fold?

regards,

Sorry, I have not seen this behavior. Perhaps it’s a fault? You could try posting to the Keras list:

https://machinelearningmastery.com/get-help-with-keras/

This was a fantastic and concise beginner tutorial for building neural networks with Keras. Great job !

Thanks, I’m glad to hear that.

Thanks for the tutorial.

“When input data is one-dimensional, such as for a multilayer Perceptron, the shape must explicitly leave room for the shape of the mini-batch size used when splitting the data when training the network.

Therefore, the shape tuple is always defined with a hanging last dimension when the input is one-dimensional (2,)

…

visible = Input(shape=(2,))

”

I was a bit confused at first after reading these 2 sentences.

Regarding the trailing comma: a trailing comma is always required when we have a tuple with a single element. Otherwise (2) returns only the value 2, not a tuple with the value 2. https://docs.python.org/3/reference/expressions.html#expression-lists

As the shape parameter for Input should be a tuple ( https://keras.io/layers/core/#input ), we do not have any option other than to add a comma when we have a single element to be passed.

So, I’m not able to get the meaning implied in “the shape must explicitly leave room for the shape of the mini-batch size … Therefore, the shape tuple is always defined with a hanging last dimension”

Hi Jason,

Thanks so much for such a great post.

In your shared input layer example, you have the same CNN models for feature extraction, and the outputs can be concatenated because both feature sets produce a binary classification result.

But what if we have a separate categorical classification model (for sequence classification) and a regression model (for time series) that rely on the same input data? Is it possible to concatenate the categorical classification model (which produces more than two classes) with the regression model, so that the final result after concatenation is a binary classification?

Your opinion, in this case, is much appreciated.

Thank you.

Not sure I follow. Perhaps try it and as many variations as you can think of, and see.

Hi Jason, thanks for the neat post.

You’re welcome, I’m glad it helped.

Thanks Jason, great and helpful post

Can you go over combining wide and deep models using the functional API?

Thanks for the suggestion.

Do you have a specific question or concern with the approach?

Thanks Jason,

Your articles are the best and the consistency across articles is something to be admired.

Could you also explain residual nets using the functional API?

Thanks

Thanks, and thanks for the suggestion.

Thank you so much for your great post.

I have one question, though. I use the Multiple Input and Output Models with the same network for each of my inputs. I want to share the weights between them; can you please point out how I should approach that?

Copy them between layers or use a wrapper that lets you reuse a layer, e.g. like timedistributed.

All ur posts r awesome. God bless u 🙂

Thanks, I’m glad they help.

Really, amazing tutorial.

Why don’t u complete it with the testing step “predict”?

Thanks again 🙂

Thanks for the suggestion.

I explain how to make predictions here:

https://machinelearningmastery.com/how-to-make-classification-and-regression-predictions-for-deep-learning-models-in-keras/

The “Shared Input Layer” is very interesting. I wonder if the 2 convolutional structures can be replaced by 2 pre-trained models (let’s say VGG16 and Inception). What do u think?

Sure, try it.

This question is with reference to your older post on “Multilayer Perceptron Using the Window Method” : https://machinelearningmastery.com/time-series-prediction-with-deep-learning-in-python-with-keras/

In your code there, you have successfully created a model using a multidimensional array input, without having to flatten it.

Is it possible to do this with the Keras functional API as well? Every solution I find seems to require flattening the data; however, I’m trying to do a time series analysis, and flattening would lead to a loss of information.

The shape of the input is unrelated to the use of the functional API.

You can use either API regardless of the shape of the data.

Time series data must be transformed into a supervised learning problem:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/

Hey,

Thanks for the blog. I want to know how I can extract features from an intermediate layer in an AlexNet model. I am using the functional API.

You can create a new model that ends at the layer of interest, then use a forward propagation (e.g. call predict()) to get the features.

I give an example for VGG in the image captioning tutorial:

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hi Jason!

Great blog once again, thank you. I have a question regarding the current research on multi-input models.

I’m building a model that combines text sequences and patient characteristics. For this I’m using an LSTM ‘branch’ that I concatenate with a normal ‘branch’ in a neural network. I was wondering whether you have come across any good papers/articles that go a little deeper into such architectures, possibly giving me some insights into how to optimize this model and understand it thoroughly.

With kind regards,

Joost Zeeuw

Not off hand. I recommend experimenting a lot with the architecture and see what works best for your dataset.

I’d love to hear how you go.

Hi Jason! Really great blog!

My question is: how do I feed this kind of model with a generator? Well, two generators actually: one for testing and one for training. I’m trying to do phoneme classification, BTW.

I have tried something like:

#model

input_data = Input(name='the_input', shape=(None, self.n_feats))

x = Bidirectional(LSTM(20, return_sequences=False, dropout=0.3), merge_mode='sum')(input_data)

y_pred = Dense(39, activation="softmax", name="out")(x)

labels = Input(name='the_labels', shape=[39], dtype='int32') # not sure of this but how to compare labels otherwise??

self.model = Model(inputs=[input_data, labels], outputs=y_pred)

...

# I'm gonna omit the optimization and compile steps for simplicity

my generator yields something like this:

return ({'the_input':data_x, 'the_labels':labels},{'out':np.zeros([batch_size, np.max(seq_lens), num_classes])})

Also, just to be sure: for sequence classification (many-to-one), I should use return_sequences=False in the recurrent layers and Dense instead of TimeDistributed, right?

Thanks!

Isaac

I have an example of using a generator here (under progressive loading):

https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

Hi Jason,

Thanks for the blog. It is very interesting. After reading it, I have one question you may be able to help me with: what if one wants to extract features from an intermediate layer of a fine-tuned Siamese network that was pre-trained with a feed-forward multilayer perceptron?

Is there any lead that you can provide. It would be very helpful to me.

You can get the weights for a network via layer.get_weights()

Hi, Thanks for your article.

I have one question.

What is the most efficient way to combine layers for discrete and continuous features?

Often an integer encoding, one hot encoding or an embedding layer are effective for categorical variables.
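A minimal NumPy sketch of the first two options, assuming one categorical column with string values and one continuous column (both hypothetical): integer-encode the categories, one-hot encode them, and concatenate the result with the continuous feature. An embedding layer would instead take the integer codes as its input.

```python
import numpy as np

colors = np.array(["red", "green", "blue", "green"])  # hypothetical categorical feature
heights = np.array([[1.5], [2.0], [0.7], [1.1]])      # hypothetical continuous feature

# Integer encoding: map each distinct category to an index (np.unique sorts the categories).
categories, codes = np.unique(colors, return_inverse=True)
print(categories, codes)  # ['blue' 'green' 'red'] [2 1 0 1]

# One hot encoding: row i of the identity matrix represents category index i.
one_hot = np.eye(len(categories))[codes]

# Combine with the continuous feature into one input matrix.
features = np.concatenate([one_hot, heights], axis=1)
print(features.shape)  # (4, 4)
```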

Hi Jason, your blog is very good. I want to add a custom layer in Keras. Can you please explain how I can do that?

Thanks.

I hope to cover that topic in the future.

Hi Jason,

Thanks for the excellent post. I attempted to implement a 1 hidden layer with 2 neurons followed by an output layer, both dense with sigmoid activation to train on XOR input – classical problem, that of course has a solution. However, without specifying a particular initialisation, I was unable to train this minimal neuron network toward a solution (with high enough number of neurons, I think it is working independent of initialisation). Could you include such a simple example as a test case of Keras machinery and perhaps comment on the pitfalls where presumably the loss function has multiple critical points?

Cheers,

Matt

Thanks for the suggestion.

XOR is really only an academic exercise anyway, perhaps focus on some real datasets?

Thanks for your excellent tutorials. I am trying to use the Keras functional API for my problem. I have two different sets of input, which I am trying to use in a two-input, one-output model.

My model looks like your “Multiple Input Model” example and as you mentioned I am doing the same thing as :

model = Model(inputs=[visible1, visible2], outputs=output)

and I am fitting the model with this code:

model.fit([XTrain1, XTrain2], [YTrain1, YTrain2], validation_split=0.33, epochs=100, batch_size=150, verbose=2), but I’m receiving an error about a size mismatch.

The output TensorShape has a dimension of 3, and YTrain1 and YTrain2 also have the shape (–, 3). Do you have any suggestions on how to resolve this error? I would be really thankful.

If the model has one output, you only need to specify one yTrain.

Hi

Thank you for your reply.

I have another question which I will be grateful if you could help me with that.

In your Multilayer Perceptron example, where the input data is 1-D, if I add a reshape layer after Dense4 to reshape the output into a 2D object, is it possible to view this 2D feature space as an image?

Is there any syntax to plot this 2D tensor object?

Thanks

If you fit an MLP on an image, the image pixels must be flattened to 1D before being provided as input.

Thanks, Jason

Can you give me an example of how to combine Conv1D => BiLSTM => Dense

I tried, but I can’t figure out how to combine them.

This will help as a start:

https://machinelearningmastery.com/cnn-long-short-term-memory-networks/

Thank you so much for quick reply Jason, I read this article, very useful!

But when I apply it, I see something very strange, and I don’t know why:

Let see my program, it runs normally, but the val_acc, I don’t know why it always .] – ETA: 0s – loss: 0.2195 – acc: 0.8978

Epoch 00046: loss improved from 0.22164 to 0.21951,

40420/40420 [==============================] – 386s – loss: 0.2195 – acc: 0.8978 – val_loss: 5.2004 – val_acc: 0.2399

Epoch 48/100

40416/40420 [============================>.] – ETA: 0s – loss: 0.2161 – acc: 0.9010

Epoch 00047: loss improved from 0.21951 to 0.21610,

40420/40420 [==============================] – 390s – loss: 0.2161 – acc: 0.9010 – val_loss: 5.0661 – val_acc: 0.2369

Epoch 49/100

40416/40420 [============================>.] – ETA: 0s – loss: 0.2274 – acc: 0.8965

Epoch 00048: loss did not improve

40420/40420 [==============================] – 393s – loss: 0.2276 – acc: 0.8964 – val_loss: 5.1333 – val_acc: 0.2412

Epoch 50/100

40416/40420 [============================>.] – ETA: 0s – loss: 0.2145 – acc: 0.9028

Epoch 00049: loss improved from 0.21610 to 0.21455,

40420/40420 [==============================] – 395s – loss: 0.2146 – acc: 0.9027 – val_loss: 5.3898 – val_acc: 0.2344

Epoch 51/100

40416/40420 [============================>.] – ETA: 0s – loss: 0.2100 – acc: 0.9051

Epoch 00050: loss improved from 0.21455 to 0.20999,

You may need to tune the network to your problem.

I tried many times, but even when it overfits the whole dataset, val_acc is still low.

I know it overfits the whole dataset because when I use my predict program on all of the data, the accuracy is as high as the training accuracy.

Thank you

Perhaps try adding some regularization like dropout?

Perhaps getting more data?

Perhaps try reducing the number of training epochs?

Perhaps try reducing the size of the model?

thank you, Jason,

– I am testing by adding some dropout layers.

– I don’t need to reduce the number of training epochs, because I monitor them closely myself.

– For the size of the model, I am training 4 programs in parallel to check it.

– The last one, getting more data, I will try if all of the above give better results.

Sounds great.

Hi Jason, thanks for this awesome post,

really helpful.

When I run this code:

l_input = Input(shape=(336, 25))

adense = GRU(256)(l_input)

bdense = Dense(64, activation='relu')(adense)

.

.

.

I get this error:

ValueError: Invalid reduction dimension 2 for input with 2 dimensions. for ‘model_1/gru_1/Sum’ (op: ‘Sum’) with input shapes: [?,336], [2] and with computed input tensors: input[1] = .

I’m really exhausted, and I haven’t found the answer anywhere.

What should I do?

I appreciate your help.

Sounds like the data and expectations of the model do not match. Perhaps change the data or the model?

This is a particularly helpful tutorial, but I cannot begin to use it without a data source.

Thanks.

I left a previous reply about needing data sources. I see other readers don’t have this problem, but it seems I am still at the stage where I don’t see what data to input or how to preprocess it for these examples. I am also confused, as it looks like a PNG image is a common source.

I am particularly interested in example that takes text and question and returns an answer – where would I find such input and how to fit into your code?

Jason, which dataset from your GitHub datasets would be good for this LSTM tutorial? Or is there an online dataset you could recommend? I am interested in both LSTMs for text processing (not IMDB) and the Keras functional API.

Not sure I follow what you are trying to achieve?

Any chance of a tutorial on this using some real or toy data as a vehicle?

I have many deep learning tutorials on real dataset, you can get started here:

https://machinelearningmastery.com/start-here/#deeplearning

And here:

https://machinelearningmastery.com/start-here/#nlp

And here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Thank you Mr.Jason,

Can you help me predict solar radiation using a Kalman filter?

Do you have MATLAB code for a Kalman filter for solar radiation prediction?

Best regards

Sorry, I don’t have examples in MATLAB, nor an example of a Kalman filter.

Hello, how are you? Sorry for the inconvenience. I’m following your explanations of Keras with neural networks and convolutional neural networks. I’m trying to perform a convolution using one set of images with three channels each and another set of images with one channel each. When I run a CNN with Keras on each type of image separately, I get a result. So I have two inputs and one output. The inputs are X_train1 with shape (24484,227,227,1) and X_train2 with shape (24484,227,227,3). I perform a convolution separately for each input, then use the “merge” command from Keras, and then feed the merged result into a CNN. However, I get the following error:

ValueError: could not broadcast input array from shape (24484,227,227,1) into shape (24484,227,227).

I already tried removing the 1 to get the shape (24484,227,227), and that seemed to work. But then the error happens again for X_train2, with the following message:

ValueError: could not broadcast input array from shape (24484,227,227,3) into shape (24484,227,227).

However, I cannot remove the “3”.

Could you help me to eliminate this error?

My code is:

X_train1: shape of (24484,227,227,1)

X_train2: shape of (24484,227,227,3)

X_val1: shape of (2000,227,227,1)

X_val2: shape of (2000,227,227,3)

batch_size=64

num_epochs=30

DROPOUT = 0.5

model_input1 = Input(shape = (img_width, img_height, 1))

DM = Convolution2D(filters = 64, kernel_size = (1,1), strides = (1,1), activation = "relu")(model_input1)

DM = Convolution2D(filters = 64, kernel_size = (1,1), strides = (1,1), activation = "relu")(DM)

model_input2 = Input(shape = (img_width, img_height, 3))

RGB = Convolution2D(filters = 64, kernel_size = (1,1), strides = (1,1), activation = "relu")(model_input2)

RGB = Convolution2D(filters = 64, kernel_size = (1,1), strides = (1,1), activation = "relu")(RGB)

merge = concatenate([DM, RGB])

# First convolutional Layer

z = Convolution2D(filters = 96, kernel_size = (11,11), strides = (4,4), activation = "relu")(merge)

z = BatchNormalization()(z)

z = MaxPooling2D(pool_size = (3,3), strides=(2,2))(z)

# Second convolutional Layer

z = ZeroPadding2D(padding = (2,2))(z)

z = Convolution2D(filters = 256, kernel_size = (5,5), strides = (1,1), activation = "relu")(z)

z = BatchNormalization()(z)

z = MaxPooling2D(pool_size = (3,3), strides=(2,2))(z)

# Rest 3 convolutional layers

z = ZeroPadding2D(padding = (1,1))(z)

z = Convolution2D(filters = 384, kernel_size = (3,3), strides = (1,1), activation = "relu")(z)

z = ZeroPadding2D(padding = (1,1))(z)

z = Convolution2D(filters = 384, kernel_size = (3,3), strides = (1,1), activation = "relu")(z)

z = ZeroPadding2D(padding = (1,1))(z)

z = Convolution2D(filters = 256, kernel_size = (3,3), strides = (1,1), activation = "relu")(z)

z = MaxPooling2D(pool_size = (3,3), strides=(2,2))(z)

z = Flatten()(z)

z = Dense(4096, activation="relu")(z)

z = Dropout(DROPOUT)(z)

z = Dense(4096, activation="relu")(z)

z = Dropout(DROPOUT)(z)

model_output = Dense(num_classes, activation='softmax')(z)

model = Model([model_input1,model_input2], model_output)

model.summary()

sgd = SGD(lr=0.001, decay=1e-6, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy',

optimizer=sgd,

metrics=['accuracy'])

print('RGB_D')

datagen_train = ImageDataGenerator(rescale=1./255)

datagen_val = ImageDataGenerator(rescale=1./255)

print("fit_generator")

# Train the model using the training set…

Results_Train = model.fit_generator(datagen_train.flow([X_train1,X_train2], [Y_train1,Y_train2], batch_size = batch_size),

steps_per_epoch = nb_train_samples//batch_size,

epochs = num_epochs,

validation_data = datagen_val.flow([X_val1,X_val1], [Y_val1,Y_val2],batch_size = batch_size),

shuffle=True,

verbose=1)

print(Results_Train.history)

Looks like a mismatch between your data and your model. You can reshape your data or change the expectations of your model.

Thank you for all the informations.

Do you have an example using the Keras API with shared layers, 2 inputs, and one output?

I want to know how to prepare Xtrain, Ytrain, Xtest, and Ytest for each input.

I think it would be simpler with an example.

Thank you.

Perhaps this will help:

https://machinelearningmastery.com/lstm-autoencoders/

Hi Jason.

Do you know of a way to combine models each with a different loss function?

Leeor.

Yes, as an ensemble after they are trained.

Hi Jason,

thank you for your wonderful tutorials!

I just wonder about the “Convolutional Neural Network” example. Isn’t there a Flatten layer missing between max_pooling2d_2 and dense_1?

Something like:

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)

flatt = Flatten()(pool2)

hidden1 = Dense(10, activation='relu')(flatt)

Best regards

I think you’re right! Fixed.

Hi Jason

In the Multiple Input Model, how did you name the layers?

```
____________________________________________________________________________________________________
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
input_1 (InputLayer)             (None, 64, 64, 1)     0
____________________________________________________________________________________________________
conv2d_1 (Conv2D)                (None, 61, 61, 32)    544         input_1[0][0]
____________________________________________________________________________________________________
conv2d_2 (Conv2D)                (None, 57, 57, 16)    1040        input_1[0][0]
____________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)   (None, 30, 30, 32)    0           conv2d_1[0][0]
____________________________________________________________________________________________________
max_pooling2d_2 (MaxPooling2D)   (None, 28, 28, 16)    0           conv2d_2[0][0]
____________________________________________________________________________________________________
flatten_1 (Flatten)              (None, 28800)         0           max_pooling2d_1[0][0]
____________________________________________________________________________________________________
flatten_2 (Flatten)              (None, 12544)         0           max_pooling2d_2[0][0]
____________________________________________________________________________________________________
concatenate_1 (Concatenate)      (None, 41344)         0           flatten_1[0][0]
                                                                   flatten_2[0][0]
____________________________________________________________________________________________________
dense_1 (Dense)                  (None, 10)            413450      concatenate_1[0][0]
____________________________________________________________________________________________________
dense_2 (Dense)                  (None, 1)             11          dense_1[0][0]
====================================================================================================
Total params: 415,045
Trainable params: 415,045
Non-trainable params: 0
____________________________________________________________________________________________________
```

If the model is built at one time, the default names that Keras assigns (input_1, conv2d_1, and so on) are fine.

If the models are built at different times, I give each head its own arbitrary prefix, like: name = 'head_1_' + name
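A minimal sketch of that second case, assuming a hypothetical helper `build_head(inputs, prefix)` that builds one CNN head and passes an explicit `name=` to every layer, so heads built separately never collide on names. The input shapes and layer sizes are illustrative.

```python
from keras.layers import Input, Conv2D, MaxPooling2D, Flatten, Dense, concatenate
from keras.models import Model

def build_head(inputs, prefix):
    """Hypothetical helper: one CNN head whose layer names all start with `prefix`."""
    x = Conv2D(16, kernel_size=4, activation='relu', name=prefix + 'conv')(inputs)
    x = MaxPooling2D(pool_size=(2, 2), name=prefix + 'pool')(x)
    return Flatten(name=prefix + 'flatten')(x)

in1 = Input(shape=(64, 64, 1), name='head_1_input')
in2 = Input(shape=(32, 32, 3), name='head_2_input')
# Each head gets its own prefix, so every layer name in the model stays unique
merged = concatenate([build_head(in1, 'head_1_'), build_head(in2, 'head_2_')], name='merge')
output = Dense(1, activation='sigmoid', name='output')(merged)
model = Model(inputs=[in1, in2], outputs=output)

layer_names = [layer.name for layer in model.layers]
print(layer_names)
```

Explicit names also make it easy to look a layer up later with `model.get_layer('head_2_conv')`.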

Very good insight into Keras.

I also read your Deep_Learning_Time_Series_Forecasting and it was very helpful.

Thanks.

It was your email that prompted me to update this post with the Python syntax explanation!

Wow, thanks a lot for all your posts; you have saved me a lot of time in learning and prototyping!

I use an LSTM layer and want to feed its output to a Dense layer to get a first predicted value, then insert this new value into the first LSTM output and feed a new LSTM layer. I'm stuck with the dimension problem…

main_inputs = Input(shape=(train_X.shape[1], train_X.shape[2]), name='main_inputs')

ly1 = LSTM(100, return_sequences=False)(main_inputs)

auxiliary_output = Dense(1, activation='softmax', name='aux_output')(ly1)

merged_input = concatenate([main_inputs, auxiliary_output])

ly2 = LSTM(100, return_sequences=True)(merged_input)

main_output = Dense(1, activation='softmax', name='main_output')(ly2)

Any suggestion is welcome

What’s the problem exactly?

I don't have the capacity to debug your code; perhaps post to StackOverflow?
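One way to reconcile the shapes in the code above, sketched under assumptions: `main_inputs` is 3D (samples, timesteps, features) while `auxiliary_output` is 2D (samples, 1), so `concatenate` cannot join them directly. Repeating the auxiliary prediction once per timestep with `RepeatVector` makes it 3D, so it can be appended as an extra feature. The timestep and feature counts are hypothetical. The Dense layers use a linear activation because a single-unit softmax always outputs 1.0, and `ly2` uses `return_sequences=False` on the assumption that one prediction per sample is wanted.

```python
import numpy as np
from keras.layers import Input, LSTM, Dense, RepeatVector, concatenate
from keras.models import Model

# Hypothetical data shape: (samples, timesteps, features)
n_timesteps, n_features = 10, 3

main_inputs = Input(shape=(n_timesteps, n_features), name='main_inputs')
ly1 = LSTM(100, return_sequences=False)(main_inputs)          # (None, 100)
# One linear unit for a real-valued prediction
auxiliary_output = Dense(1, name='aux_output')(ly1)           # (None, 1)
# Repeat the auxiliary prediction once per timestep so it becomes 3D
aux_repeated = RepeatVector(n_timesteps)(auxiliary_output)    # (None, 10, 1)
# Both tensors are now 3D and can be joined on the last (feature) axis
merged_input = concatenate([main_inputs, aux_repeated])      # (None, 10, 4)
ly2 = LSTM(100, return_sequences=False)(merged_input)
main_output = Dense(1, name='main_output')(ly2)
model = Model(inputs=main_inputs, outputs=[main_output, auxiliary_output])

X = np.random.rand(8, n_timesteps, n_features)
main_pred, aux_pred = model.predict(X, verbose=0)
```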

Thanks Jason for the post 🙂

In the multi-input CNN model example, do the two images that enter the model at the same time have the same index?

Do the two images that enter the model at the same time have the same class?

In training, does each black-and-white image enter the model many times (paired with every color image), or only once (paired with a single color image)?

Thanks.

Both images are provided to the model at the same time.

Do the two images that enter the model at the same time have the same class?

It really depends on the problem that you are solving.

Hi Jason, I was looking through the comments hoping someone had asked a similar question.

I have images and their corresponding numeric values. If I construct a fine-tuned VGG model for the images and an MLP (or any other model) for the numeric values and concatenate them just as you did in this post, how do I keep the correspondence between them?

Is it practical to feed the images (and numeric values) into the model matched by some key, say, the image names? The names of my images match one column in my numeric dataset that stores the sample names.

Thanks a lot.

You can use a multi-input model, one input for the image, one for the number.

The training dataset would be two arrays: one array of images and one of numbers. The rows would correspond.

Does that help?
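A minimal sketch of that setup, with a tiny Conv2D branch standing in for the fine-tuned VGG model to keep it short. The shapes, layer sizes, and random data are assumptions. The two input arrays are passed to `fit()` as a list in the same order as `inputs=[...]`; Keras slices (and shuffles) all input arrays with the same row indices, so row i of each array stays together.

```python
import numpy as np
from keras.layers import Input, Conv2D, MaxPooling2D, Flatten, Dense, concatenate
from keras.models import Model

n_samples = 16
# Hypothetical data: row i of `images` and row i of `numbers` describe the same sample
images = np.random.rand(n_samples, 32, 32, 3)
numbers = np.random.rand(n_samples, 5)
y = np.random.randint(0, 2, size=(n_samples, 1))

# Image branch (stand-in for a pretrained CNN)
img_in = Input(shape=(32, 32, 3))
x1 = Conv2D(8, kernel_size=3, activation='relu')(img_in)
x1 = MaxPooling2D(pool_size=(2, 2))(x1)
x1 = Flatten()(x1)
# Numeric branch
num_in = Input(shape=(5,))
x2 = Dense(8, activation='relu')(num_in)
# Merge both branches and predict
merged = concatenate([x1, x2])
output = Dense(1, activation='sigmoid')(merged)
model = Model(inputs=[img_in, num_in], outputs=output)
model.compile(optimizer='adam', loss='binary_crossentropy')

# Inputs are passed as a list, in the same order as Model(inputs=[...])
history = model.fit([images, numbers], y, epochs=2, batch_size=8, verbose=0)
```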

Thank you very much. To clarify for myself, please let me know your feedback for these:

1. Do you mean that I need to convert the images into an array and, based on their names, append them to the numeric data file (CSV) as a new column?

2. I haven't seen numeric data that contains the RGB values of images as an array in one column. Could you post some related links or sources?

I appreciate your feedback. Thanks again!

No. There would be one array of images and one array of numbers and the rows between them would correspond. e.g. row 0 in the first array would be an image that would relate to the number in row 0 of the second array.

This will help you work with arrays if it is new:

https://machinelearningmastery.com/gentle-introduction-n-dimensional-arrays-python-numpy/

This will help you load images as arrays:

https://machinelearningmastery.com/how-to-load-and-manipulate-images-for-deep-learning-in-python-with-pil-pillow/
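If the images and the numeric table are stored in different orders, one option is to reorder the numeric rows by name before training so that row i of both arrays refers to the same sample. A minimal sketch with NumPy; the names, values, and tiny stand-in images are hypothetical.

```python
import numpy as np

# Hypothetical inputs: image names in the order the images were loaded,
# and a numeric table whose rows carry the matching sample names
image_names = ['cat_02', 'cat_01', 'cat_03']
images = np.stack([np.full((4, 4, 3), i) for i in range(3)])  # stand-in pixel data

table_names = ['cat_01', 'cat_02', 'cat_03']
table_values = np.array([[0.1, 1.0], [0.2, 2.0], [0.3, 3.0]])

# Map each sample name to its row in the table, then reorder the table
# so that numbers[i] describes the same sample as images[i]
row_of = {name: i for i, name in enumerate(table_names)}
order = [row_of[name] for name in image_names]
numbers = table_values[order]
```

After reordering, `images` and `numbers` can be passed together to a two-input model's `fit()` as a list.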

Hi, now I want to use 1-D data such as wave.shape=(360,) as input and 3-D data such as velocity.shape=(560,7986,3) as output. Can this problem be solved by training a multilayer perceptron on this data? I have tried, but I could not solve the shape problem; it shows "ValueError: Error when checking target: expected dense_3 to have 2 dimensions, but got array with shape (560, 7986, 3)"

Perhaps, it really comes down to what the data represents.
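On the shape error itself: a Dense layer produces 2D output (samples, units), so it cannot match a 3D target directly. One option, sketched under assumptions, is to predict all 7986*3 values as one flat vector and add a `Reshape` layer at the end. This assumes the inputs are arranged as an array of shape (560, 360), one 360-step wave per sample; the hidden layer size and random data are illustrative.

```python
import numpy as np
from keras.layers import Input, Dense, Reshape
from keras.models import Model

n_steps = 360            # length of each input wave (assumed per sample)
out_shape = (7986, 3)    # per-sample target shape

inputs = Input(shape=(n_steps,))
hidden = Dense(64, activation='relu')(inputs)
# Predict all 7986*3 values as one flat vector...
flat_out = Dense(out_shape[0] * out_shape[1])(hidden)
# ...then reshape it to match the 3D target
outputs = Reshape(out_shape)(flat_out)
model = Model(inputs=inputs, outputs=outputs)
model.compile(optimizer='adam', loss='mse')

waves = np.random.rand(4, n_steps)          # stand-in for (560, 360) real data
velocity = np.random.rand(4, *out_shape)    # stand-in for (560, 7986, 3)
model.fit(waves, velocity, epochs=1, verbose=0)
pred = model.predict(waves, verbose=0)
```

An equivalent alternative is to flatten the targets to (560, 23958) and reshape the predictions afterwards. Whether an MLP is a good fit still depends on what the data represents.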

Hello, thank you for sharing the contents 🙂

Is the 'multi-output' case the same as 'multi-task deep learning'?

I am trying to build a multi-task deep learning model and found this post.

Thank you again.

Yes, it can be.
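As an illustration, a multi-output model used for multi-task learning might look like the following minimal sketch: shared layers feed one output head per task, each head gets its own loss, and `loss_weights` balances how much each task contributes to the total loss. The two tasks (binary classification and regression), layer sizes, weights, and random data are assumptions.

```python
import numpy as np
from keras.layers import Input, Dense
from keras.models import Model

inputs = Input(shape=(10,))
# Shared layers learn a representation used by both tasks
shared = Dense(32, activation='relu')(inputs)
shared = Dense(16, activation='relu')(shared)
# One output head per task
class_out = Dense(1, activation='sigmoid', name='class_out')(shared)
reg_out = Dense(1, name='reg_out')(shared)
model = Model(inputs=inputs, outputs=[class_out, reg_out])
# One loss per head, in output order; loss_weights scales each term in the total loss
model.compile(optimizer='adam',
              loss=['binary_crossentropy', 'mse'],
              loss_weights=[1.0, 0.5])

X = np.random.rand(32, 10)
y_class = np.random.randint(0, 2, size=(32, 1))
y_reg = np.random.rand(32, 1)
history = model.fit(X, [y_class, y_reg], epochs=2, verbose=0)
p_class, p_reg = model.predict(X, verbose=0)
```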

Jason, a very modest contribution for now: just a typo, in

…Shared Feature Extraction Layer

In this section, we will two parallel submodels to interpret the output…

It looks like a verb is missing from the sentence; it sounds strange.

If it sounds OK to you, just disregard this; it may just be that I'm tired.

I hope to support this extraordinary site more substantially in the future.

(You don’t need to post this comment)

Regards

Antonio

Thanks, fixed!

(I like to keep these comments in there, to show that everything is a work in progress and getting incrementally better)

Jason, thank you very much for your tutorial; it is very helpful! I have a question: how would the ModelCheckpoint callback work with multiple outputs? If I set save_best_only = True, what will be saved? Is it the model that yields the best overall result for both outputs, or will two models be saved?

Really good question. I go into this in great detail in this post:

https://machinelearningmastery.com/how-to-stop-training-deep-neural-networks-at-the-right-time-using-early-stopping/
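A minimal sketch for the multi-output case: a single ModelCheckpoint writes a single file, and which epoch it keeps depends only on the one quantity named in `monitor`. For a multi-output model, `'val_loss'` is the total validation loss (the weighted sum of the per-output losses), so "best" means best overall. To track one head instead, monitor that head's metric; the per-output names (for example `val_out1_loss`) depend on the output layer names and on the Keras version, so check `history.history.keys()` first. The model, data, and file name below are illustrative.

```python
import os
import tempfile
import numpy as np
from keras.layers import Input, Dense
from keras.models import Model
from keras.callbacks import ModelCheckpoint

inputs = Input(shape=(4,))
hidden = Dense(8, activation='relu')(inputs)
out1 = Dense(1, name='out1')(hidden)
out2 = Dense(1, name='out2')(hidden)
model = Model(inputs=inputs, outputs=[out1, out2])
model.compile(optimizer='adam', loss=['mse', 'mse'])

X = np.random.rand(64, 4)
y1, y2 = np.random.rand(64, 1), np.random.rand(64, 1)

# One checkpoint file, chosen by the single quantity named in `monitor`
path = os.path.join(tempfile.mkdtemp(), 'best.weights.h5')
checkpoint = ModelCheckpoint(path, monitor='val_loss', mode='min',
                             save_best_only=True, save_weights_only=True)
history = model.fit(X, [y1, y2], validation_split=0.25, epochs=3,
                    callbacks=[checkpoint], verbose=0)
print(sorted(history.history.keys()))
```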

Can you write a post on how to make partially connected layers in Keras, with predefined connections between neurons (from the previous layer to the next layer)?

Thanks for the suggestion.

Hi Jason,

If we want to write the predictions to a separate txt file, what do we have to add at the end of the code?

Thanks.

You can save the NumPy array to a CSV file directly with the savetxt() function.
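A minimal sketch with `numpy.savetxt()`, using a small hand-written array as a stand-in for the output of `model.predict()`; the file name and number format are assumptions.

```python
import os
import tempfile
import numpy as np

# Stand-in for the array returned by model.predict(), shape (samples, outputs)
predictions = np.array([[0.12, 0.88], [0.95, 0.05], [0.40, 0.60]])

path = os.path.join(tempfile.mkdtemp(), 'predictions.txt')
# One row per sample, values comma-separated, 4 decimal places
np.savetxt(path, predictions, delimiter=',', fmt='%.4f')

with open(path) as f:
    lines = f.read().splitlines()
print(lines)
```

For a multi-output model, `predict()` returns a list of arrays; these can be joined column-wise with `np.hstack()` before saving.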

Hi Jason, I really loved this article. I have an application where the data is in a CSV file of text with four columns, and I'm using the first three columns as input to predict the fourth column as output. I need to use all three of the first columns as input because the data is dependent. Can you guide me on how to train the model using GloVe?

Thanks

Perhaps this tutorial will help:

https://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

Hi Jason

Thanks again for the good tutorial. I want to know the difference when concatenating two layers for feature extraction. You use merge = concatenate([interp1, interp13])

Other people use merge = concatenate([interp1, interp13], axis = -1). Is there a difference between the two, and if so, what is it?

They are the same thing. The axis=-1 is the default and does not need to be specified. Learn more here:

https://keras.io/layers/merge/
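A quick way to confirm this, as a minimal sketch with illustrative layer widths: both calls join the tensors along the last (feature) axis, so a 4-unit and a 6-unit layer give a 10-wide output either way, with identical values.

```python
import numpy as np
from keras.layers import Input, Dense, concatenate
from keras.models import Model

inputs = Input(shape=(8,))
interp1 = Dense(4)(inputs)
interp13 = Dense(6)(inputs)

merge_default = concatenate([interp1, interp13])            # axis defaults to -1
merge_explicit = concatenate([interp1, interp13], axis=-1)  # same thing, spelled out
model = Model(inputs=inputs, outputs=[merge_default, merge_explicit])

a, b = model.predict(np.random.rand(3, 8), verbose=0)
```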

Hi Jason

Thanks for the good tutorial. I want to use the Multiple Input Model with fit_generator:

model.fit_generator(generator=fit_generator,
                    steps_per_epoch=steps_per_epoch_fit,
                    epochs=epochs,
                    verbose=1,
                    validation_data=val_generator,
                    validation_steps=steps_per_epoch_val)

But I get ValueError: Error when checking model input: the list of Numpy arrays that you are passing to your model is not the size the model expected. Expected to see 2 array(s), but instead got the following list of 1 arrays

My Keras code:

input1 = Input(shape=(145,53,63,40))

input2 = Input(shape=(145,133560))

#feature 1

conv1 = Conv3D(16, (3,3,3), data_format='channels_last')(input1)

pool1 = MaxPooling3D(pool_size=(2, 2,2))(conv1)

batch1 = BatchNormalization()(pool1)

ac1=Activation('relu')(batch1)

…….

ac3=Activation(‘relu’)(batch3)

flat1 = Flatten()(ac3)

#feature 2

gr1=GRU(8,return_sequences=True)(input2)

batch4 = BatchNormalization()(gr1)

ac4=Activation('relu')(batch4)

flat2= Flatten()(ac4)

# merge feature extractors

merge = concatenate([flat1, flat2])

output = Dense(2)(merge)

out=BatchNormalization()(output)

out1=Activation('softmax')(out)

# prediction output

model = Model(inputs=[input1,input2], outputs=out1)

opt2 = optimizers.Adam(lr=learn_rate, decay=decay)

model.compile(loss='categorical_crossentropy', optimizer=opt2,
              metrics=['accuracy'])

I’m eager to help, but I don’t have the capacity to debug your code, I have some suggestions here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code
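The error message suggests the generator yields one input array per batch while the model expects two. A minimal sketch of a generator for a two-input model, assuming a hypothetical `two_input_generator()` that yields `([batch_of_input1, batch_of_input2], targets)`. The tiny stand-in shapes replace (145, 53, 63, 40) and (145, 133560), and the model is reduced to two Flatten branches to keep it short. Recent Keras versions accept generators directly in `fit()`; `fit_generator()` is deprecated and was removed in Keras 3.

```python
import numpy as np
from keras.layers import Input, Dense, Flatten, concatenate
from keras.models import Model

def two_input_generator(x1, x2, y, batch_size):
    """Hypothetical generator: yields ([input1_batch, input2_batch], targets) forever."""
    n = len(y)
    while True:
        idx = np.random.permutation(n)
        for start in range(0, n - batch_size + 1, batch_size):
            b = idx[start:start + batch_size]
            # Both inputs are indexed with the same rows and go in a list,
            # in the same order as Model(inputs=[...])
            yield [x1[b], x2[b]], y[b]

# Small stand-in data
x1 = np.random.rand(20, 6, 6, 2).astype('float32')
x2 = np.random.rand(20, 5, 4).astype('float32')
y = np.eye(2)[np.random.randint(0, 2, size=20)].astype('float32')  # one-hot, 2 classes

in1 = Input(shape=(6, 6, 2))
in2 = Input(shape=(5, 4))
merged = concatenate([Flatten()(in1), Flatten()(in2)])
output = Dense(2, activation='softmax')(merged)
model = Model(inputs=[in1, in2], outputs=output)
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(two_input_generator(x1, x2, y, batch_size=5),
                    steps_per_epoch=4, epochs=2, verbose=0)
```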

I tried the given section (5. Multiple Input and Output Models).

Please help me fit the data. I got the error:

AttributeError: 'NoneType' object has no attribute 'shape'

My input:

Nice work.

Perhaps try some of the suggestions here:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

I'm new to machine learning. After fitting the model using the functional API, I got the training accuracy and loss, but I don't know how to find the test accuracy using the Keras functional API.

You can evaluate the model on a test dataset by calling model.evaluate().
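A minimal sketch, assuming a binary classifier compiled with `metrics=['accuracy']` and random stand-in data for the train/test split. `evaluate()` works the same way for functional and Sequential models; with one metric it returns the loss followed by the accuracy.

```python
import numpy as np
from keras.layers import Input, Dense
from keras.models import Model

inputs = Input(shape=(4,))
hidden = Dense(8, activation='relu')(inputs)
output = Dense(1, activation='sigmoid')(hidden)
model = Model(inputs=inputs, outputs=output)
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Hypothetical train/test split of random stand-in data
X_train, y_train = np.random.rand(80, 4), np.random.randint(0, 2, size=(80, 1))
X_test, y_test = np.random.rand(20, 4), np.random.randint(0, 2, size=(20, 1))
model.fit(X_train, y_train, epochs=2, verbose=0)

# Returns [loss, accuracy] computed on the held-out test data
test_loss, test_acc = model.evaluate(X_test, y_test, verbose=0)
print('Test accuracy: %.3f' % test_acc)
```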

I want to make a CNN + ConvLSTM model,

but a dimension error occurs at the last line. How can I fix the shape?

: Input 0 is incompatible with layer conv_lst_m2d_27: expected ndim=5, found ndim=4

input = Input(shape=(30,30,3), dtype='float32')

conv1 = Convolution2D(kernel_size=1, filters=32, strides=(1,1), activation="selu")(input)

conv2 = Convolution2D(kernel_size=2, filters=64, strides=(2,2), activation="selu")(conv1)

conv3 = Convolution2D(kernel_size=2, filters=128, strides=(2,2), activation="selu")(conv2)

conv4 = Convolution2D(kernel_size=2, filters=256, strides=(2,2), activation='selu')(conv3)

ConvLSTM_1 = ConvLSTM2D(32, 2, strides=(1,1) )(conv1)

Perhaps change the input shape to match the expectations of the model or change the model to meet the expectations of the data shape?
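For context: ConvLSTM2D expects 5D input (samples, timesteps, rows, cols, channels), while a Conv2D stack on single 30x30x3 images produces 4D tensors. One way to combine them, sketched under the assumption that each sample is a short sequence of frames, is to add a time axis to the input and wrap the Conv2D layers in TimeDistributed so that they run on every frame. The frame count, filter counts, and output head are illustrative.

```python
import numpy as np
from keras.layers import Input, Conv2D, ConvLSTM2D, TimeDistributed, Flatten, Dense
from keras.models import Model

n_frames = 4  # assumed sequence length per sample
inputs = Input(shape=(n_frames, 30, 30, 3))
# TimeDistributed applies the same Conv2D to every frame and keeps the time axis
x = TimeDistributed(Conv2D(32, kernel_size=1, activation='selu'))(inputs)                 # (None, 4, 30, 30, 32)
x = TimeDistributed(Conv2D(64, kernel_size=2, strides=(2, 2), activation='selu'))(x)      # (None, 4, 15, 15, 64)
# The input to ConvLSTM2D is 5D, as it requires
lstm_out = ConvLSTM2D(32, kernel_size=2)(x)                                               # (None, 14, 14, 32)
x = Flatten()(lstm_out)
outputs = Dense(1, activation='sigmoid')(x)
model = Model(inputs=inputs, outputs=outputs)

pred = model.predict(np.random.rand(2, n_frames, 30, 30, 3), verbose=0)
```

If each sample really is a single image with no time dimension, a recurrent layer such as ConvLSTM2D has no sequence to run over, and a plain CNN may be the better fit.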

Thank you so much for your great article.

How should I set up my model with the functional API if my problem is like this:

First, classify whether the chemicals are toxic or not; then, if they are toxic, predict their toxicity scores. I can create two models separately, but some papers say that combining them acts as a good regularizer. I think it is a multi-input and multi-output problem. I have a dataset with the same input shape, and the combined output will be y1 = 0 or 1, and y2 = a numerical score if y1 = 1.

I don't know where and how to put an if statement in the combined model.

Any advice is highly appreciated.

Perhaps start here:

https://machinelearningmastery.com/start-here/#deeplearning

Maybe I didn’t explain my problem clearly.

I can build models for classification and regression separately using Keras without any problems. My problem is how to combine these two into one multi-input, multi-output model with “an IF STATEMENT”. From the link you provided, I couldn’t find the solutions. Could you please make it clear? Many thanks.

What would be the inputs and outputs exactly?

It is like this:

ex   x1    x2    x3    x4    y1   y2
1    0.1   0.5   0.7   0.4   1    0.3
2    0.5   0.2   0.4   0.1   0    -
3    0.7   0.6   0.3   0.2   0    -
4    0.12  0.33  0.05  0.77  1    0.55
..   ..    ..    ..    ..    ..   ..

y2 has a value only when y1 = 1.

Above is not a real dataset.

There may be many ways to approach this problem.

Perhaps you could set up the model to always predict something, and only pay attention to y2 when y1 has a specific value?

First of all, thank you so much for your fast response.

I still don't get it. You said there are many ways to approach it, but I don't know any of them. There are two datasets, (X1, y1) and (X2, y2), so I think it is a multi-input and multi-output problem. Should the two datasets have the same number of samples?

Yes.
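Following the suggestion above, here is one possible sketch, not the only approach. Since both targets come from the same features, a single input with two output heads may be enough. Per-output `sample_weight` arrays give the score head a weight of 0 on non-toxic rows, so the missing y2 values (filled here with a placeholder 0.0) do not affect training, and the "if statement" moves to prediction time. The rows are the illustrative ones from the table above; the layer sizes and the 0.5 threshold are assumptions.

```python
import numpy as np
from keras.layers import Input, Dense
from keras.models import Model

# Illustrative rows: features x1..x4, y1 (toxic or not), y2 (score, only if toxic)
X = np.array([[0.1, 0.5, 0.7, 0.4],
              [0.5, 0.2, 0.4, 0.1],
              [0.7, 0.6, 0.3, 0.2],
              [0.12, 0.33, 0.05, 0.77]], dtype='float32')
y1 = np.array([[1], [0], [0], [1]], dtype='float32')
# Missing scores are filled with a placeholder; their weight below is 0
y2 = np.array([[0.3], [0.0], [0.0], [0.55]], dtype='float32')

inputs = Input(shape=(4,))
shared = Dense(16, activation='relu')(inputs)
toxic = Dense(1, activation='sigmoid', name='toxic')(shared)
score = Dense(1, name='score')(shared)
model = Model(inputs=inputs, outputs=[toxic, score])
model.compile(optimizer='adam', loss=['binary_crossentropy', 'mse'])

# Every row counts for the classifier; only toxic rows (y1 == 1) count for the score
w_toxic = np.ones(len(X), dtype='float32')
w_score = y1[:, 0].copy()
model.fit(X, [y1, y2], sample_weight=[w_toxic, w_score], epochs=5, verbose=0)

# The "if statement" at prediction time: report a score only when predicted toxic
p_toxic, p_score = model.predict(X, verbose=0)
results = [float(s) if p >= 0.5 else None for p, s in zip(p_toxic[:, 0], p_score[:, 0])]
print(results)
```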

Thank you, Jason, for yet another awesome tutorial! You are a very talented teacher!

Thanks, I’m glad it helped.

Hi Jason, I always follow your blog and book (Linear Algebra for ML), and they are extremely helpful. Do you have a post on an LSTM layer followed by a 2D CNN/MaxPool? If you already have one, please provide a link to it.

Actually, I have a problem with dimensions after following the directions given in this post.
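One common source of dimension errors here is that Conv2D expects 4D input (samples, rows, cols, channels), while an LSTM outputs 2D or 3D tensors. A minimal sketch of one option, assuming `return_sequences=True` and treating the (timesteps, units) output matrix as a one-channel image via `Reshape`. All sizes are illustrative, and whether this framing makes sense depends on the data.

```python
import numpy as np
from keras.layers import Input, LSTM, Reshape, Conv2D, MaxPooling2D, Flatten, Dense
from keras.models import Model

n_timesteps, n_features, n_units = 20, 3, 16
inputs = Input(shape=(n_timesteps, n_features))
# return_sequences=True keeps one output vector per timestep: (None, 20, 16)
seq = LSTM(n_units, return_sequences=True)(inputs)
# Add a channel axis so the sequence looks like a one-channel image: (None, 20, 16, 1)
img = Reshape((n_timesteps, n_units, 1))(seq)
x = Conv2D(8, kernel_size=3, activation='relu')(img)   # (None, 18, 14, 8)
x = MaxPooling2D(pool_size=(2, 2))(x)                  # (None, 9, 7, 8)
x = Flatten()(x)
outputs = Dense(1)(x)
model = Model(inputs=inputs, outputs=outputs)

pred = model.predict(np.random.rand(2, n_timesteps, n_features), verbose=0)
```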