Time series data must be transformed into a structure of samples with input and output components before it can be used to fit a supervised learning model.

This can be challenging if you have to perform this transformation manually. The Keras deep learning library provides the TimeseriesGenerator to automatically transform both univariate and multivariate time series data into samples, ready to train deep learning models.

In this tutorial, you will discover how to use the Keras TimeseriesGenerator for preparing time series data for modeling with deep learning methods.

After completing this tutorial, you will know:

- How to define a TimeseriesGenerator and use it to fit deep learning models.

- How to prepare a generator for univariate time series and fit MLP and LSTM models.

- How to prepare a generator for multivariate time series and fit an LSTM model.


Let’s get started.

How to Use the TimeseriesGenerator for Time Series Forecasting in Keras

Photo by Chris Fithall, some rights reserved.

Tutorial Overview

This tutorial is divided into six parts; they are:

- Problem with Time Series for Supervised Learning

- How to Use the TimeseriesGenerator

- Univariate Time Series Example

- Multivariate Time Series Example

- Multivariate Inputs and Dependent Series Example

- Multi-step Forecasts Example

Note: This tutorial assumes that you are using Keras v2.2.4 or higher.

Problem with Time Series for Supervised Learning

Time series data requires preparation before it can be used to train a supervised learning model, such as a deep learning model.

For example, a univariate time series is represented as a vector of observations:

```
[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

A supervised learning algorithm requires that data is provided as a collection of samples, where each sample has an input component (X) and an output component (y).

```
X,               y
example input,   example output
example input,   example output
example input,   example output
...
```

The model will learn how to map inputs to outputs from the provided examples.

```
y = f(X)
```

A time series must be transformed into samples with input and output components. The transform both informs what the model will learn and how you intend to use the model in the future when making predictions, e.g. what is required to make a prediction (X) and what prediction is made (y).

For a univariate time series where we are interested in one-step predictions, the observations at prior time steps, so-called lag observations, are used as input, and the output is the observation at the current time step.

For example, the above 10-step univariate series can be expressed as a supervised learning problem with three time steps for input and one step as output, as follows:

```
X,          y
[1, 2, 3],  [4]
[2, 3, 4],  [5]
[3, 4, 5],  [6]
...
```
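For comparison, the framing above can be produced by hand with a few lines of NumPy. This is a minimal sketch that assumes a one-dimensional series and a one-step output; `split_sequence` is a hypothetical helper written for this example, not part of Keras.

```python
import numpy as np


def split_sequence(series, n_steps):
    """Frame a 1D series as (lag window, next value) pairs.

    Returns X with shape [samples, n_steps] and y with shape [samples],
    where y[i] is the observation that immediately follows window X[i].
    """
    series = np.asarray(series)
    X, y = [], []
    for end in range(n_steps, len(series)):
        X.append(series[end - n_steps:end])  # the n_steps lag observations
        y.append(series[end])                # the observation to predict
    return np.array(X), np.array(y)


X, y = split_sequence([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], n_steps=3)
for inputs, output in zip(X, y):
    print(inputs.tolist(), output)
```

With n_steps=3 this yields seven samples, from [1, 2, 3] => 4 up to [7, 8, 9] => 10.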

You can write code to perform this transform yourself; for example, see the post on converting a time series to a supervised learning problem listed in the Further Reading section.

Alternatively, when you are interested in training neural network models with Keras, you can use the TimeseriesGenerator class.


How to Use the TimeseriesGenerator

Keras provides the TimeseriesGenerator that can be used to automatically transform a univariate or multivariate time series dataset into a supervised learning problem.

There are two parts to using the TimeseriesGenerator: defining it and using it to train models.

Defining a TimeseriesGenerator

You can create an instance of the class, specifying the input and output series of your time series problem. The instance is a Keras Sequence that can then be used to iterate across the input and output samples of the series.

In most time series prediction problems, the input and output series will be the same series.

For example:

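```python
from keras.preprocessing.sequence import TimeseriesGenerator
# load data
inputs = ...
outputs = ...
# define generator
generator = TimeseriesGenerator(inputs, outputs, ...)
# iterate over the generator, one batch at a time
for i in range(len(generator)):
    x, y = generator[i]
    ...
```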

Technically, the class is not a generator: it is a Sequence rather than a Python generator, so you cannot call the next() function on it. Instead, you index it (generator[i]) to retrieve each batch.
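To illustrate the difference, here is a minimal sketch of an object that follows the same indexing protocol as a Keras Sequence. `WindowSequence` is a hypothetical class written for this example; it is not the Keras implementation and ignores options such as stride, sampling rate and shuffling. It only shows why indexing works while next() raises a TypeError.

```python
import numpy as np


class WindowSequence:
    """Hypothetical, simplified stand-in for a Keras Sequence.

    It supports len() and indexing by batch number, but defines no
    __next__ method, so it is not an iterator.
    """

    def __init__(self, data, targets, length, batch_size=1):
        self.data = np.asarray(data)
        self.targets = np.asarray(targets)
        self.length = length
        self.batch_size = batch_size
        # Target rows that have `length` complete lag observations before them
        self.rows = np.arange(length, len(self.data))

    def __len__(self):
        # Number of batches, counting a final partial batch
        return -(-len(self.rows) // self.batch_size)

    def __getitem__(self, index):
        start = index * self.batch_size
        batch_rows = self.rows[start:start + self.batch_size]
        x = np.array([self.data[r - self.length:r] for r in batch_rows])
        y = np.array([self.targets[r] for r in batch_rows])
        return x, y


series = np.arange(1, 11)
seq = WindowSequence(series, series, length=2)
x, y = seq[0]                 # indexing returns the first batch
print(len(seq), x.tolist(), y.tolist())

next_failed = False
try:
    next(seq)                 # a Sequence is not an iterator
except TypeError as err:
    next_failed = True
    print("TypeError:", err)
```

The same TypeError ("object is not an iterator") is what older Keras versions report when they try to call next() on a TimeseriesGenerator.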

In addition to specifying the input and output aspects of your time series problem, there are some additional parameters that you should configure; for example:

- length: The number of lag observations to use in the input portion of each sample (e.g. 3).

- batch_size: The number of samples to return on each iteration (e.g. 32).

You must choose the length argument based on your framing of the problem; that is, the number of lag observations to use as input.

You must also set the batch size, which becomes the batch size used when training your model. If your dataset contains fewer samples than your intended batch size, you can set the batch size in the generator (and hence for your model) to the total number of samples. You can find this number by creating the generator with a batch size of 1 and calculating its length, because len() returns the number of batches, which equals the number of samples only when the batch size is 1; for example:

```python
print(len(generator))
```
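The value printed by len() is a batch count. As a rough guide to how it relates to the size of the data, the arithmetic below is a sketch of how we understand the length to be computed with the default start_index, end_index and sampling_rate. `num_batches` is a hypothetical helper, so confirm the result with len() in your own Keras version.

```python
def num_batches(n_rows, length, batch_size, stride=1):
    """Hypothetical helper: batch count for a TimeseriesGenerator-style object.

    Assumes start_index=0, end_index=None and sampling_rate=1, so the target
    rows run from `length` up to n_rows - 1 inclusive.
    """
    start_index = length
    end_index = n_rows - 1
    rows_per_batch = batch_size * stride
    # Equivalent to ceil((end_index - start_index + 1) / rows_per_batch)
    return (end_index - start_index + rows_per_batch) // rows_per_batch


print(num_batches(10, length=2, batch_size=1))  # one sample per batch
print(num_batches(10, length=2, batch_size=8))  # all eight samples in one batch
print(num_batches(10, length=2, batch_size=3))  # batches of 3, 3 and 2 samples
```

Because each batch holds at most batch_size samples, passing len(generator) as steps_per_epoch covers every sample once per epoch, whatever the batch size.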

There are also other arguments, such as start_index and end_index (offsets into your data), sampling_rate, stride, and reverse. You are less likely to need these features, but you can see the full API for more details.
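The effect of sampling_rate and stride is easiest to see on a toy series. The function below is a hypothetical NumPy re-implementation of the windowing, written to mirror our reading of the Keras behavior with batching omitted; treat it as an illustration, not the library code.

```python
import numpy as np


def windows(data, targets, length, sampling_rate=1, stride=1):
    """Hypothetical re-implementation of the window indexing.

    Each target row `row` is paired with data[row - length:row:sampling_rate],
    and successive target rows are `stride` steps apart.
    """
    data, targets = np.asarray(data), np.asarray(targets)
    rows = np.arange(length, len(data), stride)
    X = np.array([data[row - length:row:sampling_rate] for row in rows])
    y = np.array([targets[row] for row in rows])
    return X, y


series = np.arange(1, 11)
X, y = windows(series, series, length=4, sampling_rate=2, stride=2)
for inputs, output in zip(X, y):
    print(inputs.tolist(), "=>", output)
```

Here sampling_rate=2 keeps every second value inside each four-step window, and stride=2 moves the window forward two steps at a time, giving [1, 3] => 5, [3, 5] => 7 and [5, 7] => 9.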

The samples are not shuffled by default. This is useful for recurrent neural networks such as stateful LSTMs, which carry their internal state over from one batch to the next.

Other neural networks, such as CNNs and MLPs, can benefit from shuffling the samples during training. Shuffling can be enabled by setting the shuffle argument to True, in which case the samples returned in each batch are drawn at random rather than in order.

At the time of writing, the TimeseriesGenerator is limited to one-step outputs: it will not construct multi-step targets for you, although it will use multi-step targets that you prepare yourself.

Training a Model with a TimeseriesGenerator

Once a TimeseriesGenerator instance has been defined, it can be used to train a neural network model.

A model can be trained using the TimeseriesGenerator as a data generator. This can be achieved by fitting the defined model using the fit_generator() function.

This function takes the generator as an argument. It also takes a steps_per_epoch argument that defines the number of batches (steps) to draw from the generator in each epoch. Setting it to the length of the TimeseriesGenerator instance uses all samples in the generator in each epoch.

For example:

```python
# define generator
generator = TimeseriesGenerator(...)
# define model
model = ...
# fit model
model.fit_generator(generator, steps_per_epoch=len(generator), ...)
```

Similarly, the generator can be used to evaluate a fit model by calling the evaluate_generator() function, and to make predictions on new data with a fit model by calling the predict_generator() function.

A model fit with the data generator does not have to use the generator versions of the evaluate and predict functions; you only need them if you want the data generator to prepare your data for the model.

Univariate Time Series Example

We can make the TimeseriesGenerator concrete with a worked example on a small contrived univariate time series dataset.

First, let’s define our dataset.

```python
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
```

We will choose to frame the problem where the last two lag observations will be used to predict the next value in the sequence. For example:

```
X,      y
[1, 2]  3
```

For now, we will use a batch size of 1, so that we can explore the data in the generator.

```python
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=1)
```

Next, we can see how many samples will be prepared by the data generator for this time series.

```python
# number of samples
print('Samples: %d' % len(generator))
```

Finally, we can print the input and output components of each sample, to confirm that the data was prepared as we expected.

```python
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

The complete example is listed below.

```python
# univariate one step problem
from numpy import array
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=1)
# number of samples
print('Samples: %d' % len(generator))
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Running the example first prints the total number of samples in the generator, which is eight.

We can then see that each input array has the shape [1, 2] and each output has the shape [1,].

The observations are prepared as we expected, with two lag observations that will be used as input and the subsequent value in the sequence as the output.

```
Samples: 8
[[1. 2.]] => [3.]
[[2. 3.]] => [4.]
[[3. 4.]] => [5.]
[[4. 5.]] => [6.]
[[5. 6.]] => [7.]
[[6. 7.]] => [8.]
[[7. 8.]] => [9.]
[[8. 9.]] => [10.]
```

Now we can fit a model on this data and learn to map the input sequence to the output sequence.

We will start with a simple Multilayer Perceptron, or MLP, model.

The generator will be defined so that all samples will be used in each batch, given the small number of samples.

```python
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=8)
```

We can define a simple model with one hidden layer with 100 nodes and an output layer that will make the prediction.

```python
# define model
model = Sequential()
model.add(Dense(100, activation='relu', input_dim=n_input))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mse')
```

We can then fit the model with the generator using the fit_generator() function. We only have one batch worth of data in the generator so we’ll set the steps_per_epoch to 1. The model will be fit for 200 epochs.

```python
# fit model
model.fit_generator(generator, steps_per_epoch=1, epochs=200, verbose=0)
```

Once fit, we will make an out of sample prediction.

Given the inputs [9, 10], we will make a prediction and expect the model to predict [11], or close to it. The model is not tuned; this is just an example of how to use the generator.

```python
# make a one step prediction out of sample
x_input = array([9, 10]).reshape((1, n_input))
yhat = model.predict(x_input, verbose=0)
```

The complete example is listed below.

```python
# univariate one step problem with mlp
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=8)
# define model
model = Sequential()
model.add(Dense(100, activation='relu', input_dim=n_input))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit_generator(generator, steps_per_epoch=1, epochs=200, verbose=0)
# make a one step prediction out of sample
x_input = array([9, 10]).reshape((1, n_input))
yhat = model.predict(x_input, verbose=0)
print(yhat)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example prepares the generator, fits the model, and makes the out of sample prediction, correctly predicting a value close to 11.

```
[[11.510406]]
```

We can also use the generator to fit a recurrent neural network, such as a Long Short-Term Memory network, or LSTM.

The LSTM expects input data to have the shape [samples, timesteps, features], whereas the generator described so far provides the lag observations as features, i.e. with the shape [samples, features].

We can reshape the univariate time series prior to preparing the generator from [10, ] to [10, 1] for 10 time steps and 1 feature; for example:

```python
# reshape to [10, 1]
n_features = 1
series = series.reshape((len(series), n_features))
```

The TimeseriesGenerator will then split the series into samples with the shape [batch, n_input, 1] or [8, 2, 1] for all eight samples in the generator and the two lag observations used as time steps.
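The shape change can be checked without Keras by building the same windows directly in NumPy. This sketch assumes the generator's default settings (a stride and sampling rate of 1); the window construction is our own, not the library's code.

```python
import numpy as np

series = np.arange(1, 11)                           # shape (10,)
n_features = 1
series = series.reshape((len(series), n_features))  # shape (10, 1)

n_input = 2
rows = range(n_input, len(series))  # rows that have n_input lags before them
X = np.array([series[row - n_input:row] for row in rows])
y = np.array([series[row] for row in rows])
print(X.shape, y.shape)
print(X[0].tolist(), "=>", y[0].tolist())
```

The inputs come out as [8, 2, 1] (samples, time steps, features), which is the layout the LSTM's input_shape=(n_input, n_features) expects.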

The complete example is listed below.

```python
# univariate one step problem with lstm
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
# reshape to [10, 1]
n_features = 1
series = series.reshape((len(series), n_features))
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=8)
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit_generator(generator, steps_per_epoch=1, epochs=500, verbose=0)
# make a one step prediction out of sample
x_input = array([9, 10]).reshape((1, n_input, n_features))
yhat = model.predict(x_input, verbose=0)
print(yhat)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Again, running the example prepares the data, fits the model, and predicts the next out of sample value in the sequence.

```
[[11.092189]]
```

Multivariate Time Series Example

The TimeseriesGenerator also supports multivariate time series problems.

These are problems where you have multiple parallel series, with observations at the same time step in each series.

We can demonstrate this with an example.

First, we can contrive a dataset of two parallel series.

```python
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
```

It is a standard structure to have multivariate time series formatted such that each time series is a separate column and rows are the observations at each time step.

The series we have defined are vectors, but we can convert them into columns. We can reshape each series into an array with the shape [10, 1] for the 10 time steps and 1 feature.

```python
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
```

We can now horizontally stack the columns into a dataset by calling the hstack() NumPy function.

```python
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
```

We can now provide this dataset to the TimeseriesGenerator directly. We will use the prior two observations of each series as input and the next observation of each series as output.

```python
# define generator
n_input = 2
generator = TimeseriesGenerator(dataset, dataset, length=n_input, batch_size=1)
```

Each sample will then be a three-dimensional array of [1, 2, 2] for the 1 sample, 2 time steps, and 2 features or parallel series. The output will be a two-dimensional array of [1, 2] for the 1 sample and 2 features. The first sample will be:

```
X,                    y
[[10, 15], [20, 25]]  [[30, 35]]
```

The complete example is listed below.

```python
# multivariate one step problem
from numpy import array
from numpy import hstack
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
print(dataset)
# define generator
n_input = 2
generator = TimeseriesGenerator(dataset, dataset, length=n_input, batch_size=1)
# number of samples
print('Samples: %d' % len(generator))
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Running the example will first print the prepared dataset, followed by the total number of samples in the dataset.

Next, the input and output portion of each sample is printed, confirming our intended structure.

```
[[ 10  15]
 [ 20  25]
 [ 30  35]
 [ 40  45]
 [ 50  55]
 [ 60  65]
 [ 70  75]
 [ 80  85]
 [ 90  95]
 [100 105]]
Samples: 8
[[[10. 15.]
  [20. 25.]]] => [[30. 35.]]
[[[20. 25.]
  [30. 35.]]] => [[40. 45.]]
[[[30. 35.]
  [40. 45.]]] => [[50. 55.]]
[[[40. 45.]
  [50. 55.]]] => [[60. 65.]]
[[[50. 55.]
  [60. 65.]]] => [[70. 75.]]
[[[60. 65.]
  [70. 75.]]] => [[80. 85.]]
[[[70. 75.]
  [80. 85.]]] => [[90. 95.]]
[[[80. 85.]
  [90. 95.]]] => [[100. 105.]]
```

The three-dimensional structure of the samples means that the generator cannot be used directly for simple models like MLPs.

This could be achieved by first flattening the time series dataset to a one-dimensional vector before providing it to the TimeseriesGenerator, setting length to the number of steps to use as input multiplied by the number of columns in the series (n_steps * n_features), and setting stride to the number of columns so that each sample starts at the beginning of a time step.

A limitation of this approach is that the generator will only allow you to predict one variable. You are likely better off writing your own function to prepare multivariate time series for an MLP than using the TimeseriesGenerator.
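As an example of such a function, the sketch below flattens each window of parallel series into a single input vector for an MLP while keeping every series in the output. `split_multivariate_flat` is a hypothetical helper written for this example, not a Keras API.

```python
import numpy as np


def split_multivariate_flat(dataset, n_steps):
    """Hypothetical helper: windows of n_steps rows flattened for an MLP.

    X has shape [samples, n_steps * n_features]; y holds the next row of
    every column, so all parallel series can be predicted at once.
    """
    X, y = [], []
    for end in range(n_steps, len(dataset)):
        X.append(dataset[end - n_steps:end].flatten())  # row-major: t1 cols, t2 cols
        y.append(dataset[end])                          # next value of each series
    return np.array(X), np.array(y)


in_seq1 = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = np.array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
dataset = np.column_stack((in_seq1, in_seq2))  # shape (10, 2)

X, y = split_multivariate_flat(dataset, n_steps=2)
print(X.shape, y.shape)
print(X[0].tolist(), "=>", y[0].tolist())
```

An MLP fit on this data would use input_dim=n_steps * n_features (4 here) and an output layer with one node per series (2 here).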

The three-dimensional structure of the samples can be used directly by CNN and LSTM models. A complete example for multivariate time series forecasting with the TimeseriesGenerator is listed below.

```python
# multivariate one step problem with lstm
from numpy import array
from numpy import hstack
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
# define generator
n_features = dataset.shape[1]
n_input = 2
generator = TimeseriesGenerator(dataset, dataset, length=n_input, batch_size=8)
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))
model.add(Dense(2))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit_generator(generator, steps_per_epoch=1, epochs=500, verbose=0)
# make a one step prediction out of sample
x_input = array([[90, 95], [100, 105]]).reshape((1, n_input, n_features))
yhat = model.predict(x_input, verbose=0)
print(yhat)
```

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Running the example prepares the data, fits the model, and makes a prediction for the next value in each of the input time series, which we expect to be [110, 115].

```
[[111.03207 116.58153]]
```

Multivariate Inputs and Dependent Series Example

There are multivariate time series problems where there are one or more input series and a separate output series to be forecasted that is dependent upon the input series.

To make this concrete, we can contrive one example with two input time series and an output series that is the sum of the input series.

```python
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = array([25, 45, 65, 85, 105, 125, 145, 165, 185, 205])
```

The values in the output sequence are the sum of the values at the same time step in the input time series; for example:

```
10 + 15 = 25
```

This differs from the prior examples: given the inputs at a time step, we wish to predict the value in the target time series at the same time step, not at the next time step.

For example, we want samples like:

```
X,         y
[10, 15],  25
[20, 25],  45
[30, 35],  65
...
```

We don’t want samples like the following:

```
X,         y
[10, 15],  45
[20, 25],  65
[30, 35],  85
...
```

Nevertheless, the TimeseriesGenerator class assumes that we are predicting the next time step and will provide data as in the second case above.

For example:

```python
# multivariate one step problem
from numpy import array
from numpy import hstack
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = array([25, 45, 65, 85, 105, 125, 145, 165, 185, 205])
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
out_seq = out_seq.reshape((len(out_seq), 1))
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
# define generator
n_input = 1
generator = TimeseriesGenerator(dataset, out_seq, length=n_input, batch_size=1)
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Running the example prints the input and output portions of the samples with the output values for the next time step rather than the current time step as we may desire for this type of problem.

```
[[[10. 15.]]] => [[45.]]
[[[20. 25.]]] => [[65.]]
[[[30. 35.]]] => [[85.]]
[[[40. 45.]]] => [[105.]]
[[[50. 55.]]] => [[125.]]
[[[60. 65.]]] => [[145.]]
[[[70. 75.]]] => [[165.]]
[[[80. 85.]]] => [[185.]]
[[[90. 95.]]] => [[205.]]
```

We can therefore modify the target series (out_seq): insert an additional value at the beginning to push all observations down by one time step, then drop the last value so that the target keeps the same length as the input data (recent versions of Keras raise a ValueError if the data and targets differ in length).

This artificial shift will allow the preferred framing of the problem.

```python
# shift the target sample by one step: prepend a 0 and drop the last
# value so the target keeps the same length as the input data
out_seq = insert(out_seq, 0, 0)
out_seq = delete(out_seq, -1)
```

The complete example with this shift is provided below.

```python
# multivariate one step problem
from numpy import array
from numpy import hstack
from numpy import insert
from numpy import delete
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = array([25, 45, 65, 85, 105, 125, 145, 165, 185, 205])
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
out_seq = out_seq.reshape((len(out_seq), 1))
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
# shift the target sample by one step (insert() without an axis also
# flattens out_seq to a 1D vector), then drop the last value so the
# data and target lengths match
out_seq = insert(out_seq, 0, 0)
out_seq = delete(out_seq, -1)
# define generator
n_input = 1
generator = TimeseriesGenerator(dataset, out_seq, length=n_input, batch_size=1)
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Running the example shows the preferred framing of the problem.

This approach will work regardless of the length of the input sample.

```
[[[10. 15.]]] => [25.]
[[[20. 25.]]] => [45.]
[[[30. 35.]]] => [65.]
[[[40. 45.]]] => [85.]
[[[50. 55.]]] => [105.]
[[[60. 65.]]] => [125.]
[[[70. 75.]]] => [145.]
[[[80. 85.]]] => [165.]
[[[90. 95.]]] => [185.]
```
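As a cross-check, the same-time-step pairs can also be built directly with NumPy, without shifting the target. This is a sketch assuming one input window per time step, with the window ending at the time step of its target; it is our own construction rather than anything in Keras. Unlike the generator output above, which ends at [[90, 95]] => 185, it also produces a sample for the final time step.

```python
import numpy as np

in_seq1 = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = np.array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = in_seq1 + in_seq2                    # dependent series (sum)
dataset = np.column_stack((in_seq1, in_seq2))  # shape (10, 2)

n_input = 1
# Pair the window that ends at time t with the target at the same time t
X = np.array([dataset[t - n_input + 1:t + 1]
              for t in range(n_input - 1, len(dataset))])
y = out_seq[n_input - 1:]
for inputs, output in zip(X, y):
    print(inputs.tolist(), "=>", output)
```

Changing n_input to a larger value keeps the same alignment: each window still ends at the time step whose sum it is paired with.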

Multi-step Forecasts Example

A benefit of neural network models over many other types of classical and machine learning models is that they can make multi-step forecasts directly.

That is, the model can learn to map an input pattern of one or more features to an output pattern of more than one value. This can be used in time series forecasting to directly forecast multiple future time steps.

This can be achieved either by directly outputting a vector from the model, by specifying the desired number of outputs as the number of nodes in the output layer, or it can be achieved by specialized sequence prediction models such as an encoder-decoder model.

A limitation of the TimeseriesGenerator is that it does not directly support multi-step outputs. Specifically, it will not create the multiple steps that may be required in the target sequence.

Nevertheless, if you prepare your target sequence to have multiple steps, it will honor and use them as the output portion of each sample. This means the onus is on you to prepare the expected output for each time step.

We can demonstrate this with a simple univariate time series with two time steps in the output sequence.

Note that the target sequence must have the same number of rows as the input sequence. In this case, we must either know values beyond those in the input sequence, or trim the input sequence to the length of the target sequence.

```python
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
target = array([[1,2],[2,3],[3,4],[4,5],[5,6],[6,7],[7,8],[8,9],[9,10],[10,11]])
```
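Rather than typing the target array by hand, it can be built from a slightly longer history. This sketch assumes the value after the last observation (11) is already known, and the names `history` and `n_out` are our own.

```python
import numpy as np

# Assumption: the value after the last observation (11) is already known
history = np.arange(1, 12)  # observations 1 .. 11
series = history[:10]       # the 10 observations used as input
n_out = 2                   # number of output steps per sample
# Row i holds the n_out values starting at time step i, so the generator
# pairs it with the window of lags that ends just before step i
target = np.array([history[i:i + n_out] for i in range(len(series))])
print(target.tolist())
```

The result has one row per input observation, which satisfies the generator's requirement that data and targets have the same length.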

The complete example is listed below.

```python
# univariate multi-step problem
from numpy import array
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
target = array([[1,2],[2,3],[3,4],[4,5],[5,6],[6,7],[7,8],[8,9],[9,10],[10,11]])
# define generator
n_input = 2
generator = TimeseriesGenerator(series, target, length=n_input, batch_size=1)
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Running the example prints the input and output portions of the samples showing the two lag observations as input and the two steps as output in the multi-step forecasting problem.

```
[[1. 2.]] => [[3. 4.]]
[[2. 3.]] => [[4. 5.]]
[[3. 4.]] => [[5. 6.]]
[[4. 5.]] => [[6. 7.]]
[[5. 6.]] => [[7. 8.]]
[[6. 7.]] => [[8. 9.]]
[[7. 8.]] => [[ 9. 10.]]
[[8. 9.]] => [[10. 11.]]
```
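If no values beyond the end of the series are known, the trimming option mentioned above can be handled by a short NumPy function that builds the multi-step samples directly. `split_multistep` is a hypothetical helper, not a Keras API. With the same series it yields seven samples rather than eight, because the last sample above ([8, 9] => [10, 11]) needs the value 11, which is not in the series.

```python
import numpy as np


def split_multistep(series, n_in, n_out):
    """Hypothetical helper: n_in lag observations -> next n_out observations.

    Windows whose outputs would run past the end of the series are dropped,
    so no future values need to be known in advance.
    """
    series = np.asarray(series)
    X, y = [], []
    for start in range(len(series) - n_in - n_out + 1):
        X.append(series[start:start + n_in])
        y.append(series[start + n_in:start + n_in + n_out])
    return np.array(X), np.array(y)


X, y = split_multistep(range(1, 11), n_in=2, n_out=2)
for inputs, outputs in zip(X, y):
    print(inputs.tolist(), "=>", outputs.tolist())
```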

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- How to Convert a Time Series to a Supervised Learning Problem in Python

- TimeseriesGenerator Keras API

- Sequence Keras API

- Sequential Model Keras API

- Python Generator

Summary

In this tutorial, you discovered how to use the Keras TimeseriesGenerator for preparing time series data for modeling with deep learning methods.

Specifically, you learned:

- How to define a TimeseriesGenerator and use it to fit deep learning models.

- How to prepare a generator for univariate time series and fit MLP and LSTM models.

- How to prepare a generator for multivariate time series and fit an LSTM model.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Hi Jason

Great tutorials.

When I run your code for the univariate one step problem with LSTM, there is an error when running model.fit:

```
Traceback (most recent call last):
  File "", line 2, in
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\keras\legacy\interfaces.py", line 91, in wrapper
    return func(*args, **kwargs)
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\keras\engine\training.py", line 1415, in fit_generator
    initial_epoch=initial_epoch)
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\keras\engine\training_generator.py", line 177, in fit_generator
    generator_output = next(output_generator)
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\keras\utils\data_utils.py", line 793, in get
    six.reraise(value.__class__, value, value.__traceback__)
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\six.py", line 693, in reraise
    raise value
  File "C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python36_64\lib\site-packages\keras\utils\data_utils.py", line 658, in _data_generator_task
    generator_output = next(self._generator)
TypeError: 'TimeseriesGenerator' object is not an iterator
```

Something you know how to overcome?

Are you able to confirm that your Keras library is up to date?

E.g. v2.2.4 or higher?

Hi Jason

Thank you for your fast reply.

No, I was running v2.2.2 but have updated now to 2.2.4 and it works 🙂

Thank you

Glad to hear it. I have added a note to the tutorial.

Hi Jason,

Thanks for the tutorials, it helps me a lot.

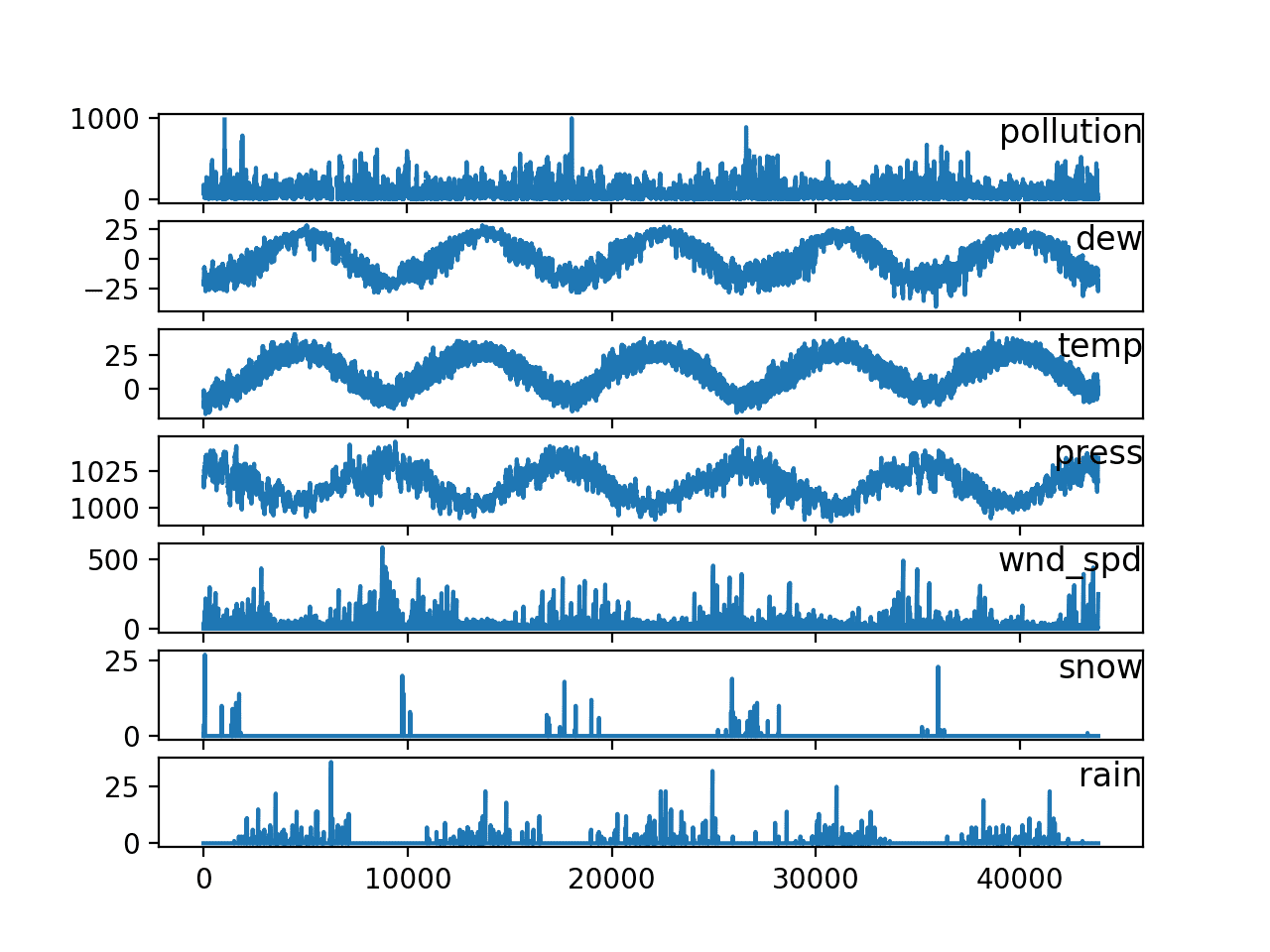

But I still have a huge problem when I deal with my dataset. I think it’s a multi-site multivariate time series forecasting dataset (the trajectory data of multiple vehicles over a period of time). I don’t know how to pre-process it before using the TimeseriesGenerator.

Could you give me some advice?

Thanks a lot.

Before pre-processing, perhaps start with a strong framing of the problem — it will guide you as to how to prepare the data.

Thanks for your advice. But what is a frame? Like a model? I don’t quite understand. Could you give a simple example or a link?

Thank you in advance.

A frame is the choice of the type of problem (classification/regression) and the choice of inputs and outputs. More here:

https://machinelearningmastery.com/how-to-define-your-machine-learning-problem/

I have read another article titled ‘A Standard Multivariate, Multi-Step, and Multi-Site Time Series Forecasting Problem’ on your blog, and I now have some understanding of the framing problem.

My project is quite like the ‘Air Quality Prediction’ project. Do you think it’s possible to use LSTM for the global forecasting problem?

Perhaps try it and see.

Great tutorial Jason. I was wondering how you could include other independent variables to forecast the output. In my case I want to forecast the air pollution for a set of measurement stations. Besides the historical pollution, I also have other variables like temperature and humidity. Do you know how to deal with that? Thanks!

I show how to use it for multivariate data with a dependent series, does that help?

Hey, thanks for the great tutorial.

I’m getting an error in the multivariate case. When I insert the 0 the input and output series’ aren’t the same length anymore:

ValueError: Data and targets have to be of same length. Data length is 10 while target length is 11

Hmmm, perhaps post to stackoverflow or Keras help:

https://machinelearningmastery.com/get-help-with-keras/

Hi, Jason and Evan.

The numpy.insert() function adds new value(s) to an existing array.

So, in your example, “out_seq = insert(out_seq, 0, 0)” makes out_seq one element longer.

I think this is what causes Evan’s error.

You need to do the following before creating the generator:

```python
from numpy import delete

out_seq = delete(out_seq, -1)
```

Thanks for sharing.

Thanks for your great tutorial. I copied your code and ran it in PyCharm, and found that the result is different from yours: the number of samples is always one less than yours. Where yours is 8, mine is 7.

```python
# univariate one step problem
from numpy import array
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=1)
# number of samples
print('Samples: %d' % len(generator))
# print each sample
for i in range(len(generator)):
    x, y = generator[i]
    print('%s => %s' % (x, y))
```

Results:

```
Samples: 7
[[1. 2.]] => [3.]
[[2. 3.]] => [4.]
[[3. 4.]] => [5.]
[[4. 5.]] => [6.]
[[5. 6.]] => [7.]
[[6. 7.]] => [8.]
[[7. 8.]] => [9.]
```

Are you able to confirm that your libraries are up to date?

Yes, I checked my Keras version and updated it to the latest one; now the result is the same as yours. Thank youuuuuu!!

I’m glad to hear that.

Hi Jason,

thanks for your great tutorial!

I have a question now.

I want to generate sequences that start with a given seed and end with a given token, like a sentence that begins with “hello” and ends with “bye”.

Do you have any idea how to do that?

Thanks a lot.

Yes, if your training data has this then the model will learn it.

Hi Mr. Jason,

As Evan mentioned above, I have the same error with the same example:

ValueError: Data and targets have to be of same length. Data length is 10 while target length is 11

Confirm that your version of Keras is 2.2.4 or higher and TensorFlow is 1.12 or higher.

I get the same error message in the Multivariate Inputs and Dependent Series Example when the target is shifted:

# shift the target sample by one step
out_seq = insert(out_seq, 0, 0)

The target has length 11 and the data has length 10.

# confirm Keras version
import keras
print(keras.__version__)

2.2.4

What version of tensorflow are you using?

If you are shifting the target, you should also shift the data:

dataset = np.append(dataset, [[0] * np.size(dataset, axis=1)], axis=0)

out_seq = np.insert(out_seq, 0, 0)
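The two fixes suggested in this thread (dropping the last target after the shift, or padding the data with a zero row) can be compared without Keras. This minimal NumPy sketch assumes TimeseriesGenerator’s default pairing, where sample i uses data[i - length:i] as input and targets[i] as output (stride and sampling rate of 1). make_windows is a hypothetical helper and length=2 is an arbitrary choice.

```python
import numpy as np

def make_windows(data, targets, length):
    # Hypothetical helper mimicking TimeseriesGenerator's default pairing:
    # input data[i - length:i] with output targets[i], for i = length .. len(data) - 1
    assert len(data) == len(targets), "data and targets must have the same length"
    X = np.array([data[i - length:i] for i in range(length, len(data))])
    y = np.array([targets[i] for i in range(length, len(data))])
    return X, y

in_seq1 = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = np.array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = in_seq1 + in_seq2
dataset = np.column_stack((in_seq1, in_seq2))  # shape (10, 2)
length = 2

# Fix A: shift the target, then drop its last value (both lengths 10)
out_a = np.delete(np.insert(out_seq, 0, 0), -1)
X_a, y_a = make_windows(dataset, out_a, length)

# Fix B: shift the target and append a zero row to the data (both lengths 11);
# the padded row is never used as input, since data[i - length:i] excludes row i
data_b = np.append(dataset, [[0] * dataset.shape[1]], axis=0)
out_b = np.insert(out_seq, 0, 0)
X_b, y_b = make_windows(data_b, out_b, length)

print(X_a.shape, X_b.shape)  # Fix B keeps one more sample
```

In both cases each target is the dependent value at the last input step of its window; padding the data simply keeps the final window that deleting the last target throws away.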

How does “TimeseriesGenerator” differ from the “series_to_supervised” function that you have used in many other tutorials?

In many ways. What differences are you interested in exactly?

Hi Jason, thanks a lot for your blog, it’s really a huge help and a great resource!

Tamer above never replied, but I’m actually interested in the same question. I understand that time series reframed with your “series_to_supervised” function are fed to a model for fitting differently than with the TimeseriesGenerator. My question is: are they functionally identical?

From what I can see, “series_to_supervised” and the TimeseriesGenerator fulfil the same role, albeit in different ways. Am I correct in that understanding?

Thanks again

You’re welcome.

They can be configured to do the same thing I think. Internally they are different.
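To see how the two can be configured to do the same thing, here is a small NumPy comparison. shift_frame is a hypothetical, simplified stand-in for shift-based framing (one column per lag, rows without full history dropped), not the actual series_to_supervised code; window_frame mimics TimeseriesGenerator’s default sliding window.

```python
import numpy as np

def shift_frame(series, n_in):
    # Hypothetical shift-based framing: one column per lag (t-n_in .. t-1),
    # keeping only rows that have a full history
    n = len(series)
    cols = [series[lag:n - n_in + lag] for lag in range(n_in)]
    X = np.column_stack(cols)
    y = series[n_in:]
    return X, y

def window_frame(series, n_in):
    # Sliding windows: series[i - n_in:i] paired with series[i]
    X = np.array([series[i - n_in:i] for i in range(n_in, len(series))])
    y = np.array([series[i] for i in range(n_in, len(series))])
    return X, y

series = np.arange(1, 11)
X_shift, y_shift = shift_frame(series, 2)
X_win, y_win = window_frame(series, 2)
print(np.array_equal(X_shift, X_win), np.array_equal(y_shift, y_win))  # True True
```

One practical difference: shift-based framing materializes the whole supervised table up front, while the generator builds each batch from the raw array when it is requested.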

Thanks Jason!

You’re welcome.

Suppose a two-step-ahead forecast for a univariate series, using 3 lags:

series = array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
target = array([[1,2],[2,3],[3,4],[4,5],[5,6],[6,7],[7,8],[8,9],[9,10],[10,11]])
# define generator
n_input = 3
generator = TimeseriesGenerator(series, target, length=n_input, batch_size=1)
n_features=1
n_output=2
# define model
model_lstm2 = Sequential()
model_lstm2.add(LSTM(10, activation='relu', input_shape=(n_input, n_features)))
model_lstm2.add(Dense(n_output))
model_lstm2.compile(optimizer='sgd', loss='mse')
# fit model
history_lstm2=model_lstm2.fit_generator(generator, steps_per_epoch=1, epochs=50, verbose=1)
plt.plot(history_lstm2.history['loss'])

I get an error:

“Error when checking input: expected lstm_26_input to have 3 dimensions, but got array with shape (1, 3)”

I suppose that something is wrong with the generator, as I believe the LSTM input_shape is correct, but how should I change it for this case?

Thanks.

Yes, input to LSTMs must be 3D, e.g. some number of samples each with the same number of timesteps and features. Perhaps this will help:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input
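Applied to the example in the question: TimeseriesGenerator slices its data along the first axis, so a 1D series produces 2D input batches of shape (batch, n_input), which the LSTM rejects. Reshaping the series to (n_timesteps, 1) adds the features axis, so the generator call would presumably become TimeseriesGenerator(series.reshape((len(series), 1)), target, length=n_input, batch_size=1). The NumPy sketch below reproduces that slicing to show the shapes; windows is a hypothetical helper.

```python
import numpy as np

def windows(data, length):
    # hypothetical helper: stack data[i - length:i] for i = length .. len(data) - 1
    return np.array([data[i - length:i] for i in range(length, len(data))])

series = np.arange(1, 11, dtype=float)
target = np.column_stack((series, series + 1))  # [[1, 2], [2, 3], ..., [10, 11]] as in the question
n_input = 3

X_1d = windows(series, n_input)                   # (7, 3): 2D, rejected by an LSTM
X_3d = windows(series.reshape((-1, 1)), n_input)  # (7, 3, 1): samples, timesteps, features
y = target[n_input:]                              # (7, 2): matches Dense(n_output) with n_output=2
print(X_1d.shape, X_3d.shape, y.shape)
```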

Just wanted to say thank you. I was going crazy building X and y (multivariate X) until I ended up here… This article helped me keep my (somewhat) sanity!

Thanks, hang in there!

Hi Jason, excellent tutorial! It really answered a lot of questions about time series forecasting that have been bothering me over the last week.

Just one question please!

Can you please give a very simple real life use case of a Multivariate Inputs and Dependent Series problem? I am just trying to get a better picture in my mind of when I would need to apply this.

Thanks.

Yes, I have many examples, perhaps start here:

https://machinelearningmastery.com/start-here/#deep_learning_time_series

Hi Jason, Thanks again for posting this tutorial.

I am working on a project to forecast stock data. I have 13 features and 2.5 years of data to train my LSTM. I tried implementing a generator to forecast 5 days into the future, but I receive the error:

ValueError: Error when checking input: expected lstm_1_input to have shape (529, 13) but got array with shape (5, 13)

Would this be due to my input and output shapes? Right now I have them set to the same array:

generator = TimeseriesGenerator(stock_dataset, stock_dataset, length = n_lag, batch_size = 8)

In this case would using my array “stock_dataset” as the input (data) and output (target) make sense because the data output I am looking for is the same shape as the input data. Please if you can provide some guidance on this and let me know if this is the cause of my ValueError. Thank you so much in advance!

It suggests a mismatch between the shape of one sample provided by the generator and the expectation of the model.

You can change the generator to match the model or change the model to match the data.

More on the 3D shape expected by an LSTM here:

https://machinelearningmastery.com/faq/single-faq/what-is-the-difference-between-samples-timesteps-and-features-for-lstm-input

Hi Jason, thanks again for the links. I am a little confused because in the links you provide you said the input layer for an LSTM expects a 3D array as input but your example above you are only passing a 2D array from the generator:

model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))

Also in the keras documentation for TimeseriesGenerator it also mentions the input shape should be a 2D array.

Yes, we specify the shape of one sample, but we always provide many samples, hence the input is always 3D. Sorry for the confusion.

So during model definition, we specify only the shape of a single sample.
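To make that concrete: input_shape=(n_input, n_features) describes one sample, and Keras adds the batch dimension itself. The NumPy sketch below builds batches the way TimeseriesGenerator does by default (assumed here: stride 1, no shuffling, and a smaller final batch when the samples do not divide evenly) and inspects their shapes. The data and sizes are illustrative.

```python
import numpy as np

data = np.arange(20, dtype=float).reshape((10, 2))  # 10 time steps, 2 features
n_input, batch_size = 3, 4

rows = np.arange(n_input, len(data))  # target indices that have a full window
batches = [np.array([data[r - n_input:r] for r in rows[s:s + batch_size]])
           for s in range(0, len(rows), batch_size)]

# every batch is 3D: (batch, n_input, n_features)
print([b.shape for b in batches])
# input_shape only describes what follows the batch axis
sample_shape = batches[0].shape[1:]
print(sample_shape)  # (3, 2) == (n_input, n_features)
```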

Hi Jason, is there any way to pass two targets to the TimeseriesGenerator? I mean, I have one input to my model and two targets; it’s a classification problem with two outputs. Thanks.

There might be, but not as far as I know. Perhaps use a custom function:

https://machinelearningmastery.com/convert-time-series-supervised-learning-problem-python/
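One possible custom approach, sketched with NumPy: build the input windows once and return one target array per model output, which is the form a two-output Keras model accepts in fit (a list of target arrays). windows_two_targets is a hypothetical helper, and the data and labels are made up for illustration.

```python
import numpy as np

def windows_two_targets(data, y1, y2, length):
    # hypothetical helper: the same windows as TimeseriesGenerator's default pairing,
    # but with one target array per model output
    rows = range(length, len(data))
    X = np.array([data[i - length:i] for i in rows])
    return X, [np.asarray(y1)[length:], np.asarray(y2)[length:]]

data = np.arange(20, dtype=float).reshape((10, 2))  # 10 time steps, 2 features
y1 = np.arange(10) % 2                              # made-up labels for output 1
y2 = (np.arange(10) > 4).astype(int)                # made-up labels for output 2
X, (t1, t2) = windows_two_targets(data, y1, y2, length=3)
print(X.shape, t1.shape, t2.shape)  # (7, 3, 2) (7,) (7,)
```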

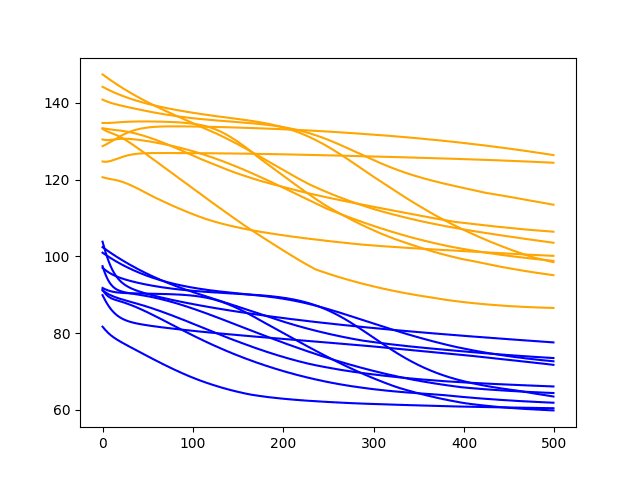

Hi Jason

I added a loop to your code with a minor revision. I would like to forecast t+1 day(s) ahead, stepping forward one step at a time. During validation, the forecast values go to +infinity or to zero, depending on the architecture. My full code is on GitHub Gist. Any suggestions?

x1=495 # end of train period
valid=pred[x1:x1+n_input] # initial of validation = end of train period
print(valid, n_input, n_features)
for n_phase in range(0, n_valid-5):
    x_input = valid[n_phase+0: n_phase+n_input].reshape((1, n_input, n_features))
    yhat = model.predict(x_input, verbose=0)
    valid = np.append(valid, yhat)
valid = valid[n_input:len(pred)-1]

Plot:

https://imgur.com/a/1RFeqEM

Full code:

https://gist.github.com/yusufmet/84a5c8f0c535132256bee08db43b3206

Nice work.

You misunderstood me. Why do the forecasts go to infinity or zero? How can I correct the LSTM architecture?

Perhaps you have a vanishing or exploding gradient?

Perhaps try scaling data prior to modeling?

Perhaps try using relu activation?

Perhaps try weight constraints?

Hi Jason,

Is there a post where you do data augmentation for time series prediction/classification in Keras? And is this sensible?

Not really, sorry. Maybe adding Gaussian noise:

https://machinelearningmastery.com/how-to-improve-deep-learning-model-robustness-by-adding-noise/
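A minimal sketch of the Gaussian-noise idea, assuming the data have already been scaled and that noise is added to training samples only. jitter is a hypothetical helper, and sigma=0.05 and the number of copies are arbitrary values you would need to tune.

```python
import numpy as np

def jitter(X, y, sigma=0.05, n_copies=2, seed=1):
    """Return the original samples plus n_copies noisy copies of them.
    Labels are repeated unchanged for every copy."""
    rng = np.random.default_rng(seed)
    noisy = [X + rng.normal(0.0, sigma, size=X.shape) for _ in range(n_copies)]
    X_aug = np.concatenate([X] + noisy, axis=0)
    y_aug = np.concatenate([y] * (n_copies + 1), axis=0)
    return X_aug, y_aug

X = np.linspace(0, 1, 30).reshape((5, 3, 2))  # 5 samples, 3 time steps, 2 features
y = np.arange(5)
X_aug, y_aug = jitter(X, y)
print(X_aug.shape, y_aug.shape)  # (15, 3, 2) (15,)
```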

Hi Jason,

I am currently working on a sales prediction project using TimeseriesGenerator.

Everything seems to be going well until the fitting part. It returns

AttributeError: 'TimeseriesGenerator' object has no attribute 'shape'

The code is like this:

n_input = 30
n_features = 1
generator = TimeseriesGenerator(X_train,y_train,length = n_input,batch_size=16)
model = keras.models.Sequential()
model.add(keras.layers.LSTM(100,activation = 'relu',return_sequences=True,input_shape (n_input,n_features)))
model.add(keras.layers.LSTM(50,activation = 'relu',return_sequences = True))
model.add(keras.layers.LSTM(25))
model.add(keras.layers.Dense(1))

When it reaches the fitting step, it shows this error.

Could you please tell me what the possible reason behind it might be?

Looks like you are missing an “=” in the first hidden layer.

Sorry Jason, that was an error while copying the code.

The “=” is in the code snippet.

Other than that, why do you think the TimeseriesGenerator has a problem with accepting shape as an attribute?

I got the same error, how can I solve it?

Try copying the code from the tutorial directly.

This will help:

https://machinelearningmastery.com/faq/single-faq/how-do-i-copy-code-from-a-tutorial

Thanks Jason for the helpful tutorials!

I’m not seeing a Keras function to normalize the generator’s output. Although I’d prefer to split the train/test sets with the generator, it seems one must first split, then normalize, then generate. Is that true?

Cheers

Yes. Get the min/max or mean/stdev from train then apply scaling to train and test.
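In code, that order might look like this minimal NumPy sketch of min-max scaling: split first, compute the statistics on the training split only, then apply the same transform to both splits before building the generators. The data and split point are illustrative; scikit-learn’s MinMaxScaler (fit on train, transform both) does the same job.

```python
import numpy as np

series = np.arange(1, 11, dtype=float).reshape((-1, 1))  # 10 time steps, 1 feature
split = 8
train, test = series[:split], series[split:]  # split first, without shuffling

lo, hi = train.min(axis=0), train.max(axis=0)  # statistics from train only
train_s = (train - lo) / (hi - lo)
test_s = (test - lo) / (hi - lo)               # may fall outside [0, 1]

def invert(x_scaled):
    # map scaled values (e.g. predictions) back to the original units
    return x_scaled * (hi - lo) + lo

print(train_s.min(), train_s.max(), test_s.ravel())
```

train_s and test_s would then be passed to separate TimeseriesGenerator instances.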

What about a trend or seasonal differencing with the generator?

Not that I’m aware of.
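Since the generator has no built-in differencing, one option is to difference the series before building the generator and invert the difference on the forecasts afterwards. A minimal NumPy sketch; difference and invert_one_step are hypothetical helpers, and the seasonal lag in the example is only illustrative.

```python
import numpy as np

def difference(series, lag=1):
    # value[t] - value[t - lag]; a lag equal to the season length gives a seasonal difference
    return series[lag:] - series[:-lag]

def invert_one_step(history, diff_forecast, lag=1):
    # undo the difference for a one-step forecast made just after the end of history
    return diff_forecast + history[-lag]

trend = np.array([1, 3, 6, 10, 15])
print(difference(trend))           # [2 3 4 5]
print(invert_one_step(trend, 6))   # 21

seasonal = np.array([10, 20, 30, 12, 22, 33])  # season length 3
print(difference(seasonal, lag=3))             # [2 2 3]
print(invert_one_step(seasonal, 3, lag=3))     # 15
```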

Hi,

My data has x_train.shape (1100, 3000) and y_train.shape (1100, 1).

When I use the generator, I get batches of shape (batch_size, 1, 3000), which is fine, but I want to reshape them to (batch_size, 3000, 1).

Is this possible, and how?

It’s been a long time, I don’t recall. You may have to experiment with the parameters to the generator.

Perhaps start with the example in the post and adapt it as a prototype for what you want to achieve.
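Two possible routes, sketched with NumPy under the assumption that each row of x_train is already one complete sequence of 3000 values: skip the generator and reshape x_train directly to (samples, 3000, 1), or swap the last two axes of each generator batch (which would need a small wrapper around the generator). The random data only stands in for x_train.

```python
import numpy as np

rng = np.random.default_rng(0)
x_train = rng.random((1100, 3000))  # stand-in for the real data

# Route 1: treat the 3000 values in each row as time steps with one feature
X = x_train.reshape((x_train.shape[0], x_train.shape[1], 1))  # (1100, 3000, 1)

# Route 2: fix up a batch shaped (batch_size, 1, 3000), as the generator yields it
batch = x_train[:32].reshape((32, 1, 3000))
swapped = np.transpose(batch, (0, 2, 1))  # (32, 3000, 1)
print(X.shape, swapped.shape)
```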

Completing the multivariate example to the point of model.fit yields an error that I have commented in-line “Error when checking target: expected dense_14 to have shape (2,) but got array with shape (1,)”

# multivariate one step problem with lstm
from numpy import array
from numpy import hstack
from numpy import insert
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from keras.preprocessing.sequence import TimeseriesGenerator
# define dataset
in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105])
out_seq = array([25, 45, 65, 85, 105, 125, 145, 165, 185, 205])
# reshape series
in_seq1 = in_seq1.reshape((len(in_seq1), 1))
in_seq2 = in_seq2.reshape((len(in_seq2), 1))
out_seq = out_seq.reshape((len(out_seq), 1))
# horizontally stack columns
dataset = hstack((in_seq1, in_seq2))
'''
PROBLEM
shift the target sample by one step
out_seq = insert(out_seq, 0, 0)
Ignore the shift and let's just try to fit the model.
NOTE : Error at "model.fit_generator..."
Error when checking target: expected dense_14 to have shape (2,) but got array with shape (1,)
'''
# define generator
n_input = 1
generator = TimeseriesGenerator(dataset, out_seq, length=n_input, batch_size=1)
# define model
model = Sequential()
model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))
model.add(Dense(2))
model.compile(optimizer='adam', loss='mse')
# fit model
model.fit_generator(generator, steps_per_epoch=1, epochs=5, verbose=1)
# make a one step prediction out of sample
x_input = array([[90, 95], [100, 105]]).reshape((1, n_input, n_features))
yhat = model.predict(x_input, verbose=0)
print(yhat)
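The error says the model ends in Dense(2), while each target from the generator has a single value (out_seq has one column). Two other things stand out in the snippet: n_features is never defined, and with n_input = 1 the prediction input [[90, 95], [100, 105]] (four values) cannot be reshaped to (1, 1, 2). A minimal NumPy sketch, assuming the goal is to predict the single out_seq value: derive the sizes from the arrays instead of hard-coding them. The model lines would then use input_shape=(n_input, n_features) and Dense(n_output).

```python
import numpy as np

in_seq1 = np.array([10, 20, 30, 40, 50, 60, 70, 80, 90, 100]).reshape((-1, 1))
in_seq2 = np.array([15, 25, 35, 45, 55, 65, 75, 85, 95, 105]).reshape((-1, 1))
out_seq = np.array([25, 45, 65, 85, 105, 125, 145, 165, 185, 205]).reshape((-1, 1))
dataset = np.hstack((in_seq1, in_seq2))

n_input = 1
n_features = dataset.shape[1]  # 2 input columns -> input_shape=(n_input, n_features)
n_output = out_seq.shape[1]    # 1 target column -> Dense(n_output), i.e. Dense(1)

# an out-of-sample input must hold exactly n_input rows of n_features values;
# with n_input = 1 that is a single row such as [100, 105]
x_input = np.array([[100, 105]]).reshape((1, n_input, n_features))
print(n_features, n_output, x_input.shape)  # 2 1 (1, 1, 2)
```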

Hi Jason, I have used a window by applying the shift function to my time series data to convert it to supervised data.

Can you please tell me what the main difference is between using the generator and the window shift function?

I have read a couple of articles and am still not able to understand. Can you please help me understand?

I think they are probably functionally equivalent, just different implementations of the same thing.

Hi Jason,

Thanks for your great tutorial.

I bought the book advertised in this article, “Deep Learning for Time Series Forecasting”, and could not find any reference to the TimeseriesGenerator.

Has TimeseriesGenerator become obsolete, or did you forget to update your book?

Looking forward to reading your book 🙂

Thanks!

No, I just don’t recommend using it (e.g. it’s not flexible enough for me), therefore it’s not used in the tutorials or in the book.

Hello Jason, I am getting this error:

My keras version = 2.3.0-tf

Thanks for your blogs!

---------------------------------------------------------------------------
ValueError Traceback (most recent call last)
in ()
     15 # define model
     16 model = Sequential()
---> 17 model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))
     18 model.add(Dense(1))
     19 model.compile(optimizer='adam', loss='mse')

~\AppData\Roaming\Python\Python36\site-packages\keras\engine\sequential.py in add(self, layer)
    164 # and create the node connecting the current layer
    165 # to the input layer we just created.
--> 166 layer(x)
    167 set_inputs = True
    168 else:

~\AppData\Roaming\Python\Python36\site-packages\keras\layers\recurrent.py in __call__(self, inputs, initial_state, constants, **kwargs)
    539
    540 if initial_state is None and constants is None:
--> 541 return super(RNN, self).__call__(inputs, **kwargs)
    542
    543 # If any of `initial_state` or `constants` are specified and are Keras

~\AppData\Roaming\Python\Python36\site-packages\keras\backend\tensorflow_backend.py in symbolic_fn_wrapper(*args, **kwargs)
     73 if _SYMBOLIC_SCOPE.value:
     74 with get_graph().as_default():
---> 75 return func(*args, **kwargs)
     76 else:
     77 return func(*args, **kwargs)

~\AppData\Roaming\Python\Python36\site-packages\keras\engine\base_layer.py in __call__(self, inputs, **kwargs)
    461 'You can build it manually via: '
    462 '`layer.build(batch_input_shape)`')
--> 463 self.build(unpack_singleton(input_shapes))
    464 self.built = True
    465

~\AppData\Roaming\Python\Python36\site-packages\keras\layers\recurrent.py in build(self, input_shape)
    500 self.cell.build([step_input_shape] + constants_shape)
    501 else:
--> 502 self.cell.build(step_input_shape)
    503
    504 # set or validate state_spec

~\AppData\Roaming\Python\Python36\site-packages\keras\layers\recurrent.py in build(self, input_shape)
   1917 initializer=self.kernel_initializer,
   1918 regularizer=self.kernel_regularizer,
-> 1919 constraint=self.kernel_constraint)
   1920 self.recurrent_kernel = self.add_weight(
   1921 shape=(self.units, self.units * 4),

~\AppData\Roaming\Python\Python36\site-packages\keras\engine\base_layer.py in add_weight(self, name, shape, dtype, initializer, regularizer, trainable, constraint)
    277 if dtype is None:
    278 dtype = self.dtype
--> 279 weight = K.variable(initializer(shape, dtype=dtype),
    280 dtype=dtype,
    281 name=name,

~\AppData\Roaming\Python\Python36\site-packages\keras\initializers.py in __call__(self, shape, dtype)
    225 limit = np.sqrt(3. * scale)
    226 x = K.random_uniform(shape, -limit, limit,
--> 227 dtype=dtype, seed=self.seed)
    228 if self.seed is not None:
    229 self.seed += 1

~\AppData\Roaming\Python\Python36\site-packages\keras\backend\tensorflow_backend.py in random_uniform(shape, minval, maxval, dtype, seed)
   4355 with tf_ops.init_scope():
   4356 return tf_keras_backend.random_uniform(
-> 4357 shape, minval=minval, maxval=maxval, dtype=dtype, seed=seed)
   4358
   4359

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\keras\backend.py in random_uniform(shape, minval, maxval, dtype, seed)
   5684 seed = np.random.randint(10e6)
   5685 return random_ops.random_uniform(
-> 5686 shape, minval=minval, maxval=maxval, dtype=dtype, seed=seed)
   5687
   5688

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\ops\random_ops.py in random_uniform(shape, minval, maxval, dtype, seed, name)
    286 maxval_is_one = maxval is 1 # pylint: disable=literal-comparison
    287 if not minval_is_zero or not maxval_is_one or dtype.is_integer:
--> 288 minval = ops.convert_to_tensor(minval, dtype=dtype, name="min")
    289 maxval = ops.convert_to_tensor(maxval, dtype=dtype, name="max")
    290 seed1, seed2 = random_seed.get_seed(seed)

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\framework\ops.py in convert_to_tensor(value, dtype, name, as_ref, preferred_dtype, dtype_hint, ctx, accepted_result_types)
   1339
   1340 if ret is None:
-> 1341 ret = conversion_func(value, dtype=dtype, name=name, as_ref=as_ref)
   1342
   1343 if ret is NotImplemented:

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\framework\tensor_conversion_registry.py in _default_conversion_function(***failed resolving arguments***)
     50 def _default_conversion_function(value, dtype, name, as_ref):
     51 del as_ref # Unused.
---> 52 return constant_op.constant(value, dtype, name=name)
     53
     54

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\framework\constant_op.py in constant(value, dtype, shape, name)
    260 """
    261 return _constant_impl(value, dtype, shape, name, verify_shape=False,
--> 262 allow_broadcast=True)
    263
    264

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\framework\constant_op.py in _constant_impl(value, dtype, shape, name, verify_shape, allow_broadcast)
    268 ctx = context.context()
    269 if ctx.executing_eagerly():
--> 270 t = convert_to_eager_tensor(value, ctx, dtype)
    271 if shape is None:
    272 return t

~\AppData\Roaming\Python\Python36\site-packages\tensorflow\python\framework\constant_op.py in convert_to_eager_tensor(value, ctx, dtype)
     94 dtype = dtypes.as_dtype(dtype).as_datatype_enum
     95 ctx.ensure_initialized()
---> 96 return ops.EagerTensor(value, ctx.device_name, dtype)
     97
     98

ValueError: object __array__ method not producing an array

Sorry to hear that, this may help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Thanks for your great tutorial, Jason.

May I ask a question?

I want to predict the AQI (Air Quality Index) of some places. There are relationships among nearby places, so I use a TimeseriesGenerator for each place.

Then I want to concatenate generator1, generator2… to train one model. How can I do that?

Perhaps train them consecutively on the same model?

Or perhaps use some custom code to create your own generator that loads all datasets and trains on them all in one run.

Thanks for your reply! But I don’t know how to create my own generator.

I found a way: use np.vstack to concatenate generator1[0][0], generator1[1][0]…, then train the model with fit() instead of fit_generator().

I’m testing it, hoping it works.

If you are new to Python generators, this will help:

https://wiki.python.org/moin/Generators
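A minimal NumPy sketch of the vstack approach, assuming every site has the same feature columns: windows are built separately per site, so no sample spans two sites, and only then stacked into one training set for fit(). windows_for_site and the random site data are hypothetical.

```python
import numpy as np

def windows_for_site(data, targets, length):
    # same pairing as TimeseriesGenerator's default: data[i - length:i] -> targets[i]
    rows = range(length, len(data))
    X = np.array([data[i - length:i] for i in rows])
    y = np.array([targets[i] for i in rows])
    return X, y

rng = np.random.default_rng(42)
sites = [(rng.random((50, 3)), rng.random(50)) for _ in range(4)]  # 4 sites, 50 steps, 3 features

parts = [windows_for_site(data, targets, length=6) for data, targets in sites]
X = np.vstack([X_site for X_site, _ in parts])       # (4 * 44, 6, 3)
y = np.concatenate([y_site for _, y_site in parts])  # (4 * 44,)
print(X.shape, y.shape)
```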

I am also unable to fit the generator using this example. The problem is with the dataset dimensions:

Error when checking target: expected dense_6 to have 3 dimensions, but got array with shape (1000, 1)

Could someone elaborate on the dimensions here? It is difficult to understand the dimensions of the generator: what they currently are and what they should be.

Sorry to hear that you’re having trouble with the example, perhaps some of these suggestions will help:

https://machinelearningmastery.com/faq/single-faq/why-does-the-code-in-the-tutorial-not-work-for-me

Thank you very much!

Does your book (Deep Learning for Time Series Forecasting) build a model based on TimeseriesGenerator?

No, all examples use custom code for controlled walk-forward validation of forecast models.

Thank you very much! I just have a question about using TimeseriesGenerator with validation. Usually, the length parameter of TimeseriesGenerator has to be less than the length of the test dataset.

Do you happen to know this one?

If not, it is totally fine with me. I do not want you to bother to explain this one!

I do not use validation sets for time series forecasting, I don’t see a straightforward way to implement it.

Hi Jason, and thank for the great tutorial.

May I ask a question please.

In the Multi-step Forecasts case above, what if one needs to use the generator for a dataset whose inputs include multiple rows of (independent) features, the current one and a number of previous ones, and only the current dependent output?

The idea is that the current output can be forecast as shown below.

What could be written in this case?

[[[10. 15.] 25.] [[20. 25.]]] => [45.]

[[[20. 25.][45.]] [[30. 35.]]] => [65.]

[[[30. 35.][65.]] [[40. 45.]]] => [85.]

……..

You’re welcome.

Perhaps.

It might be easier to code a custom generator for your data yourself. E.g. see the examples of data prep here:

https://machinelearningmastery.com/how-to-develop-lstm-models-for-time-series-forecasting/

Thanks much, I didn’t refresh the page to see your response.

No problem.

In other words, in the example below, 31, 32, and 33 are independent values that do not need to be predicted by the model. Only 36 is the subject of the prediction.

Generator[0] => (array([[[ 1,  2,  3,  6],
                         [11, 12, 13, 16],
                         [21, 22, 23, 26]]]), array([[31, 32, 33, 36]]))
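Following the suggestion to write the generator yourself, here is one way to frame it, sketched in NumPy: each sample gets the previous rows (features plus target) as one input and the current features as a second input, with only the current target as output. frame_with_current_features is a hypothetical helper, and the two-input layout assumes a functional-API model with two Input layers.

```python
import numpy as np

def frame_with_current_features(rows, length):
    """Hypothetical helper: rows has shape (n, k + 1), with k independent
    feature columns followed by one dependent target column."""
    X_past, X_now, y = [], [], []
    for i in range(length, len(rows)):
        X_past.append(rows[i - length:i])  # previous rows: features and target
        X_now.append(rows[i, :-1])         # current features only
        y.append(rows[i, -1])              # current target
    return np.array(X_past), np.array(X_now), np.array(y)

rows = np.array([[1, 2, 3, 6],
                 [11, 12, 13, 16],
                 [21, 22, 23, 26],
                 [31, 32, 33, 36]])
X_past, X_now, y = frame_with_current_features(rows, length=3)
print(X_past.shape, X_now, y)  # (1, 3, 4) [[31 32 33]] [36]
```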

Hey Jason,

Thanks for this tutorial. I am trying to implement this with my dataset that has a shape of (1821, 137), with 1821 being the time steps and 137 being the features. I define the generator and print the samples, but I keep getting “1” for my sample count when it obviously should be higher. Do you know what the issue is?

# 1820 so that one observation can be the current time step
generator = TimeseriesGenerator(data, data, length=1820, batch_size=1)
print('Samples: %d' % len(generator))

Samples: 1

Thank you,

Sorry, I don’t know the cause of your fault. Perhaps some of these tips will help:

https://machinelearningmastery.com/faq/single-faq/can-you-read-review-or-debug-my-code

Hey Jason,

I found the issue. In the Keras TimeseriesGenerator documentation (https://keras.io/api/preprocessing/timeseries/), they define length as “Length of the output sequences (in number of timesteps)”. So in my question above, by setting length = 1820, I’m actually defining the length of my output sequence as 1820, which leaves me with only 1 sample as input.

When I set length = 1 and print the number of samples, I get the number I was looking for: 1820.

What do you think?

Perhaps test it with some sample data and see if it matches your expectations.
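One way to check expectations without a model: based on my reading of the Keras source (an assumption; check your installed version, since an older release gave one fewer sample earlier in this thread), len(generator) counts batches, and length is the number of time steps in each input window. With 1821 rows and length=1820, only one window fits (rows 0 to 1819 predicting row 1820). generator_len is a hypothetical helper reproducing that arithmetic.

```python
def generator_len(n_rows, length, batch_size=1, stride=1, start_index=0, end_index=None):
    """Number of batches len(TimeseriesGenerator(...)) should report, following my
    reading of its __len__ (assumption; verify against your Keras version)."""
    if end_index is None:
        end_index = n_rows - 1            # last row that can serve as a target
    first_target = start_index + length   # first row with a full window before it
    return (end_index - first_target + batch_size * stride) // (batch_size * stride)

print(generator_len(10, 2))                # 8, the tutorial's univariate example
print(generator_len(1821, 1820))           # 1
print(generator_len(1821, 1))              # 1820
print(generator_len(10, 2, batch_size=8))  # 1 batch holding all 8 samples
```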

Just curious: does it work with .tif files from Landsat and MODIS data?

Sorry, I don’t know what “Landsat and MODIS data” means.

Just want to say thanks for your consistently high quality tutorials. I keep ending back on your site from my Googling, and your explanations and examples are some of the best. I also find I have a confidence in the validity of your explanations, compared to many other sites where I have to question whether the training results are legitimate.

Thanks Chris!

Hi Jason,

Is there a way to use time series generator objects in RandomizedGridSearch through the KerasRegressor wrapper? I am trying to optimize my LSTM model.

I am trying to do this, but first, it needs X and y as separate arrays; when I solve this, I think it has a problem in model evaluation.

Is there an example that might help?

I don’t believe so, I would recommend using the Keras API directly and running your own random/grid search manually.
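A manual random search can be a short loop. In this sketch, evaluate is a hypothetical placeholder for whatever fits your LSTM on a training generator and returns an error score on held-out data; toy_evaluate exists only so the code runs. Setting n_iter to the grid size turns this into a full grid search.

```python
import itertools
import random

def random_search(space, evaluate, n_iter, seed=1):
    """Sample up to n_iter configurations from the grid in `space` and
    return (best_score, best_config), where lower scores are better."""
    keys = list(space)
    grid = list(itertools.product(*(space[k] for k in keys)))
    rng = random.Random(seed)
    candidates = rng.sample(grid, min(n_iter, len(grid)))
    results = []
    for values in candidates:
        config = dict(zip(keys, values))
        results.append((evaluate(config), config))
    return min(results, key=lambda r: r[0])

def toy_evaluate(config):
    # placeholder only: in practice, build the model from config, fit it
    # and return the error on held-out data
    return abs(config["units"] - 50) + config["n_input"]

space = {"units": [25, 50, 100], "n_input": [1, 2, 3]}
best_score, best_config = random_search(space, toy_evaluate, n_iter=9)
print(best_score, best_config)
```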

I took the opportunity and created the following keras independent package: https://time-series-generator.readthedocs.io/

It would be great if we could augment its functionality overcoming the limitation in TimeseriesGenerator just mentioned here in the post. Pull requests in this regard are welcome!

Well done!

There is a candidate solution to this limitation here: https://time-series-generator.readthedocs.io/en/latest/#advanced-usage

For my project, I am working in the machine learning area. My YOLO output contains four classes, 1) not_empty, 2) empty, 3) hand, and 4) tool, plus the coordinates of the detected objects. The task is to build a theoretical dataset for classifying the process.

For example, consider a 10-second time series. If from second 1 to 8 YOLO detects only the class hand and its coordinates X and Y (for simplicity I am using X and Y instead of Xmin, Xmax, Ymin, Ymax), and at the 9th and 10th seconds it also detects the class tool with coordinates near the hand, then based on that we need to classify this as a new process: picking up a tool.

In this way, we need to define 3 processes manually in Excel or CSV format, feed them as input to a model made only of Dense layers, and classify the process. Each of my input series has 8 features and 10 time stamps, and I have 3 series in total. My output will be 1 class for each series. I tried TimeseriesGenerator, but it does not help because the input length and target length are different. Which other method should I use?

Not sure I follow, sorry. Perhaps the tutorials here will give you some ideas:

https://machinelearningmastery.com/start-here/#deep_learning_time_series
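If each example is a fixed-length series (here 10 time steps and 8 features) with one class label, the windowing of TimeseriesGenerator may not be needed at all: the series can be stacked into one array with one label per series. A minimal NumPy sketch with random stand-in data; the flattening step assumes a model that starts with Dense layers, as described above.

```python
import numpy as np

rng = np.random.default_rng(7)
series_list = [rng.random((10, 8)) for _ in range(3)]  # 3 series: 10 time steps, 8 features
labels = np.array([0, 1, 2])                           # one (made-up) class per series

X = np.stack(series_list)             # (3, 10, 8): usable by recurrent/conv layers
X_flat = X.reshape((X.shape[0], -1))  # (3, 80): one flat vector per series for Dense layers
print(X.shape, X_flat.shape, labels.shape)
```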

Hi Jason,

I created a CNN-LSTM network to process real time satellite images and power data.

A time series of satellite images is processed by a TimeDistributed CNN and flattened, and then the additional power time series data is concatenated to it.

n_input timesteps are used to predict n_output timesteps

Structure:

# define model CNN
visible1 = Input(shape=(n_input, pixels, pixels, 3))
vgg_cnn = TimeDistributed(vggmodel)(visible1)
flat1 = TimeDistributed(Flatten())(vgg_cnn)
# Actual power input (4 variables)
visible2 = Input(shape=(n_input,4))
# Merge two models
merge = concatenate([flat1, visible2])
# Encoder - LSTM
LSTM1 = LSTM(400, activation='relu', return_sequences=True)(merge)
LSTM2 = LSTM(400, activation='relu', return_sequences=True)(LSTM1)
LSTM3 = LSTM(400, activation='relu', return_sequences=False)(LSTM2)
# Decoder - LSTM
Repeater = RepeatVector(n_out)(LSTM3)
LSTM4 = LSTM(400, activation='relu', return_sequences=True)(Repeater)
LSTM5 = LSTM(400, activation='relu', return_sequences=True)(LSTM4)
LSTM6 = LSTM(400, activation='relu', return_sequences=True)(LSTM5)
hidden1 = TimeDistributed(Dense(400, activation='relu'))(LSTM6)
hidden2 = TimeDistributed(Dense(100, activation='relu'))(hidden1)
output = TimeDistributed(Dense(1))(hidden2)
# put the model together
model = Model(inputs=[visible1, visible2], outputs=output)
#compile method
model.compile(loss='mse', optimizer='adam', metrics=['mse','mae',
              metrics.RootMeanSquaredError()])

Now I could fit the model with the data directly, using:

model.fit([X_train_images_steps, X_train_power_steps], y_train_steps, batch_size=96, …etc.)

However, this is not possible with a large amount of image data for obvious reasons.

So I need to use a generator to make this work; however, the standard TimeseriesGenerator does not support multiple inputs ([x1, x2], y).

I created a workaround; however, this changes the object type from a Sequence (which is what a TimeseriesGenerator is) to a generic generator.

Workaround:

def generate_generator_multiple(X_train, y_train, y_train_steps):
    batch_size = 96
    # INPUT 1 - Image generator
    data_gen1 = TimeseriesGenerator(X_train_image, y_train_steps_out, length=n_input, batch_size=batch_size)
    # INPUT 2 - Actual generator
    data_gen2 = TimeseriesGenerator(X_train_power, y_train_steps_out, length=n_input, batch_size=batch_size)
    i = -1
    while True:
        if i == -1:
            print('initiation')
            x1, y1 = data_gen1[0]
            x2, y2 = data_gen2[0]
            i += 1
        elif i >= 0:
            print('generation with i: ' + str(i))
            x1, y1 = data_gen1[i]
            x2, y2 = data_gen2[i]
            i += 1
            if i == len(data_gen2): i = 0
            else: i = i
            print('next i: ' + str(i))
        yield [x1, x2], y1

With:

model.fit(generate_generator_multiple(X_train, y_train, y_train_steps), steps_per_epoch=365, …etc.)

There is no error but the training error is all over the place (jumping up and down instead of consistently going down like it did with the direct feed-in approach), and the model doesn’t learn to make good predictions.

What do you think is a good workaround? Perhaps create my own version of the TimeseriesGenerator class?

Long question, but I hope you can help with some ideas.

Really learned a lot from all your blogs, thanks a lot!

Kind regards,

Gijs

I don’t have the capacity to review your code, sorry.

Perhaps you can summarise your problem in a sentence or two?

Hi Jason,

I solved the problem.

The question was: How to manipulate a TimeSeriesGenerator to output something like [x1, x2], y when training a model with various input streams.

My workaround above does not work, as Keras fit() expects a Sequence instead of a general generator.

The solution is to adapt the functionality of TimeseriesGenerator (a subclass of keras.utils.Sequence) for your own purpose.

If somebody ever is coming across the same problem, here is my solution code:

[DELETED]

I’m happy to hear you solved your problem!

Perhaps you can experiment with the TimeseriesGenerator on some test data and prototype a few approaches until you achieve your desired effect?
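The solution code in Gijs’s comment was removed at his request, so what follows is an independent, hypothetical sketch of the same idea, not his code: an index-based batch source that yields ([x1, x2], y). In a real TF 2.x project you would subclass tf.keras.utils.Sequence (an assumption about your setup); here the class only implements __len__ and __getitem__ so it runs with NumPy alone. Windowing mirrors TimeseriesGenerator’s default pairing of rows[i - length:i] with targets[i].

```python
import numpy as np

class MultiInputWindowSequence:
    """Hypothetical multi-input windowed batch source (duck-typed Sequence)."""

    def __init__(self, data1, data2, targets, length, batch_size):
        assert len(data1) == len(data2) == len(targets)
        self.data1, self.data2, self.targets = data1, data2, targets
        self.length, self.batch_size = length, batch_size
        self.rows = np.arange(length, len(targets))  # target indices with a full window

    def __len__(self):
        # number of batches; the final batch may be smaller
        return int(np.ceil(len(self.rows) / self.batch_size))

    def __getitem__(self, idx):
        rows = self.rows[idx * self.batch_size:(idx + 1) * self.batch_size]
        x1 = np.array([self.data1[r - self.length:r] for r in rows])
        x2 = np.array([self.data2[r - self.length:r] for r in rows])
        y = np.array([self.targets[r] for r in rows])
        return [x1, x2], y

images = np.zeros((10, 4, 4, 3))               # 10 time steps of tiny stand-in images
power = np.arange(40, dtype=float).reshape((10, 4))  # 10 time steps, 4 power variables
targets = np.arange(10, dtype=float)
seq = MultiInputWindowSequence(images, power, targets, length=3, batch_size=4)
(x1, x2), y = seq[0]
print(len(seq), x1.shape, x2.shape, y.shape)  # 2 (4, 3, 4, 4, 3) (4, 3, 4) (4,)
```

Because batches are looked up by index rather than produced by an endless while loop, every epoch covers each window exactly once.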

Hi Jason,

Is it possible for me to delete my own comments here? Or can you do that?

I shared parts of my code here that are picked up by Turnitin. I do not like that.

Kind regards, Gijs

I deleted the code from the comment for you. I hope that helps.

Hi Jason,

Thank you so much! Just the deleted code bit is fine.

However, my question also holds for the comment above (#599918).

Can you also delete the code part from that comment?

Finally, I would like to thank you a lot for this website and all the blogs you write. It helped me a lot during my graduation project!!

Many thanks, Gijs

Hi Jason, thanks for the tutorial. I want to predict 300 data points ahead (I have 1200 data points). How do I predict 300 data points with a univariate model?

Simplest would be to predict one step at a time and reuse the prediction result to predict next time step. However, you should expect the prediction accuracy to drop a lot at 300 steps ahead.
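The one-step-at-a-time approach can be sketched as a loop that slides the input window forward and appends each prediction. predict_one_step is a stand-in (an assumption) for something like model.predict(window.reshape((1, n_input, 1)))[0, 0], and naive_trend is a hypothetical predictor used only so the example runs.

```python
import numpy as np

def recursive_forecast(history, n_input, n_steps, predict_one_step):
    """Forecast n_steps ahead by feeding each prediction back in as input."""
    window = [float(v) for v in history[-n_input:]]
    forecasts = []
    for _ in range(n_steps):
        yhat = float(predict_one_step(np.array(window)))
        forecasts.append(yhat)
        window = window[1:] + [yhat]  # drop the oldest value, append the prediction
    return np.array(forecasts)

def naive_trend(window):
    # hypothetical predictor: continue the most recent step change
    return window[-1] + (window[-1] - window[-2])

history = np.arange(1, 11, dtype=float)
print(recursive_forecast(history, n_input=2, n_steps=3, predict_one_step=naive_trend))  # [11. 12. 13.]
```

Because every forecast after the first depends on earlier forecasts, errors compound, which is why accuracy 300 steps ahead is likely to be poor.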

Hi Jason,

For the code below (lifted from the example in the tutorial above), may I ask: if I use input_shape instead of input_dim, what “input_shape” should I use? Thank you

I tried input_shape= (n_input,), but I get a different yhat as compared to input_dim=n_input

Thank you so much!

# univariate one step problem with mlp
# define generator
n_input = 2
generator = TimeseriesGenerator(series, series, length=n_input, batch_size=8)
# define model
model = Sequential()
model.add(Dense(100, activation='relu', input_dim=n_input))
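For a Dense first layer, input_dim=n_input is Keras shorthand for input_shape=(n_input,), so both should build the same model. A more likely cause of a different yhat is randomness: weights are initialized randomly and training is stochastic, so two runs rarely match unless seeds are fixed. The NumPy sketch below imitates only the forward pass of a Dense layer with ReLU (not Keras code; the uniform initializer is an assumption) to show that the same input gives different outputs under different random weights.

```python
import numpy as np

def dense_relu(x, units, seed):
    # forward pass of a Dense + ReLU layer with randomly initialized weights
    rng = np.random.default_rng(seed)
    W = rng.uniform(-0.1, 0.1, size=(x.shape[-1], units))
    b = np.zeros(units)
    return np.maximum(0.0, x @ W + b)

n_input = 2
x = np.array([[9.0, 10.0]])  # one sample of n_input values: shape (1, n_input)
out_a = dense_relu(x, 100, seed=1)
out_b = dense_relu(x, 100, seed=1)  # same seed: identical output
out_c = dense_relu(x, 100, seed=2)  # different seed: different output
print(np.allclose(out_a, out_b), np.allclose(out_a, out_c))
```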

Hi Jason,

For the code below, if I change n_input and length in the generator, I get an error and it does not run properly. I don’t understand the relationship between n_input and n_features, because if they are different and independent of each other, I again get an error.

Also, I don’t know whether I am concatenating the features in my network along the right axis.

I would really appreciate it if you could help me with this, since I have been stuck on it for a while.

from locale import normalize

import tensorflow as tf

from sklearn.preprocessing import MinMaxScaler

from focal_loss import BinaryFocalLoss

from sklearn.model_selection import train_test_split

SIGNAL_WIDTH = 61862

import h5py

import numpy as np

import os

import scipy.io

from keras.layers import Input

def build_model(input_layer):
    #input_shape = (16,None,640)
    #input_layer = Input((None,100))
    c1 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(input_layer)
    c1 = tf.keras.layers.BatchNormalization()(c1)
    c1 = tf.keras.layers.LeakyReLU(alpha=0.01)(c1)
    c1 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(c1)
    c1 = tf.keras.layers.BatchNormalization()(c1)
    c1 = tf.keras.layers.LeakyReLU(alpha=0.01)(c1)
    p1 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c1)
    c2 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p1)
    c2 = tf.keras.layers.BatchNormalization()(c2)
    c2 = tf.keras.layers.LeakyReLU(alpha=0.01)(c2)
    p2 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c2)
    c3 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p2)
    c3 = tf.keras.layers.BatchNormalization()(c3)
    c3 = tf.keras.layers.LeakyReLU(alpha=0.01)(c3)
    p3 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c3)
    c4 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p3)
    c4 = tf.keras.layers.BatchNormalization()(c4)
    c4 = tf.keras.layers.LeakyReLU(alpha=0.01)(c4)
    p4 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c4)
    c5 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p4)
    c5 = tf.keras.layers.BatchNormalization()(c5)
    c5 = tf.keras.layers.LeakyReLU(alpha=0.01)(c5)
    p5 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c5)
    c6 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p5)
    c6 = tf.keras.layers.BatchNormalization()(c6)
    c6 = tf.keras.layers.LeakyReLU(alpha=0.01)(c6)
    p6 = tf.keras.layers.MaxPool1D(pool_size=2, strides=2, padding="same")(c6)
    c7 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(p6)
    c7 = tf.keras.layers.BatchNormalization()(c7)
    c7 = tf.keras.layers.LeakyReLU(alpha=0.01)(c7)
    c7 = tf.keras.layers.Conv1D(filters=32,kernel_size=3,strides=1, padding="same")(c7)
    c7 = tf.keras.layers.BatchNormalization()(c7)
    c7 = tf.keras.layers.LeakyReLU(alpha=0.01)(c7)
    # Up_sampling & Output part
    u8 = tf.keras.layers.UpSampling1D(size=2)(c7)
    u8 = tf.keras.layers.concatenate([u8,c2],axis=1)
    c8 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u8)
    #c8 = tf.keras.layers.BatchNormalization()(c8)
    #c8 = tf.keras.layers.LeakyReLU(alpha=0.01)(c8)
    u9 = tf.keras.layers.UpSampling1D(size=2)(c8)
    u9 = tf.keras.layers.concatenate([u9,c5],axis=1)
    c9 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u9)
    #c9 = tf.keras.layers.BatchNormalization()(c9)
    c9 = tf.keras.layers.LeakyReLU(alpha=0.01)(c9)
    u10 = tf.keras.layers.UpSampling1D(size=2)(c9)
    u10 = tf.keras.layers.concatenate([u10,c4],axis=1)
    c10 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u10)
    #c10 = tf.keras.layers.BatchNormalization()(c10)
    c10 = tf.keras.layers.LeakyReLU(alpha=0.01)(c10)
    u11 = tf.keras.layers.UpSampling1D(size=2)(c10)
    u11 = tf.keras.layers.concatenate([u11,c3],axis=1)
    c11 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u11)
    #c11 = tf.keras.layers.BatchNormalization()(c11)
    c11 = tf.keras.layers.LeakyReLU(alpha=0.01)(c11)
    u12 = tf.keras.layers.UpSampling1D(size=2)(c11)
    u12 = tf.keras.layers.concatenate([u12,c2],axis=1)
    c12 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u12)
    #c12 = tf.keras.layers.BatchNormalization()(c12)
    c12 = tf.keras.layers.LeakyReLU(alpha=0.01)(c12)
    u13 = tf.keras.layers.UpSampling1D(size=2)(c12)
    u13 = tf.keras.layers.concatenate([u13,c1],axis=1)
    c13 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(u13)
    #c13 = tf.keras.layers.BatchNormalization()(c13)
    #c13 = tf.keras.layers.LeakyReLU(alpha=0.01)(c13)
    c13 = tf.keras.layers.Conv1DTranspose(filters=32,kernel_size=3,strides=1, padding="same")(c13)
    #c13 = tf.keras.layers.BatchNormalization()(c13)
    #c13 = tf.keras.layers.LeakyReLU(alpha=0.01)(c13)
    c13 = tf.keras.layers.Conv1DTranspose(filters=2,kernel_size=3,strides=1, padding="same")(c13)
    #c13 = tf.keras.layers.BatchNormalization()(c13)
    #c13 = tf.keras.layers.LeakyReLU(alpha=0.01)(c13)
    outputs = tf.keras.layers.Conv1DTranspose(filters=1,kernel_size=1,strides=1, padding="same",activation='sigmoid')(c13)
    return outputs

input = h5py.File('pseudo_test_640.mat')
# create scaler
scaler = MinMaxScaler()
# fit scaler on data
# dx = input['x']
# dy = input['y']
dx = np.random.randn(4724,5000)
y = np.random.randn(4724,5000)
scaler.fit(dx)
# apply transform
normalized_data = scaler.transform(dx)
#y = np.array(input["y"][:])
#inputs = np.expand_dims(normalized_data.transpose(), axis=2)
train_x, test_x , train_mask , test_mask = train_test_split(normalized_data.transpose(), y.transpose(), test_size =0.2,random_state=42)
n_features = 4724
n_input = 4000
input_layer = Input((n_input, n_features))
output_layer = build_model(input_layer)
model = tf.keras.Model([input_layer],[output_layer])
#model = tf.keras.Model([input_layer],[outputs])

import keras.backend as K
ALPHA = 2
GAMMA = 4
def FocalLoss(targets,inputs, alpha = ALPHA , gamma = GAMMA) :
    targets = K.cast(targets,'float32')
    BCE = K.binary_crossentropy(targets,inputs)
    BCE_EXP = K.exp(-BCE)
    focal_loss = K.mean(alpha*K.pow((1-BCE_EXP),gamma)*BCE)
    return focal_loss

model.compile(metrics=['accuracy'],optimizer = 'adam',loss = FocalLoss) #loss=[BinaryFocalLoss(gamma=2)],loss = tf.keras.losses.Huber()
model.summary()
generator = tf.keras.preprocessing.sequence.TimeseriesGenerator(train_x,train_mask,length = 1,batch_size= 10)
history = model.fit_generator(generator,steps_per_epoch= (len(train_x))//200,epochs = 5) #,validation_data=(test_x, test_mask)
# generator = tf.keras.preprocessing.sequence.TimeseriesGenerator(train_x,train_mask,length = 212,batch_size= 50)
# history = model.fit_generator(generator,steps_per_epoch= (len(train_x))//200,epochs = 5) #,validation_data=(test_x, test_mask)

'''
n_features = 640
n_input = 4000
input_layer = Input((n_input, n_features))
#input_layer = Input((10,640))
#train_mask = np.asarray(train_mask).astype('float64').reshape((-1,1))
output_layer = build_model(input_layer)
model = tf.keras.Model([input_layer],[output_layer])
#model = tf.keras.Model([input_layer],[outputs])
model.compile(loss = 'BinaryCrossentropy',optimizer = 'adam',metrics=['accuracy']) #loss=[BinaryFocalLoss(gamma=2)],loss = tf.keras.losses.Huber()
model.summary()
#train_x1 = train_x.reshape(4000,-1)
#train_x1 = np.expand_dims(train_x , axis = -1)
#train_mask1 = np.expand_dims(train_mask , axis = -1)
generator = tf.keras.preprocessing.sequence.TimeseriesGenerator(train_x,train_mask,length = 1,batch_size= 32)
history = model.fit_generator(generator,steps_per_epoch= (len(train_x))//32,validation_data=(test_x, test_mask),epochs = 5) #
#history = model.fit(train_x1,train_mask,epochs = 5,batch_size = 10)
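**Shapes produced by the generator.** Both questions above come down to shapes. TimeseriesGenerator(data, targets, length=L) yields input batches of shape (batch_size, L, n_features), where n_features is the number of columns in data. So a model fed by the generator should use Input((L, n_features)). This means n_input should equal the generator's length, and n_features is fixed by the data rather than chosen freely.

In the code above, train_x appears to be 4,000 rows by 4,724 columns. With length=1, each batch would have shape (batch_size, 1, 4724), which does not match Input((4000, 4724)).

**Skip-connection lengths.** On the concatenation axis: U-Net-style skip connections are usually concatenated along the channel axis (axis=-1), which requires the time lengths to match. With "same" padding, each MaxPool1D maps n steps to ceil(n/2). Upsampling c7 once therefore gives the length of c6, so pairing u8 with c2 looks unintended.

The sketch below uses NumPy only. make_windows and pooled_lengths are hypothetical helpers written for illustration, not Keras APIs, and the toy data stands in for train_x.

```python
import math
import numpy as np

def make_windows(data, targets, length):
    # Hypothetical NumPy helper mirroring TimeseriesGenerator's default windowing
    # (sampling_rate=1, stride=1, start_index=0): sample i takes rows
    # data[i - length:i] as input and targets[i] as its output.
    X = np.stack([data[i - length:i] for i in range(length, len(data))])
    y = np.stack([targets[i] for i in range(length, len(data))])
    return X, y

# Toy stand-in for train_x: 20 rows (time steps) x 3 columns (features)
n_rows, n_features = 20, 3
data = np.arange(n_rows * n_features, dtype=float).reshape(n_rows, n_features)
targets = data[:, 0]

length = 4  # the generator's length becomes the model's time dimension
X, y = make_windows(data, targets, length)
print(X.shape, y.shape)  # (16, 4, 3) (16,) -> Input((length, n_features))

def pooled_lengths(n_steps, n_pools):
    # MaxPool1D(pool_size=2, strides=2, padding="same") maps n steps to ceil(n / 2)
    lengths = [n_steps]
    for _ in range(n_pools):
        lengths.append(math.ceil(lengths[-1] / 2))
    return lengths

# Time lengths of c1..c6, then c7 (after the sixth pool), for a 64-step input
lengths = pooled_lengths(64, 6)

# UpSampling1D(size=2) repeats each time step, doubling the length of c7 ...
c7 = np.zeros((1, lengths[6], 32))
u8 = np.repeat(c7, 2, axis=1)
# ... which matches c6, so the two can be stacked along the channel axis
c6 = np.zeros((1, lengths[5], 32))
merged = np.concatenate([u8, c6], axis=-1)
print(lengths, u8.shape, merged.shape)
```

With this design, the input length should be divisible by 2**6 = 64 so that every upsampled tensor lines up exactly with its encoder counterpart.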

Hi SF_mir… Please elaborate on the exact error message you are receiving so that we can better assist you.

Hello.

Can data generated by TensorFlow's timeseries_dataset_from_array or TimeseriesGenerator be used as input to scikit-learn?

I guess its type needs to be converted.
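Conversion is indeed needed, mainly of shape. The windows are 3D, (samples, length, n_features), while scikit-learn estimators expect a 2D array of shape (n_samples, n_features). A common approach is to flatten each window into one row.

The sketch below assumes NumPy and scikit-learn are installed. It builds the windows with a hypothetical make_windows helper that mirrors TimeseriesGenerator's default behaviour, and uses LinearRegression purely as an example estimator.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

def make_windows(data, targets, length):
    # Hypothetical helper mirroring TimeseriesGenerator's default windowing:
    # sample i uses data[i - length:i] as input and targets[i] as output.
    X = np.stack([data[i - length:i] for i in range(length, len(data))])
    y = np.stack([targets[i] for i in range(length, len(data))])
    return X, y

# The univariate series from the tutorial, as a single column
series = np.arange(1, 11, dtype=float).reshape(-1, 1)
X, y = make_windows(series, series[:, 0], length=3)  # X: (7, 3, 1), y: (7,)

# scikit-learn expects (n_samples, n_features), so flatten each
# (length, n_features) window into a single row
X_2d = X.reshape(X.shape[0], -1)  # (7, 3)
model = LinearRegression().fit(X_2d, y)
yhat = model.predict(np.array([[8.0, 9.0, 10.0]]))
print(yhat)
```

**Converting an existing generator or dataset.** If you already have a TimeseriesGenerator gen, gen[i] returns an (X, y) batch. You can therefore collect all inputs with np.concatenate([gen[i][0] for i in range(len(gen))]) before reshaping. The dataset returned by timeseries_dataset_from_array can be iterated in the same way, calling .numpy() on each batch tensor.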