Develop a Deep Learning Model to Automatically Classify Movie Reviews as Positive or Negative in Python with Keras, Step-by-Step.

Word embeddings are a technique for representing text where different words with similar meaning have a similar real-valued vector representation.

They are a key breakthrough that has led to great performance of neural network models on a suite of challenging natural language processing problems.

In this tutorial, you will discover how to develop word embedding models for neural networks to classify movie reviews.

After completing this tutorial, you will know:

- How to prepare movie review text data for classification with deep learning methods.

- How to learn a word embedding as part of fitting a deep learning model.

- How to learn a standalone word embedding and how to use a pre-trained embedding in a neural network model.

Let’s get started.

- Update Nov/2019: Fixed code typo when preparing training dataset (thanks HSA).

- Update Aug/2020: Updated link to movie review dataset.

How to Develop a Word Embedding Model for Predicting Movie Review Sentiment

Photo by Katrina Br*?#*!@nd, some rights reserved.

Tutorial Overview

This tutorial is divided into 5 parts; they are:

- Movie Review Dataset

- Data Preparation

- Train Embedding Layer

- Train word2vec Embedding

- Use Pre-trained Embedding

Python Environment

This tutorial assumes you have a Python SciPy environment installed, ideally with Python 3.

You must have Keras (2.2 or higher) installed with either the TensorFlow or Theano backend.

The tutorial also assumes you have scikit-learn, Pandas, NumPy, and Matplotlib installed.

If you need help with your environment, see this tutorial:

A GPU is not required for this tutorial; nevertheless, you can access GPUs cheaply on Amazon Web Services. Learn how in this tutorial:

Let’s dive in.

1. Movie Review Dataset

The Movie Review Data is a collection of movie reviews retrieved from the imdb.com website in the early 2000s by Bo Pang and Lillian Lee. The reviews were collected and made available as part of their research on natural language processing.

The reviews were originally released in 2002, but an updated and cleaned up version was released in 2004, referred to as “v2.0”.

The dataset consists of 1,000 positive and 1,000 negative movie reviews drawn from an archive of the rec.arts.movies.reviews newsgroup hosted at imdb.com. The authors refer to this dataset as the “polarity dataset.”

Our data contains 1000 positive and 1000 negative reviews all written before 2002, with a cap of 20 reviews per author (312 authors total) per category. We refer to this corpus as the polarity dataset.

— A Sentimental Education: Sentiment Analysis Using Subjectivity Summarization Based on Minimum Cuts, 2004.

The data has been cleaned up somewhat, for example:

- The dataset is comprised of only English reviews.

- All text has been converted to lowercase.

- There is white space around punctuation like periods, commas, and brackets.

- Text has been split into one sentence per line.

The data has been used for a few related natural language processing tasks. For classification, the performance of machine learning models (such as Support Vector Machines) on the data is in the range of high 70% to low 80% (e.g. 78%-82%).

More sophisticated data preparation may see results as high as 86% with 10-fold cross validation. This gives us a ballpark of low-to-mid 80s if we were looking to use this dataset in experiments of modern methods.

… depending on choice of downstream polarity classifier, we can achieve highly statistically significant improvement (from 82.8% to 86.4%)

— A Sentimental Education: Sentiment Analysis Using Subjectivity Summarization Based on Minimum Cuts, 2004.

You can download the dataset from here:

- Movie Review Polarity Dataset (review_polarity.tar.gz, 3MB)

After unzipping the file, you will have a directory called “txt_sentoken” with two sub-directories, “neg” and “pos”, containing the text of the negative and positive reviews respectively. Reviews are stored one per file with a naming convention of cv000 to cv999 within each of neg and pos.
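As a quick sanity check on the download, the sketch below counts the review files in each sub-directory. The helper name count_reviews() is my own for illustration, and it assumes the archive was extracted into the current working directory; if everything unpacked correctly you would expect 1,000 files in each folder.

```python
from os import listdir
from os.path import isdir, join

# count the .txt review files in the 'neg' and 'pos' sub-directories
# (count_reviews is a hypothetical helper, not part of the dataset tooling)
def count_reviews(root='txt_sentoken'):
    counts = {}
    for label in ('neg', 'pos'):
        folder = join(root, label)
        # report a missing folder as 0 rather than raising an error
        if not isdir(folder):
            counts[label] = 0
            continue
        counts[label] = sum(1 for name in listdir(folder) if name.endswith('.txt'))
    return counts

print(count_reviews())
```

A count of 0 for either label most likely means the script is being run from a different directory than the one containing txt_sentoken.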

Next, let’s look at loading and preparing the text data.

2. Data Preparation

In this section, we will look at 3 things:

- Separation of data into training and test sets.

- Loading and cleaning the data to remove punctuation and numbers.

- Defining a vocabulary of preferred words.

Split into Train and Test Sets

We are pretending that we are developing a system that can predict the sentiment of a textual movie review as either positive or negative.

This means that after the model is developed, we will need to make predictions on new textual reviews. This will require all of the same data preparation to be performed on those new reviews as is performed on the training data for the model.

We will ensure that this constraint is built into the evaluation of our models by splitting the training and test datasets prior to any data preparation. This means that any knowledge in the data in the test set that could help us better prepare the data (e.g. the words used) is unavailable in the preparation of data used for training the model.

That being said, we will use the last 100 positive reviews and the last 100 negative reviews as a test set (200 reviews) and the remaining 1,800 reviews as the training dataset.

This is a 90% train, 10% test split of the data.

The split can be imposed easily by using the filenames of the reviews where reviews named 000 to 899 are for training data and reviews named 900 onwards are for test.
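A minimal sketch of that filename rule is shown below. The helper is_test_review() is a name I made up for illustration, and apart from cv000_29590.txt the filenames are invented placeholders that only follow the naming pattern.

```python
# decide whether a review belongs to the test set from its filename
# (hypothetical helper; reviews numbered 900-999 all start with 'cv9')
def is_test_review(filename):
    return filename.startswith('cv9')

# placeholder filenames following the dataset's naming pattern
names = ['cv000_29590.txt', 'cv899_00000.txt', 'cv900_00000.txt', 'cv999_00000.txt']
train_names = [n for n in names if not is_test_review(n)]
test_names = [n for n in names if is_test_review(n)]
print(train_names)
print(test_names)
```

Checking the prefix works because the review numbers are zero-padded to three digits, so every name from 900 to 999 begins with "cv9" and no training file does.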

Loading and Cleaning Reviews

The text data is already pretty clean; not much preparation is required.

If you are new to cleaning text data, see this post:

Without getting bogged down too much in the details, we will prepare the data in the following way:

- Split tokens on white space.

- Remove all punctuation from words.

- Remove all words that are not purely comprised of alphabetical characters.

- Remove all words that are known stop words.

- Remove all words that have a length <= 1 character.

We can put all of these steps into a function called clean_doc() that takes as an argument the raw text loaded from a file and returns a list of cleaned tokens. We can also define a function load_doc() that loads a document from file ready for use with the clean_doc() function.

An example of cleaning the first positive review is listed below.

```python
from nltk.corpus import stopwords
import string

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# turn a doc into clean tokens
def clean_doc(doc):
    # split into tokens by white space
    tokens = doc.split()
    # remove punctuation from each token
    table = str.maketrans('', '', string.punctuation)
    tokens = [w.translate(table) for w in tokens]
    # remove remaining tokens that are not alphabetic
    tokens = [word for word in tokens if word.isalpha()]
    # filter out stop words
    stop_words = set(stopwords.words('english'))
    tokens = [w for w in tokens if not w in stop_words]
    # filter out short tokens
    tokens = [word for word in tokens if len(word) > 1]
    return tokens

# load the document
filename = 'txt_sentoken/pos/cv000_29590.txt'
text = load_doc(filename)
tokens = clean_doc(text)
print(tokens)
```

Running the example prints a long list of clean tokens.

There are many more cleaning steps we may want to explore and I leave them as further exercises.

I’d love to see what you can come up with.

Post your approaches and findings in the comments at the end.

```
...
'creepy', 'place', 'even', 'acting', 'hell', 'solid', 'dreamy', 'depp', 'turning', 'typically', 'strong', 'performance', 'deftly', 'handling', 'british', 'accent', 'ians', 'holm', 'joe', 'goulds', 'secret', 'richardson', 'dalmatians', 'log', 'great', 'supporting', 'roles', 'big', 'surprise', 'graham', 'cringed', 'first', 'time', 'opened', 'mouth', 'imagining', 'attempt', 'irish', 'accent', 'actually', 'wasnt', 'half', 'bad', 'film', 'however', 'good', 'strong', 'violencegore', 'sexuality', 'language', 'drug', 'content']
```

Define a Vocabulary

It is important to define a vocabulary of known words when using a bag-of-words or embedding model.

The more words, the larger the representation of documents, therefore it is important to constrain the words to only those believed to be predictive. This is difficult to know beforehand and often it is important to test different hypotheses about how to construct a useful vocabulary.

We have already seen how we can remove punctuation and numbers from the vocabulary in the previous section. We can repeat this for all documents and build a set of all known words.

We can develop a vocabulary as a Counter, which is a dictionary mapping of words to their counts that allows us to easily update and query.
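If you have not used a Counter before, the small sketch below shows the update and query behaviour we rely on. The token lists are made-up toy data rather than real reviews.

```python
from collections import Counter

# start with an empty vocabulary
vocab = Counter()
# each call to update() adds the counts from one list of tokens
vocab.update(['film', 'good', 'film'])
vocab.update(['film', 'bad'])
# query the count of a single word
print(vocab['film'])         # 3
# a word that was never seen has a count of 0 rather than raising an error
print(vocab['missing'])      # 0
# retrieve the most frequent words with their counts
print(vocab.most_common(1))  # [('film', 3)]
```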

Each document can be added to the counter (a new function called add_doc_to_vocab()) and we can step over all of the reviews in the negative directory and then the positive directory (a new function called process_docs()).

The complete example is listed below.

```python
from string import punctuation
from os import listdir
from collections import Counter
from nltk.corpus import stopwords

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# turn a doc into clean tokens
def clean_doc(doc):
    # split into tokens by white space
    tokens = doc.split()
    # remove punctuation from each token
    table = str.maketrans('', '', punctuation)
    tokens = [w.translate(table) for w in tokens]
    # remove remaining tokens that are not alphabetic
    tokens = [word for word in tokens if word.isalpha()]
    # filter out stop words
    stop_words = set(stopwords.words('english'))
    tokens = [w for w in tokens if not w in stop_words]
    # filter out short tokens
    tokens = [word for word in tokens if len(word) > 1]
    return tokens

# load doc and add to vocab
def add_doc_to_vocab(filename, vocab):
    # load doc
    doc = load_doc(filename)
    # clean doc
    tokens = clean_doc(doc)
    # update counts
    vocab.update(tokens)

# load all docs in a directory
def process_docs(directory, vocab, is_trian):
    # walk through all files in the folder
    for filename in listdir(directory):
        # skip any reviews in the test set
        if is_trian and filename.startswith('cv9'):
            continue
        if not is_trian and not filename.startswith('cv9'):
            continue
        # create the full path of the file to open
        path = directory + '/' + filename
        # add doc to vocab
        add_doc_to_vocab(path, vocab)

# define vocab
vocab = Counter()
# add all docs to vocab
process_docs('txt_sentoken/neg', vocab, True)
process_docs('txt_sentoken/pos', vocab, True)
# print the size of the vocab
print(len(vocab))
# print the top words in the vocab
print(vocab.most_common(50))
```

Running the example shows that we have a vocabulary of 44,276 words.

We also can see a sample of the top 50 most used words in the movie reviews.

Note that this vocabulary was constructed based on only those reviews in the training dataset.

```
44276
[('film', 7983), ('one', 4946), ('movie', 4826), ('like', 3201), ('even', 2262), ('good', 2080), ('time', 2041), ('story', 1907), ('films', 1873), ('would', 1844), ('much', 1824), ('also', 1757), ('characters', 1735), ('get', 1724), ('character', 1703), ('two', 1643), ('first', 1588), ('see', 1557), ('way', 1515), ('well', 1511), ('make', 1418), ('really', 1407), ('little', 1351), ('life', 1334), ('plot', 1288), ('people', 1269), ('could', 1248), ('bad', 1248), ('scene', 1241), ('movies', 1238), ('never', 1201), ('best', 1179), ('new', 1140), ('scenes', 1135), ('man', 1131), ('many', 1130), ('doesnt', 1118), ('know', 1092), ('dont', 1086), ('hes', 1024), ('great', 1014), ('another', 992), ('action', 985), ('love', 977), ('us', 967), ('go', 952), ('director', 948), ('end', 946), ('something', 945), ('still', 936)]
```

We can step through the vocabulary and remove all words that have a low occurrence, such as only being used once or twice in all reviews.

For example, the following snippet will retrieve only the tokens that appear 2 or more times in all reviews.

```python
# keep tokens with a min occurrence
min_occurane = 2
tokens = [k for k,c in vocab.items() if c >= min_occurane]
print(len(tokens))
```

Running the above example with this addition shows that the vocabulary size drops by a little over 40%, from 44,276 to 25,767 words.

```
25767
```

Finally, the vocabulary can be saved to a new file called vocab.txt that we can later load and use to filter movie reviews prior to encoding them for modeling. We define a new function called save_list() that saves the vocabulary to file, with one word per line.

For example:

```python
# save list to file
def save_list(lines, filename):
    # convert lines to a single blob of text
    data = '\n'.join(lines)
    # open file
    file = open(filename, 'w')
    # write text
    file.write(data)
    # close file
    file.close()

# save tokens to a vocabulary file
save_list(tokens, 'vocab.txt')
```

Running the min occurrence filter on the vocabulary and saving it to file, you should now have a new file called vocab.txt with only the words we are interested in.

The order of words in your file will differ, but should look something like the following:

```
aberdeen
dupe
burt
libido
hamlet
arlene
available
corners
web
columbia
...
```

We are now ready to look at learning features from the reviews.

3. Train Embedding Layer

In this section, we will learn a word embedding while training a neural network on the classification problem.

A word embedding is a way of representing text where each word in the vocabulary is represented by a real valued vector in a high-dimensional space. The vectors are learned in such a way that words that have similar meanings will have similar representation in the vector space (close in the vector space). This is a more expressive representation for text than more classical methods like bag-of-words, where relationships between words or tokens are ignored, or forced in bigram and trigram approaches.
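To make "close in the vector space" concrete, here is a small sketch that compares vectors with cosine similarity. The three-dimensional vectors are invented toy values purely for illustration; real embeddings are learned from data and have many more dimensions.

```python
from math import sqrt

# cosine similarity: 1.0 means same direction, values near 0 or below mean unrelated or opposed
def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# made-up toy vectors, not learned values
toy = {
    'good': [0.9, 0.1, 0.3],
    'great': [0.8, 0.2, 0.4],
    'plot': [-0.2, 0.9, -0.5],
}
print(cosine_similarity(toy['good'], toy['great']))
print(cosine_similarity(toy['good'], toy['plot']))
```

With these toy values, "good" and "great" score much higher than "good" and "plot", which is the kind of relationship we hope a learned embedding will capture.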

The real valued vector representation for words can be learned while training the neural network. We can do this in the Keras deep learning library using the Embedding layer.

If you are new to word embeddings, see the post:

If you are new to word embedding layers in Keras, see the post:

The first step is to load the vocabulary. We will use it to filter out words from movie reviews that we are not interested in.

If you have worked through the previous section, you should have a local file called ‘vocab.txt‘ with one word per line. We can load that file and build a vocabulary as a set for checking the validity of tokens.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load the vocabulary
vocab_filename = 'vocab.txt'
vocab = load_doc(vocab_filename)
vocab = vocab.split()
vocab = set(vocab)
```

Next, we need to load all of the training data movie reviews. For that we can adapt the process_docs() from the previous section to load the documents, clean them, and return them as a list of strings, with one document per string. We want each document to be a string for easy encoding as a sequence of integers later.

Cleaning the document involves splitting each review based on white space, removing punctuation, and then filtering out all tokens not in the vocabulary.

The updated clean_doc() function is listed below.

```python
# turn a doc into clean tokens
def clean_doc(doc, vocab):
    # split into tokens by white space
    tokens = doc.split()
    # remove punctuation from each token
    table = str.maketrans('', '', punctuation)
    tokens = [w.translate(table) for w in tokens]
    # filter out tokens not in vocab
    tokens = [w for w in tokens if w in vocab]
    tokens = ' '.join(tokens)
    return tokens
```

The updated process_docs() can then call the clean_doc() for each document on the ‘pos‘ and ‘neg‘ directories that are in our training dataset.

```python
# load all docs in a directory
def process_docs(directory, vocab, is_trian):
    documents = list()
    # walk through all files in the folder
    for filename in listdir(directory):
        # skip any reviews in the test set
        if is_trian and filename.startswith('cv9'):
            continue
        if not is_trian and not filename.startswith('cv9'):
            continue
        # create the full path of the file to open
        path = directory + '/' + filename
        # load the doc
        doc = load_doc(path)
        # clean doc
        tokens = clean_doc(doc, vocab)
        # add to list
        documents.append(tokens)
    return documents

# load all training reviews
positive_docs = process_docs('txt_sentoken/pos', vocab, True)
negative_docs = process_docs('txt_sentoken/neg', vocab, True)
train_docs = negative_docs + positive_docs
```

The next step is to encode each document as a sequence of integers.

The Keras Embedding layer requires integer inputs where each integer maps to a single token that has a specific real-valued vector representation within the embedding. These vectors are random at the beginning of training, but during training become meaningful to the network.
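Conceptually, an embedding layer behaves like a lookup table with one row of numbers per integer. The sketch below imitates that idea in plain Python; it is not how Keras implements the layer, the function names are my own, and the range of the random starting values is an arbitrary assumption.

```python
import random

# build a table with one small random vector per integer index
# (hypothetical helper; the value range is an arbitrary choice)
def make_embedding_table(vocab_size, dims, seed=1):
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dims)] for _ in range(vocab_size)]

# replace each integer in a sequence with its row from the table
def embed(sequence, table):
    return [table[i] for i in sequence]

table = make_embedding_table(vocab_size=5, dims=3)
vectors = embed([2, 4, 2, 0], table)
# one vector per input integer, each with 'dims' values
print(len(vectors), len(vectors[0]))
# the same integer always maps to the same vector
print(vectors[0] == vectors[2])
```

During training, the real layer adjusts the rows of this table so that the vectors become useful for the prediction task.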

We can encode the training documents as sequences of integers using the Tokenizer class in the Keras API.

First, we must construct an instance of the class then train it on all documents in the training dataset. In this case, it develops a vocabulary of all tokens in the training dataset and develops a consistent mapping from words in the vocabulary to unique integers. We could just as easily develop this mapping ourselves using our vocabulary file.

```python
# create the tokenizer
tokenizer = Tokenizer()
# fit the tokenizer on the documents
tokenizer.fit_on_texts(train_docs)
```
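For reference, a hand-rolled version of the word-to-integer mapping might look like the sketch below. The function names are hypothetical, and numbering words alphabetically is an arbitrary choice made here for reproducibility; the Keras Tokenizer instead orders words by frequency. As with the Tokenizer, numbering starts at 1 so that 0 stays free for padding.

```python
# map each word to a unique integer, starting at 1 (0 is left free for padding)
def build_word_index(words):
    return {word: i for i, word in enumerate(sorted(set(words)), start=1)}

# encode a whitespace-separated document, skipping words not in the mapping
def encode(doc, word_index):
    return [word_index[w] for w in doc.split() if w in word_index]

word_index = build_word_index(['film', 'good', 'bad', 'plot'])
print(word_index)  # {'bad': 1, 'film': 2, 'good': 3, 'plot': 4}
print(encode('good film bad plot unseen', word_index))  # [3, 2, 1, 4]
```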

Now that the mapping of words to integers has been prepared, we can use it to encode the reviews in the training dataset. We can do that by calling the texts_to_sequences() function on the Tokenizer.

```python
# sequence encode
encoded_docs = tokenizer.texts_to_sequences(train_docs)
```

We also need to ensure that all documents have the same length.

This is a requirement of Keras for efficient computation. We could truncate reviews to the smallest size or zero-pad (pad with the value ‘0’) reviews to the maximum length, or some hybrid. In this case, we will pad all reviews to the length of the longest review in the training dataset.

First, we can find the longest review using the max() function on the training dataset and take its length. We can then call the Keras function pad_sequences() to pad the sequences to the maximum length by adding 0 values on the end.

```python
# pad sequences
max_length = max([len(s.split()) for s in train_docs])
Xtrain = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
```
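To show what post-padding does to the data, here is a plain-Python sketch using a hypothetical pad_post() helper. It is a simplification rather than a reimplementation of pad_sequences(): in particular, this sketch trims over-long sequences from the end, whereas pad_sequences() by default trims from the start.

```python
# pad each sequence with 'value' on the end until it has 'maxlen' items
# (hypothetical helper; over-long sequences are cut from the end here)
def pad_post(sequences, maxlen, value=0):
    padded = []
    for seq in sequences:
        seq = list(seq)[:maxlen]
        padded.append(seq + [value] * (maxlen - len(seq)))
    return padded

encoded = [[3, 2], [1, 4, 2, 3], [5]]
max_length = max(len(seq) for seq in encoded)
print(pad_post(encoded, max_length))  # [[3, 2, 0, 0], [1, 4, 2, 3], [5, 0, 0, 0]]
```

Trimming matters for the test set, because a test review can be longer than the longest training review used to set the maximum length.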

Finally, we can define the class labels for the training dataset, needed to fit the supervised neural network model to predict the sentiment of reviews.

```python
# define training labels
ytrain = array([0 for _ in range(900)] + [1 for _ in range(900)])
```

We can then encode and pad the test dataset, needed later to evaluate the model after we train it.

```python
# load all test reviews
positive_docs = process_docs('txt_sentoken/pos', vocab, False)
negative_docs = process_docs('txt_sentoken/neg', vocab, False)
test_docs = negative_docs + positive_docs
# sequence encode
encoded_docs = tokenizer.texts_to_sequences(test_docs)
# pad sequences
Xtest = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
# define test labels
ytest = array([0 for _ in range(100)] + [1 for _ in range(100)])
```

We are now ready to define our neural network model.

The model will use an Embedding layer as the first hidden layer. The Embedding requires the specification of the vocabulary size, the size of the real-valued vector space, and the maximum length of input documents.

The vocabulary size is the total number of words in our vocabulary, plus one because the integer 0 is reserved for padding and the Tokenizer numbers words starting at 1. This could be the vocab set length or the size of the vocab within the tokenizer used to integer encode the documents, for example:

```python
# define vocabulary size (largest integer value)
vocab_size = len(tokenizer.word_index) + 1
```

We will use a 100-dimensional vector space, but you could try other values, such as 50 or 150. Finally, the maximum document length was calculated above in the max_length variable used during padding.

The complete model definition is listed below including the Embedding layer.

We use a Convolutional Neural Network (CNN), as CNNs have proven to be successful at document classification problems. A conservative CNN configuration is used with 32 filters (parallel fields for processing words) and a kernel size of 8 with a rectified linear (‘relu’) activation function. This is followed by a pooling layer that reduces the output of the convolutional layer by half.

Next, the 2D output from the CNN part of the model is flattened to one long 1D vector to represent the ‘features’ extracted by the CNN. The back-end of the model uses standard Multilayer Perceptron layers to interpret the CNN features. The output layer uses a sigmoid activation function to output a value between 0 and 1 for the negative and positive sentiment in the review.

For more advice on effective deep learning model configuration for text classification, see the post:

```python
# define model
model = Sequential()
model.add(Embedding(vocab_size, 100, input_length=max_length))
model.add(Conv1D(filters=32, kernel_size=8, activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Flatten())
model.add(Dense(10, activation='relu'))
model.add(Dense(1, activation='sigmoid'))
print(model.summary())
```

Running just this piece provides a summary of the defined network.

We can see that the Embedding layer expects documents with a length of 442 words as input and encodes each word in the document as a 100 element vector.

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (None, 442, 100)          2576800
_________________________________________________________________
conv1d_1 (Conv1D)            (None, 435, 32)           25632
_________________________________________________________________
max_pooling1d_1 (MaxPooling1 (None, 217, 32)           0
_________________________________________________________________
flatten_1 (Flatten)          (None, 6944)              0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                69450
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 11
=================================================================
Total params: 2,671,893
Trainable params: 2,671,893
Non-trainable params: 0
_________________________________________________________________
```
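It can be helpful to check where the numbers in the summary come from. The sketch below reproduces the output shapes and parameter counts with simple arithmetic; the vocabulary size of 25,768 is inferred from the summary (2,576,800 embedding weights divided by 100 dimensions) and the variable names are my own.

```python
# configuration taken from the model definition and summary
vocab_size, dims, max_length = 25768, 100, 442
filters, kernel_size, pool_size, hidden = 32, 8, 2, 10

# embedding: one vector of 'dims' weights per integer
embedding_params = vocab_size * dims
# convolution without padding shortens the sequence by kernel_size - 1
conv_len = max_length - kernel_size + 1
# each filter has kernel_size * dims weights plus one bias
conv_params = kernel_size * dims * filters + filters
# pooling halves the length, rounding down
pool_len = conv_len // pool_size
# flatten: length times number of filters
flat_len = pool_len * filters
# dense layers: weights plus one bias per node
dense_params = flat_len * hidden + hidden
output_params = hidden * 1 + 1
total_params = embedding_params + conv_params + dense_params + output_params

print(conv_len, pool_len, flat_len)  # 435 217 6944
print(embedding_params, conv_params, dense_params, output_params)  # 2576800 25632 69450 11
print(total_params)  # 2671893
```

Almost all of the weights sit in the embedding layer, which is worth keeping in mind given how small the training dataset is.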

Next, we fit the network on the training data.

We use a binary cross entropy loss function because the problem we are learning is a binary classification problem. The efficient Adam implementation of stochastic gradient descent is used and we keep track of accuracy in addition to loss during training. The model is trained for 10 epochs, or 10 passes through the training data.

The network configuration and training schedule were found with a little trial and error, but are by no means optimal for this problem. If you can get better results with a different configuration, let me know.

```python
# compile network
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
# fit network
model.fit(Xtrain, ytrain, epochs=10, verbose=2)
```
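For intuition about what the loss measures, here is a minimal plain-Python sketch of binary cross entropy averaged over a batch. It illustrates the formula only and is not the Keras implementation; the clipping value eps is an assumption I added to avoid taking the log of zero.

```python
from math import log

# average binary cross entropy between true labels (0 or 1) and predicted probabilities
def binary_crossentropy(y_true, y_pred, eps=1e-7):
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # clip the probability so log() never sees exactly 0 or 1
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * log(p) + (1 - y) * log(1.0 - p))
    return total / len(y_true)

# confident and correct predictions give a small loss
print(binary_crossentropy([0, 1], [0.1, 0.9]))
# confident and wrong predictions give a large loss
print(binary_crossentropy([0, 1], [0.9, 0.1]))
```

This is why the loss reported during training keeps shrinking even after accuracy reaches 100%: the model is still becoming more confident in predictions that are already correct.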

After the model is fit, it is evaluated on the test dataset. This dataset contains words that we have not seen before and reviews not seen during training.

```python
# evaluate
loss, acc = model.evaluate(Xtest, ytest, verbose=0)
print('Test Accuracy: %f' % (acc*100))
```
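The accuracy reported here is the fraction of reviews whose predicted class matches the label. A rough sketch of that calculation is below, assuming the usual convention that a sigmoid output above 0.5 counts as the positive class; the function name and the example probabilities are made up for illustration.

```python
# fraction of predictions that match the labels after thresholding the probabilities
def accuracy(y_true, y_prob, threshold=0.5):
    correct = 0
    for y, p in zip(y_true, y_prob):
        predicted = 1 if p > threshold else 0
        if predicted == y:
            correct += 1
    return correct / len(y_true)

# toy labels and invented probabilities: the first and third are classified correctly
print(accuracy([0, 0, 1, 1], [0.1, 0.6, 0.8, 0.4]))  # 0.5
```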

We can tie all of this together.

The complete code listing is provided below.

```python
from string import punctuation
from os import listdir
from numpy import array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import Flatten
from keras.layers import Embedding
from keras.layers.convolutional import Conv1D
from keras.layers.convolutional import MaxPooling1D

# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# turn a doc into clean tokens
def clean_doc(doc, vocab):
    # split into tokens by white space
    tokens = doc.split()
    # remove punctuation from each token
    table = str.maketrans('', '', punctuation)
    tokens = [w.translate(table) for w in tokens]
    # filter out tokens not in vocab
    tokens = [w for w in tokens if w in vocab]
    tokens = ' '.join(tokens)
    return tokens

# load all docs in a directory
def process_docs(directory, vocab, is_trian):
    documents = list()
    # walk through all files in the folder
    for filename in listdir(directory):
        # skip any reviews in the test set
        if is_trian and filename.startswith('cv9'):
            continue
        if not is_trian and not filename.startswith('cv9'):
            continue
        # create the full path of the file to open
        path = directory + '/' + filename
        # load the doc
        doc = load_doc(path)
        # clean doc
        tokens = clean_doc(doc, vocab)
        # add to list
        documents.append(tokens)
    return documents

# load the vocabulary
vocab_filename = 'vocab.txt'
vocab = load_doc(vocab_filename)
vocab = vocab.split()
vocab = set(vocab)

# load all training reviews
positive_docs = process_docs('txt_sentoken/pos', vocab, True)
negative_docs = process_docs('txt_sentoken/neg', vocab, True)
train_docs = negative_docs + positive_docs

# create the tokenizer
tokenizer = Tokenizer()
# fit the tokenizer on the documents
tokenizer.fit_on_texts(train_docs)

# sequence encode
encoded_docs = tokenizer.texts_to_sequences(train_docs)
# pad sequences
max_length = max([len(s.split()) for s in train_docs])
Xtrain = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
# define training labels
ytrain = array([0 for _ in range(900)] + [1 for _ in range(900)])

# load all test reviews
positive_docs = process_docs('txt_sentoken/pos', vocab, False)
negative_docs = process_docs('txt_sentoken/neg', vocab, False)
test_docs = negative_docs + positive_docs
# sequence encode
encoded_docs = tokenizer.texts_to_sequences(test_docs)
# pad sequences
Xtest = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
# define test labels
ytest = array([0 for _ in range(100)] + [1 for _ in range(100)])

# define vocabulary size (largest integer value)
vocab_size = len(tokenizer.word_index) + 1

# define model
model = Sequential()
model.add(Embedding(vocab_size, 100, input_length=max_length))
model.add(Conv1D(filters=32, kernel_size=8, activation='relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Flatten())
model.add(Dense(10, activation='relu'))
model.add(Dense(1, activation='sigmoid'))
print(model.summary())
# compile network
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
# fit network
model.fit(Xtrain, ytrain, epochs=10, verbose=2)
# evaluate
loss, acc = model.evaluate(Xtest, ytest, verbose=0)
print('Test Accuracy: %f' % (acc*100))
```

Running the example prints the loss and accuracy at the end of each training epoch.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and comparing the average outcome.

We can see that the model very quickly achieves 100% accuracy on the training dataset. At the end of the run, the model achieves an accuracy of 84.5% on the test dataset, which is a great score and comparable to the low-to-mid 80s ballpark noted earlier for this dataset.

```
...
Epoch 6/10
2s - loss: 0.0013 - acc: 1.0000
Epoch 7/10
2s - loss: 8.4573e-04 - acc: 1.0000
Epoch 8/10
2s - loss: 5.8323e-04 - acc: 1.0000
Epoch 9/10
2s - loss: 4.3155e-04 - acc: 1.0000
Epoch 10/10
2s - loss: 3.3083e-04 - acc: 1.0000
Test Accuracy: 84.500000
```

We have just seen an example of how we can learn a word embedding as part of fitting a neural network model.

Next, let’s look at how we can efficiently learn a standalone embedding that we could later use in our neural network.

4. Train word2vec Embedding

In this section, we will discover how to learn a standalone word embedding using an efficient algorithm called word2vec.

A downside of learning a word embedding as part of the network is that it can be very slow, especially for very large text datasets.

The word2vec algorithm is an approach to learning a word embedding from a text corpus in a standalone way. The benefit of the method is that it can produce high-quality word embeddings very efficiently, in terms of space and time complexity.

The first step is to prepare the documents ready for learning the embedding.

This involves the same data cleaning steps from the previous section, namely splitting documents by their white space, removing punctuation, and filtering out tokens not in the vocabulary.

The word2vec algorithm processes documents sentence by sentence. This means we will preserve the sentence-based structure during cleaning.

We start by loading the vocabulary, as before.

```python
# load doc into memory
def load_doc(filename):
    # open the file as read only
    file = open(filename, 'r')
    # read all text
    text = file.read()
    # close the file
    file.close()
    return text

# load the vocabulary
vocab_filename = 'vocab.txt'
vocab = load_doc(vocab_filename)
vocab = vocab.split()
vocab = set(vocab)
```

Next, we define a function named doc_to_clean_lines() to clean a loaded document line by line and return a list of the cleaned lines.

```python
# turn a doc into clean tokens
def doc_to_clean_lines(doc, vocab):
    clean_lines = list()
    lines = doc.splitlines()
    for line in lines:
        # split into tokens by white space
        tokens = line.split()
        # remove punctuation from each token
        table = str.maketrans('', '', punctuation)
        tokens = [w.translate(table) for w in tokens]
        # filter out tokens not in vocab
        tokens = [w for w in tokens if w in vocab]
        clean_lines.append(tokens)
    return clean_lines
```

Next, we adapt the process_docs() function to load and clean all of the documents in a folder and return a list of all document lines.

The results from this function will be the training data for the word2vec model.

```python
# load all docs in a directory
def process_docs(directory, vocab, is_trian):
    lines = list()
    # walk through all files in the folder
    for filename in listdir(directory):
        # skip any reviews in the test set
        if is_trian and filename.startswith('cv9'):
            continue
        if not is_trian and not filename.startswith('cv9'):
            continue
        # create the full path of the file to open
        path = directory + '/' + filename
        # load and clean the doc
        doc = load_doc(path)
        doc_lines = doc_to_clean_lines(doc, vocab)
        # add lines to list
        lines += doc_lines
    return lines
```

We can then load all of the training data and convert it into a long list of ‘sentences’ (lists of tokens) ready for fitting the word2vec model.

    # load training data
    positive_docs = process_docs('txt_sentoken/pos', vocab, True)
    negative_docs = process_docs('txt_sentoken/neg', vocab, True)
    sentences = negative_docs + positive_docs
    print('Total training sentences: %d' % len(sentences))

We will use the word2vec implementation provided in the Gensim Python library, specifically the Word2Vec class.

For more on training a standalone word embedding with Gensim, see the post:

The model is fit when constructing the class. We pass in the list of clean sentences from the training data and then specify:

- The size of the embedding vector space (we use 100 again).

- The number of neighboring words to look at when learning how to embed each word in the training sentences (we use 5 neighbors).

- The number of threads to use when fitting the model (we use 8, but change this if you have more or fewer CPU cores).

- The minimum occurrence count for words to consider in the vocabulary (we set this to 1 as we have already prepared the vocabulary).

After the model is fit, we print the size of the learned vocabulary, which should match the size of our vocabulary in vocab.txt of 25,767 tokens.

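    from gensim.models import Word2Vec

    # train word2vec model
    # note: this call uses the Gensim 3.x argument names; in Gensim 4.0+
    # use vector_size=100 instead of size=100
    model = Word2Vec(sentences, size=100, window=5, workers=8, min_count=1)
    # summarize vocabulary size in model
    # note: in Gensim 4.0+ use model.wv.key_to_index instead of model.wv.vocab
    words = list(model.wv.vocab)
    print('Vocabulary size: %d' % len(words))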
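To make the window argument more concrete, the sketch below lists the (target, context) word pairs that a window of a given size exposes for one toy sentence. It is a simplified illustration in plain Python, not Gensim's implementation, which adds further details during training. The helper name context_pairs and the example sentence are hypothetical.

```python
def context_pairs(tokens, window):
    # pair every word with the words at most `window` positions away
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

# toy sentence, invented for illustration
toy_sentence = ['the', 'film', 'was', 'surprisingly', 'good']
pairs = context_pairs(toy_sentence, window=2)
print(len(pairs))
print(pairs[:2])
```

With a window of 2, 'good' is never paired with 'the' because they are four positions apart. A larger window lets more distant words influence each other's vectors.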

Finally, we save the learned embedding vectors to file by calling save_word2vec_format() on the model’s ‘wv’ (word vector) attribute. The embedding is saved in ASCII format with one word and vector per line.

The complete example is listed below.

    from string import punctuation
    from os import listdir
    from gensim.models import Word2Vec

    # load doc into memory
    def load_doc(filename):
        # open the file as read only
        file = open(filename, 'r')
        # read all text
        text = file.read()
        # close the file
        file.close()
        return text

    # turn a doc into clean tokens
    def doc_to_clean_lines(doc, vocab):
        clean_lines = list()
        lines = doc.splitlines()
        for line in lines:
            # split into tokens by white space
            tokens = line.split()
            # remove punctuation from each token
            table = str.maketrans('', '', punctuation)
            tokens = [w.translate(table) for w in tokens]
            # filter out tokens not in vocab
            tokens = [w for w in tokens if w in vocab]
            clean_lines.append(tokens)
        return clean_lines

    # load all docs in a directory
    def process_docs(directory, vocab, is_train):
        lines = list()
        # walk through all files in the folder
        for filename in listdir(directory):
            # skip any reviews in the test set
            if is_train and filename.startswith('cv9'):
                continue
            if not is_train and not filename.startswith('cv9'):
                continue
            # create the full path of the file to open
            path = directory + '/' + filename
            # load and clean the doc
            doc = load_doc(path)
            doc_lines = doc_to_clean_lines(doc, vocab)
            # add lines to list
            lines += doc_lines
        return lines

    # load the vocabulary
    vocab_filename = 'vocab.txt'
    vocab = load_doc(vocab_filename)
    vocab = vocab.split()
    vocab = set(vocab)

    # load training data
    positive_docs = process_docs('txt_sentoken/pos', vocab, True)
    negative_docs = process_docs('txt_sentoken/neg', vocab, True)
    sentences = negative_docs + positive_docs
    print('Total training sentences: %d' % len(sentences))

    # train word2vec model
    # note: Gensim 3.x argument names; in Gensim 4.0+ use vector_size=100
    model = Word2Vec(sentences, size=100, window=5, workers=8, min_count=1)
    # summarize vocabulary size in model
    # note: in Gensim 4.0+ use model.wv.key_to_index instead of model.wv.vocab
    words = list(model.wv.vocab)
    print('Vocabulary size: %d' % len(words))

    # save model in ASCII (word2vec) format
    filename = 'embedding_word2vec.txt'
    model.wv.save_word2vec_format(filename, binary=False)

Running the example loads 58,109 sentences from the training data and creates an embedding for a vocabulary of 25,767 words.

You should now have a file ‘embedding_word2vec.txt’ with the learned vectors in your current working directory.

    Total training sentences: 58109
    Vocabulary size: 25767
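Before moving on, it can help to check the saved file. In the word2vec text format, the first line holds the number of words and the vector size, and every following line holds a word followed by its values. The sketch below checks this on a tiny in-memory sample whose words and values are invented. The helper name read_word2vec_header is hypothetical.

```python
from io import StringIO

def read_word2vec_header(handle):
    # the header line is "<number of words> <vector size>"
    first = handle.readline().split()
    return int(first[0]), int(first[1])

# tiny stand-in for the saved file, values invented for illustration
sample = StringIO(
    "3 4\n"
    "film 0.1 0.2 0.3 0.4\n"
    "good 0.5 0.6 0.7 0.8\n"
    "bad -0.1 -0.2 -0.3 -0.4\n"
)

n_words, n_dims = read_word2vec_header(sample)
# the remaining lines are "<word> <value> <value> ..."
rows = [line.split() for line in sample]
consistent = len(rows) == n_words and all(len(r) == n_dims + 1 for r in rows)
print(n_words, n_dims, consistent)
```

To run the same check on the real file, replace the StringIO object with an open file handle for ‘embedding_word2vec.txt’.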

Next, let’s look at using these learned vectors in our model.

5. Use Pre-trained Embedding

In this section, we will use a pre-trained word embedding prepared on a very large text corpus.

We can use the pre-trained word embedding developed in the previous section and the CNN model developed in the section before that.

The first step is to load the word embedding as a dictionary of words to vectors. The word embedding was saved in so-called ‘word2vec’ format that contains a header line. We will skip this header line when loading the embedding.

The function below named load_embedding() loads the embedding and returns a dictionary of words mapped to the vectors in NumPy format.

    from numpy import asarray

    # load embedding as a dict
    def load_embedding(filename):
        # load embedding into memory, skip first line
        file = open(filename, 'r')
        lines = file.readlines()[1:]
        file.close()
        # create a map of words to vectors
        embedding = dict()
        for line in lines:
            parts = line.split()
            # key is string word, value is numpy array for vector
            embedding[parts[0]] = asarray(parts[1:], dtype='float32')
        return embedding

Now that we have all of the vectors in memory, we can order them in such a way as to match the integer encoding prepared by the Keras Tokenizer.

Recall that we integer encode the review documents prior to passing them to the Embedding layer. The integer maps to the index of a specific vector in the embedding layer. Therefore, it is important that we lay the vectors out in the Embedding layer such that the encoded words map to the correct vector.

The function get_weight_matrix() below takes the loaded embedding and the tokenizer.word_index vocabulary as arguments and returns a matrix with the word vectors in the correct locations.

    # create a weight matrix for the Embedding layer from a loaded embedding
    def get_weight_matrix(embedding, vocab):
        # total vocabulary size plus 0 for unknown words
        vocab_size = len(vocab) + 1
        # define weight matrix dimensions with all 0
        weight_matrix = zeros((vocab_size, 100))
        # step vocab, store vectors using the Tokenizer's integer mapping
        for word, i in vocab.items():
            weight_matrix[i] = embedding.get(word)
        return weight_matrix
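The row ordering is easier to see on a toy case. The sketch below builds a weight matrix for a three-word vocabulary with 3-dimensional vectors and then looks up an integer-encoded review, which is roughly what the Embedding layer does internally. The vectors, the word index, and the extra n_dims parameter are invented for illustration.

```python
from numpy import asarray, zeros

def get_weight_matrix(embedding, word_index, n_dims):
    # one row per word, plus row 0, which no word is mapped to
    weight_matrix = zeros((len(word_index) + 1, n_dims))
    for word, i in word_index.items():
        weight_matrix[i] = embedding[word]
    return weight_matrix

# toy embedding and toy word index (invented values);
# as with the Keras Tokenizer, word indexes start at 1
toy_embedding = {
    'good': asarray([0.5, 0.5, 0.0], dtype='float32'),
    'bad': asarray([-0.5, -0.5, 0.0], dtype='float32'),
    'film': asarray([0.0, 0.1, 0.9], dtype='float32'),
}
toy_word_index = {'film': 1, 'good': 2, 'bad': 3}

weights = get_weight_matrix(toy_embedding, toy_word_index, n_dims=3)

# "good film" encoded as integers and padded with zeros to length 4
encoded_review = [2, 1, 0, 0]
looked_up = weights[encoded_review]
print(looked_up.shape)
print(looked_up[0])
```

Row 2 of the matrix holds the vector for 'good' because the word index maps 'good' to 2. The padding value 0 selects the all-zero row.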

Now we can use these functions to create our new Embedding layer for our model.

    ...
    # load embedding from file
    raw_embedding = load_embedding('embedding_word2vec.txt')
    # get vectors in the right order
    embedding_vectors = get_weight_matrix(raw_embedding, tokenizer.word_index)
    # create the embedding layer
    embedding_layer = Embedding(vocab_size, 100, weights=[embedding_vectors], input_length=max_length, trainable=False)

Note that the prepared weight matrix embedding_vectors is passed to the new Embedding layer as an argument and that we set the ‘trainable’ argument to ‘False’ to ensure that the network does not try to adapt the pre-learned vectors as part of training the network.

We can now add this layer to our model. We also have a slightly different model configuration with a lot more filters (128) in the CNN model and a kernel that matches the 5 words used as neighbors when developing the word2vec embedding. Finally, the back-end of the model was simplified.

    # define model
    model = Sequential()
    model.add(embedding_layer)
    model.add(Conv1D(filters=128, kernel_size=5, activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(1, activation='sigmoid'))
    print(model.summary())

These changes were found with a little trial and error.
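As a rough check on what this configuration implies, the sketch below works out the layer output sizes and parameter counts by hand. It assumes the Keras defaults of 'valid' padding and stride 1 for Conv1D and a pooling stride equal to pool_size. The input length of 1317 is an assumed value used only for illustration, so substitute your own max_length. The helper name cnn_shapes is hypothetical.

```python
def cnn_shapes(max_length, embed_dim=100, filters=128, kernel_size=5, pool_size=2):
    # Conv1D with 'valid' padding and stride 1 shortens the sequence
    conv_steps = max_length - kernel_size + 1
    # MaxPooling1D with stride equal to pool_size
    pooled_steps = conv_steps // pool_size
    # Flatten turns (steps, filters) into one long feature vector
    flat_features = pooled_steps * filters
    # each filter has kernel_size * embed_dim weights plus one bias
    conv_params = kernel_size * embed_dim * filters + filters
    # single sigmoid output: one weight per feature plus one bias
    dense_params = flat_features + 1
    return {
        'conv_steps': conv_steps,
        'pooled_steps': pooled_steps,
        'flat_features': flat_features,
        'conv_params': conv_params,
        'dense_params': dense_params,
    }

# 1317 is an assumed sequence length, used only for illustration
shapes = cnn_shapes(max_length=1317)
for name, value in shapes.items():
    print('%s: %d' % (name, value))
```

These numbers can be compared against the table printed by model.summary(). Because the Embedding layer is frozen, its weights are reported there as non-trainable parameters.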

The complete code listing is provided below.

    from string import punctuation
    from os import listdir
    from numpy import array
    from numpy import asarray
    from numpy import zeros
    from keras.preprocessing.text import Tokenizer
    from keras.preprocessing.sequence import pad_sequences
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Flatten
    from keras.layers import Embedding
    from keras.layers.convolutional import Conv1D
    from keras.layers.convolutional import MaxPooling1D

    # load doc into memory
    def load_doc(filename):
        # open the file as read only
        file = open(filename, 'r')
        # read all text
        text = file.read()
        # close the file
        file.close()
        return text

    # turn a doc into clean tokens
    def clean_doc(doc, vocab):
        # split into tokens by white space
        tokens = doc.split()
        # remove punctuation from each token
        table = str.maketrans('', '', punctuation)
        tokens = [w.translate(table) for w in tokens]
        # filter out tokens not in vocab
        tokens = [w for w in tokens if w in vocab]
        tokens = ' '.join(tokens)
        return tokens

    # load all docs in a directory
    def process_docs(directory, vocab, is_train):
        documents = list()
        # walk through all files in the folder
        for filename in listdir(directory):
            # skip any reviews in the test set
            if is_train and filename.startswith('cv9'):
                continue
            if not is_train and not filename.startswith('cv9'):
                continue
            # create the full path of the file to open
            path = directory + '/' + filename
            # load the doc
            doc = load_doc(path)
            # clean doc
            tokens = clean_doc(doc, vocab)
            # add to list
            documents.append(tokens)
        return documents

    # load embedding as a dict
    def load_embedding(filename):
        # load embedding into memory, skip first line
        file = open(filename, 'r')
        lines = file.readlines()[1:]
        file.close()
        # create a map of words to vectors
        embedding = dict()
        for line in lines:
            parts = line.split()
            # key is string word, value is numpy array for vector
            embedding[parts[0]] = asarray(parts[1:], dtype='float32')
        return embedding

    # create a weight matrix for the Embedding layer from a loaded embedding
    def get_weight_matrix(embedding, vocab):
        # total vocabulary size plus 0 for unknown words
        vocab_size = len(vocab) + 1
        # define weight matrix dimensions with all 0
        weight_matrix = zeros((vocab_size, 100))
        # step vocab, store vectors using the Tokenizer's integer mapping
        for word, i in vocab.items():
            weight_matrix[i] = embedding.get(word)
        return weight_matrix

    # load the vocabulary
    vocab_filename = 'vocab.txt'
    vocab = load_doc(vocab_filename)
    vocab = vocab.split()
    vocab = set(vocab)

    # load all training reviews
    positive_docs = process_docs('txt_sentoken/pos', vocab, True)
    negative_docs = process_docs('txt_sentoken/neg', vocab, True)
    train_docs = negative_docs + positive_docs

    # create the tokenizer
    tokenizer = Tokenizer()
    # fit the tokenizer on the documents
    tokenizer.fit_on_texts(train_docs)

    # sequence encode
    encoded_docs = tokenizer.texts_to_sequences(train_docs)
    # pad sequences
    max_length = max([len(s.split()) for s in train_docs])
    Xtrain = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
    # define training labels
    ytrain = array([0 for _ in range(900)] + [1 for _ in range(900)])

    # load all test reviews
    positive_docs = process_docs('txt_sentoken/pos', vocab, False)
    negative_docs = process_docs('txt_sentoken/neg', vocab, False)
    test_docs = negative_docs + positive_docs
    # sequence encode
    encoded_docs = tokenizer.texts_to_sequences(test_docs)
    # pad sequences
    Xtest = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
    # define test labels
    ytest = array([0 for _ in range(100)] + [1 for _ in range(100)])

    # define vocabulary size (largest integer value)
    vocab_size = len(tokenizer.word_index) + 1

    # load embedding from file
    raw_embedding = load_embedding('embedding_word2vec.txt')
    # get vectors in the right order
    embedding_vectors = get_weight_matrix(raw_embedding, tokenizer.word_index)
    # create the embedding layer
    embedding_layer = Embedding(vocab_size, 100, weights=[embedding_vectors], input_length=max_length, trainable=False)

    # define model
    model = Sequential()
    model.add(embedding_layer)
    model.add(Conv1D(filters=128, kernel_size=5, activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(1, activation='sigmoid'))
    print(model.summary())
    # compile network
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(Xtrain, ytrain, epochs=10, verbose=2)
    # evaluate
    loss, acc = model.evaluate(Xtest, ytest, verbose=0)
    print('Test Accuracy: %f' % (acc*100))

Running the example shows that performance was not improved.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

In fact, performance was a lot worse. The results show that the training dataset was learned successfully, but evaluation on the test dataset was very poor, at just above 50% accuracy.

The poor test performance may be due to the chosen word2vec configuration or the chosen neural network configuration.

    ...
    Epoch 6/10
    2s - loss: 0.3306 - acc: 0.8778
    Epoch 7/10
    2s - loss: 0.2888 - acc: 0.8917
    Epoch 8/10
    2s - loss: 0.1878 - acc: 0.9439
    Epoch 9/10
    2s - loss: 0.1255 - acc: 0.9750
    Epoch 10/10
    2s - loss: 0.0812 - acc: 0.9928
    Test Accuracy: 53.000000

The weights in the embedding layer can be used as a starting point for the network, and adapted during the training of the network. We can do this by setting ‘trainable=True’ (the default) in the creation of the embedding layer.

Repeating the experiment with this change shows slightly better results, but still poor.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

I would encourage you to explore alternate configurations of the embedding and network to see if you can do better. Let me know how you do.

    ...
    Epoch 6/10
    4s - loss: 0.0950 - acc: 0.9917
    Epoch 7/10
    4s - loss: 0.0355 - acc: 0.9983
    Epoch 8/10
    4s - loss: 0.0158 - acc: 1.0000
    Epoch 9/10
    4s - loss: 0.0080 - acc: 1.0000
    Epoch 10/10
    4s - loss: 0.0050 - acc: 1.0000
    Test Accuracy: 57.500000
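Because a single run can be misleading, one way to compare configurations is to repeat the whole experiment several times and report the mean and standard deviation of the test accuracy. The sketch below shows only the bookkeeping. The run_once callable stands in for "build, fit, and evaluate the model, then return the test accuracy", and the scores fed to it are placeholder numbers for illustration, not measured results.

```python
from statistics import mean, stdev

def summarize_runs(run_once, n_repeats=5):
    # call the experiment several times and collect the scores
    scores = [run_once() for _ in range(n_repeats)]
    return mean(scores), stdev(scores), scores

# placeholder scores standing in for real runs (NOT measured results)
placeholder_scores = iter([53.0, 57.5, 55.0, 54.5, 56.0])

avg, spread, scores = summarize_runs(lambda: next(placeholder_scores), n_repeats=5)
print('Mean accuracy: %.2f (+/- %.2f) over %d runs' % (avg, spread, len(scores)))
```

In practice, run_once would wrap the model definition, the call to model.fit(), and the call to model.evaluate() from the complete example, so that each repeat starts from freshly initialized weights.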

It is possible to use pre-trained word vectors prepared on very large corpora of text data.

For example, both Google and Stanford provide pre-trained word vectors that you can download, trained with the efficient word2vec and GloVe methods respectively.

Let’s try to use pre-trained vectors in our model.

You can download pre-trained GloVe vectors from the Stanford webpage. Specifically, vectors trained on Wikipedia data:

- glove.6B.zip (822 Megabyte download)

Unzipping the file, you will find pre-trained embeddings for several different dimensions. We will load the 100-dimensional version in the file ‘glove.6B.100d.txt’.

The GloVe file does not contain a header line, so we do not need to skip the first line when loading the embedding into memory. The updated load_embedding() function is listed below.

    # load embedding as a dict
    def load_embedding(filename):
        # load embedding into memory (the GloVe file has no header line to skip)
        file = open(filename, 'r', encoding='utf-8')
        lines = file.readlines()
        file.close()
        # create a map of words to vectors
        embedding = dict()
        for line in lines:
            parts = line.split()
            # key is string word, value is numpy array for vector
            embedding[parts[0]] = asarray(parts[1:], dtype='float32')
        return embedding
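If you switch between the two file formats often, one option is a single loader that reads line by line and skips a word2vec-style header only when it finds one. The sketch below is one possible approach, not part of the original code. It uses plain Python lists in place of NumPy arrays to stay dependency-free, the helper name load_embedding_lines is hypothetical, and the sample data is invented.

```python
from io import StringIO

def load_embedding_lines(handle):
    embedding = {}
    for line_number, line in enumerate(handle):
        parts = line.split()
        if not parts:
            continue
        # a word2vec-style header has exactly two integer fields;
        # note this heuristic is ambiguous for 1-dimensional vectors
        if line_number == 0 and len(parts) == 2 and all(p.isdigit() for p in parts):
            continue
        # first field is the word, the rest are the vector values
        embedding[parts[0]] = [float(v) for v in parts[1:]]
    return embedding

# invented samples in the two layouts
glove_like = StringIO("the 0.1 0.2\nfilm 0.3 0.4\n")
word2vec_like = StringIO("2 2\nthe 0.1 0.2\nfilm 0.3 0.4\n")

from_glove = load_embedding_lines(glove_like)
from_word2vec = load_embedding_lines(word2vec_like)
print(from_glove == from_word2vec, sorted(from_glove))
```

Iterating over the file handle instead of calling readlines() avoids holding the whole text file in memory at once, which matters for the larger GloVe files.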

It is possible that the loaded embedding does not contain all of the words in our chosen vocabulary. As such, when creating the Embedding weight matrix, we need to skip words that do not have a corresponding vector in the loaded GloVe data. Below is the updated, more defensive version of the get_weight_matrix() function.

    # create a weight matrix for the Embedding layer from a loaded embedding
    def get_weight_matrix(embedding, vocab):
        # total vocabulary size plus 0 for unknown words
        vocab_size = len(vocab) + 1
        # define weight matrix dimensions with all 0
        weight_matrix = zeros((vocab_size, 100))
        # step vocab, store vectors using the Tokenizer's integer mapping
        for word, i in vocab.items():
            vector = embedding.get(word)
            if vector is not None:
                weight_matrix[i] = vector
        return weight_matrix
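Words without a pre-trained vector keep an all-zero row, so it is worth knowing how many there are. The sketch below reports the fraction of the vocabulary covered by a loaded embedding and lists the missing words. The helper name embedding_coverage and the toy data are invented for illustration.

```python
def embedding_coverage(word_index, embedding):
    # words in our vocabulary that have no pre-trained vector
    missing = sorted(w for w in word_index if w not in embedding)
    if not word_index:
        return 0.0, missing
    covered = len(word_index) - len(missing)
    return covered / len(word_index), missing

# toy vocabulary and toy embedding (invented values)
toy_word_index = {'film': 1, 'good': 2, 'schwarzenegger': 3, 'sooo': 4}
toy_embedding = {'film': [0.1], 'good': [0.2], 'schwarzenegger': [0.3]}

coverage, missing = embedding_coverage(toy_word_index, toy_embedding)
print('Coverage: %.0f%%, missing: %s' % (coverage * 100, missing))
```

With the real data you would pass tokenizer.word_index and the dictionary returned by load_embedding(). A low coverage figure suggests the pre-trained vocabulary is a poor match for the reviews.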

We can now load the GloVe embedding and create the Embedding layer as before.

    # load embedding from file
    raw_embedding = load_embedding('glove.6B.100d.txt')
    # get vectors in the right order
    embedding_vectors = get_weight_matrix(raw_embedding, tokenizer.word_index)
    # create the embedding layer
    embedding_layer = Embedding(vocab_size, 100, weights=[embedding_vectors], input_length=max_length, trainable=False)

We will use the same model as before.

The complete example is listed below.

    from string import punctuation
    from os import listdir
    from numpy import array
    from numpy import asarray
    from numpy import zeros
    from keras.preprocessing.text import Tokenizer
    from keras.preprocessing.sequence import pad_sequences
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Flatten
    from keras.layers import Embedding
    from keras.layers.convolutional import Conv1D
    from keras.layers.convolutional import MaxPooling1D

    # load doc into memory
    def load_doc(filename):
        # open the file as read only
        file = open(filename, 'r')
        # read all text
        text = file.read()
        # close the file
        file.close()
        return text

    # turn a doc into clean tokens
    def clean_doc(doc, vocab):
        # split into tokens by white space
        tokens = doc.split()
        # remove punctuation from each token
        table = str.maketrans('', '', punctuation)
        tokens = [w.translate(table) for w in tokens]
        # filter out tokens not in vocab
        tokens = [w for w in tokens if w in vocab]
        tokens = ' '.join(tokens)
        return tokens

    # load all docs in a directory
    def process_docs(directory, vocab, is_train):
        documents = list()
        # walk through all files in the folder
        for filename in listdir(directory):
            # skip any reviews in the test set
            if is_train and filename.startswith('cv9'):
                continue
            if not is_train and not filename.startswith('cv9'):
                continue
            # create the full path of the file to open
            path = directory + '/' + filename
            # load the doc
            doc = load_doc(path)
            # clean doc
            tokens = clean_doc(doc, vocab)
            # add to list
            documents.append(tokens)
        return documents

    # load embedding as a dict
    def load_embedding(filename):
        # load embedding into memory (the GloVe file has no header line to skip)
        file = open(filename, 'r', encoding='utf-8')
        lines = file.readlines()
        file.close()
        # create a map of words to vectors
        embedding = dict()
        for line in lines:
            parts = line.split()
            # key is string word, value is numpy array for vector
            embedding[parts[0]] = asarray(parts[1:], dtype='float32')
        return embedding

    # create a weight matrix for the Embedding layer from a loaded embedding
    def get_weight_matrix(embedding, vocab):
        # total vocabulary size plus 0 for unknown words
        vocab_size = len(vocab) + 1
        # define weight matrix dimensions with all 0
        weight_matrix = zeros((vocab_size, 100))
        # step vocab, store vectors using the Tokenizer's integer mapping
        for word, i in vocab.items():
            vector = embedding.get(word)
            if vector is not None:
                weight_matrix[i] = vector
        return weight_matrix

    # load the vocabulary
    vocab_filename = 'vocab.txt'
    vocab = load_doc(vocab_filename)
    vocab = vocab.split()
    vocab = set(vocab)

    # load all training reviews
    positive_docs = process_docs('txt_sentoken/pos', vocab, True)
    negative_docs = process_docs('txt_sentoken/neg', vocab, True)
    train_docs = negative_docs + positive_docs

    # create the tokenizer
    tokenizer = Tokenizer()
    # fit the tokenizer on the documents
    tokenizer.fit_on_texts(train_docs)

    # sequence encode
    encoded_docs = tokenizer.texts_to_sequences(train_docs)
    # pad sequences
    max_length = max([len(s.split()) for s in train_docs])
    Xtrain = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
    # define training labels
    ytrain = array([0 for _ in range(900)] + [1 for _ in range(900)])

    # load all test reviews
    positive_docs = process_docs('txt_sentoken/pos', vocab, False)
    negative_docs = process_docs('txt_sentoken/neg', vocab, False)
    test_docs = negative_docs + positive_docs
    # sequence encode
    encoded_docs = tokenizer.texts_to_sequences(test_docs)
    # pad sequences
    Xtest = pad_sequences(encoded_docs, maxlen=max_length, padding='post')
    # define test labels
    ytest = array([0 for _ in range(100)] + [1 for _ in range(100)])

    # define vocabulary size (largest integer value)
    vocab_size = len(tokenizer.word_index) + 1

    # load embedding from file
    raw_embedding = load_embedding('glove.6B.100d.txt')
    # get vectors in the right order
    embedding_vectors = get_weight_matrix(raw_embedding, tokenizer.word_index)
    # create the embedding layer
    embedding_layer = Embedding(vocab_size, 100, weights=[embedding_vectors], input_length=max_length, trainable=False)

    # define model
    model = Sequential()
    model.add(embedding_layer)
    model.add(Conv1D(filters=128, kernel_size=5, activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(1, activation='sigmoid'))
    print(model.summary())
    # compile network
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    # fit network
    model.fit(Xtrain, ytrain, epochs=10, verbose=2)
    # evaluate
    loss, acc = model.evaluate(Xtest, ytest, verbose=0)
    print('Test Accuracy: %f' % (acc*100))

Running the example shows better performance.

Note: Your results may vary given the stochastic nature of the algorithm or evaluation procedure, or differences in numerical precision. Consider running the example a few times and compare the average outcome.

Again, the training dataset is easily learned and the model achieves 76% accuracy on the test dataset. This is good, but not as good as using a learned Embedding layer.

The improvement over our own word2vec embedding may be because the GloVe vectors are of higher quality, having been trained on more data and/or with a slightly different training process.

    ...
    Epoch 6/10
    2s - loss: 0.0278 - acc: 1.0000
    Epoch 7/10
    2s - loss: 0.0174 - acc: 1.0000
    Epoch 8/10
    2s - loss: 0.0117 - acc: 1.0000
    Epoch 9/10
    2s - loss: 0.0086 - acc: 1.0000
    Epoch 10/10
    2s - loss: 0.0068 - acc: 1.0000
    Test Accuracy: 76.000000

In this case, it seems that learning the embedding as part of the learning task may be a better direction than using a specifically trained embedding or a more general pre-trained embedding.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

- A Sentimental Education: Sentiment Analysis Using Subjectivity Summarization Based on Minimum Cuts, 2004.

APIs

- collections API – Container datatypes

- Tokenizer Keras API

- Embedding Keras API

- Gensim Word2Vec API

- Gensim WordVector API

Embedding Methods

Related Posts

- Using pre-trained word embeddings in a Keras model, 2016.

- Implementing a CNN for Text Classification in TensorFlow, 2015.

Summary

In this tutorial, you discovered how to develop word embeddings for the classification of movie reviews.

Specifically, you learned:

- How to prepare movie review text data for classification with deep learning methods.

- How to learn a word embedding as part of fitting a deep learning model.

- How to learn a standalone word embedding and how to use a pre-trained embedding in a neural network model.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Note: This post is an excerpt chapter from: “Deep Learning for Natural Language Processing“. Take a look, if you want more step-by-step tutorials on getting the most out of deep learning methods when working with text data.

Thank you for the interesting work.

Jason, help me please.

Imagine that we have a dataset which contains reviews of very different lengths (from just two words, “good film”, to a long description, “I remember the first work of this director….”).

Which length should we choose when we talk about “pad_sequences”?

All reviews must be padded to the same length.

You can use the longest review, you can use the average length, etc. Try a few and see what works best.
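As a rough illustration of these options, the sketch below pads a few toy integer-encoded reviews to either the longest or the average length. It is a plain-Python stand-in, not the Keras pad_sequences() function: it pads and truncates at the end, whereas pad_sequences() truncates at the start by default. The helper name pad_or_truncate and the toy sequences are invented.

```python
def pad_or_truncate(sequence, length, pad_value=0):
    # append padding, then cut to exactly `length` items
    return (sequence + [pad_value] * length)[:length]

# toy integer-encoded reviews of different lengths (invented values)
toy_encoded = [[4, 7], [3, 9, 2, 8, 1, 5], [6, 2, 2, 1]]

lengths = [len(s) for s in toy_encoded]
longest = max(lengths)
average = round(sum(lengths) / len(lengths))

padded_longest = [pad_or_truncate(s, longest) for s in toy_encoded]
padded_average = [pad_or_truncate(s, average) for s in toy_encoded]
print(longest, padded_longest)
print(average, padded_average)
```

Padding to the longest review keeps every word but makes all inputs as long as the longest one. Padding to a shorter length saves computation but discards the tail of long reviews.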

Hi Jason

I think it’s difficult to distinguish “good” and “bad” using word2vec, but it’s important in sentiment analysis anyway.

Is there a good method to solve this? Thank you, and sorry for my poor English.

Generally, the model will minimize a loss function. We want the loss to be low.

I agree, it feels like clustering to some degree.

Thanks for the feedback.

Jason, sorry, I don’t understand one thing. Help me please.

I read your work on the “Word Embeddings” technique, and I saw that when you use an Embedding layer with a convolutional layer as a feature extractor, you always use the 1D version.

Since the machine sees the sentence in the form of a matrix, why don’t we use Conv2D?

Good question.

A sequence of words is a one-dimensional vector of integers. The embedding maps each integer onto a real-valued vector, so now we have a sequence of vectors. It is still a one-dimensional sequence; it just so happens that each item in the sequence has many features.

It is not like an image that has spatial relationships in two dimensions.

Does that help?

Thanks for the clear explanation, Jason.

I am trying to find the best way to use the knowledge we have in the Embedding layer.

I’m glad it helped Alexander.

Excellent post. Thanks.

Thanks.

Thank you for the amazing posts. Eagerly waiting for your new book launch. I had one query on the train/test split. Rather than defining the X and y train/test sets by hand, can’t we just use the split function from sklearn? Doesn’t it automatically split the entire dataset into train and test? Thank you.

Thanks Amit!

Yes, you can use sklearn if you wish.

Hi jason,

Sorry once again I am asking the same question which I mentioned in some other blog that what if I am using my own dataset. How can I make my dataset compatible to the above pre-built dataset ? I have a dataset of tweets so how can i make my dataset of positive and negative tweets and then use them as a test and train set ?

The post above shows how to prepare data.

Also, this post shows how to clean text:

https://machinelearningmastery.com/clean-text-machine-learning-python/

Thank you Jason for a valuable article! It is an exploration of its own.

I also get better results if the Embedding layer is learned as part of the model, rather than using predefined embeddings.

At first I was very excited about using GloVe embeddings, yet they give quite poor results. I think it is because real-world texts contain different word forms (for example: cat, cats), while GloVe has embeddings only for a single form of each word (for example: cat). Even a small deviation is considered a different word, and is thus ignored. Probably if this “word-forms” problem is solved, GloVe could show tremendous results…

You may be right in the general case. It is also critical that the vocab/word usage/etc is a good fit for the problem being solved.

Hi,

I am following this example to classify malicious urls. I am able to filter out words, encode and pad my training and testing data. However, when I apply the training data to the model, I get errors.

Do you have the code up on github? I’d like to run through the code to debug.

Thanks!

Sorry, I cannot help you debug your code.

Hi, This is very helpful thanks! Is there a java version of this please?

Thanks

Sorry, I do not have Java versions at this stage.

How does this function

“encoded_docs = tokenizer.texts_to_sequences(test_docs)”

index the test vocabulary when we didn’t call

“tokenizer.fit_on_texts(train_docs)”

on test_docs?

It uses the vocabulary learned from the training data to index words in the test data. Words that were not seen in the training data are dropped from the encoded sequence (unless the Tokenizer was created with an oov_token).

Thanks, really appreciate your help.

can we use CNN with multiclass problem?

Yes.

Why use a CNN when there are lots of other deep learning algorithms?

CNN is effective for some problems, e.g. works very well for text classification tasks compared to other methods (on average).

Hi Jason, thanks for your sharing, For word embedding layer in keras, what is the window size? For example, in word2vec, we can set the window size to 5, but in keras embedding layer, there is no parameter for that.

The window size is the number of words around each word to consider, e.g. the context, when building the model.

Keras does not need this, as the embedding is built via back-propagating error during training, ideally using BPTT which builds up error over input time steps.

From my experiments, I find the Keras approach results in better skill than using a prebuilt model. It should be the other way around according to intuition, but not in my experience.

I read your blog about building a language model with an LSTM, and I tried working with pre-trained embedding weights. It actually results in lower perplexity, but a slightly lower BLEU score. So, it is hard for me to say which one is better.

Thanks for your blogs! I learned a lot from them.

Nice work!

Dear Jason,

In the above post, you used a CNN model after the data preparation and embedding layer training tasks. Could you kindly explain how I can perform

(i) Data Preparation

(ii) Train Embedding Layer

for use with LSTM recurrent neural networks instead of a CNN? Or how can I use an LSTM recurrent neural network instead of a CNN after these two tasks?

Thanks for your time!

Here is an example:

https://machinelearningmastery.com/sequence-classification-lstm-recurrent-neural-networks-python-keras/

Thanks Sir, but I have an issue: in the provided link, training of the embedding layer is not performed. Could you kindly guide me on how to train an embedding layer for an LSTM RNN model?

Sorry, I cannot prepare a specific case for you.

You can use a code example that shows how to use an embedding on the front end and combine it with the tutorial.

Thanks Sir for your guidance.

You’re welcome.

Hi Jason,

I am so grateful for the practical tutorial. I am a beginner at Keras and deep learning. I applied it to a dataset about 2 diseases. I want to train an RNN with GloVe for classification, but the output is as follows:

precision 0.0

FPR 0.9999999999807433

TPR 0.0

FNR 0.999999999980609

specificity 0.0

accuracy 0.0

F_score 0.0

I think this is just because GloVe was not pre-trained on a disease dataset?

Am I right? Or does it need to train for many epochs?

thank you for guiding me.

Best

Maram

There are many possible reasons. Perhaps this framework will help you debug your model:

https://machinelearningmastery.com/improve-deep-learning-performance/

Hi Jason,

That was awesome, like the other tutorials. I do not have separate train and test datasets, as I want to select the test dataset randomly each time. I have already done this with this statement:

‘from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(x_datasetpad,y_datasetpad,stratify=y_datasetpad,test_size=0.25)’

according to your tutorial, you set ytest in this way :

ytest = array([0 for _ in range(100)] + [1 for _ in range(100)])

I have already created x_dataset, but I do not know how I can create y_dataset.

Please guide me Jason, I really need it.

Sarah

The output will be the sentiment, or an integer representing the sentiment classes for each input review.
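As a minimal sketch of that answer, assuming the reviews were loaded as two separate lists (negative and positive), the labels are simply one integer per review, in the same order as the reviews. The names `make_labels`, `negative_docs` and `positive_docs` are hypothetical placeholders, and the toy strings stand in for real reviews.

```python
def make_labels(negative_docs, positive_docs):
    """Return (docs, labels): 0 for every negative review, 1 for every positive one."""
    docs = list(negative_docs) + list(positive_docs)
    labels = [0] * len(negative_docs) + [1] * len(positive_docs)
    return docs, labels


# toy stand-in data; real reviews would be loaded from disk
negative_docs = ["boring plot", "bad acting", "waste of time"]
positive_docs = ["great film", "loved it"]

docs, labels = make_labels(negative_docs, positive_docs)
print(labels)  # [0, 0, 0, 1, 1]
```

The `labels` list can then be converted to an array and passed as the second argument to train_test_split, next to the encoded and padded version of `docs`, so that each split keeps reviews and labels aligned.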

Hello Jason,

I was following your awesome tutorial but in the end I was asking myself: How can I predict a new sentence represented as a string for its sentiment? I think there will be some more people who would like to test their trained network for some example inputs. Could you maybe add such a section to this tutorial or give a short explanation on how to do this exactly? In such a way:

exampleSentence = 'The weather is very good today'

prediction = trainedModel.predict(exampleSentence)

print(sentimentForPrediction(prediction))

Kind regards

Hegg

Yes, you can use:

Hi Jason,

I tried implementing one of your codes for predicting the sentiment for a sentence.

First I trained the model the way you have. I am getting an accuracy of 86% on test data.

This is the function that I used for predicting on a new sentence.

# classify a review as negative (0) or positive (1)
def predict_sentiment(review, vocab, tokenizer, model):
    # clean
    tokens = clean_doc(review)
    # filter by vocab
    tokens = [w for w in tokens if w in vocab]
    # convert to line
    line = ' '.join(tokens)
    #print(line)
    # encode
    tokenizer.fit_on_texts(line)
    encoded = tokenizer.texts_to_sequences(line)
    #print(encoded)
    # prediction
    max_length = max([len(s.split()) for s in line])
    pred = pad_sequences(encoded, maxlen=1317, padding='post')
    #print(pred)
    yhat = model.predict(pred, verbose=0)
    return round(yhat[0,0])

# test positive text
text = 'this is a good movie'
print(predict_sentiment(text, vocab, tokenizer, model))

# test negative text
text = 'This is a bad movie.'
print(predict_sentiment(text, vocab, tokenizer, model))

Somehow, I am getting a ‘0’ in both cases. I tried other test cases as well, but it always gives a zero.

I can’t find where I am going wrong.

Any insights would be really helpful.

Thanks!

Sorry, I don’t have the capacity to debug your code. Perhaps try Stack Overflow?

Let us say I have a raw text which I want to predict using model.predict().

The first step would be to assign each word in the raw text the same index value that was used when the model was trained.

Then we would have to convert it to sequences (using texts_to_sequences()).

Then comes the padding (max_length would be the same as that of the training set).

And then this will be given to model.predict().

Is that correct?

Yes. All preparation performed on the training data must also be performed on any new data when you want to make a prediction with the trained model.
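The steps listed above can be sketched roughly as follows. This is only an illustration under stated assumptions: `encode_review` is a hypothetical helper, and `ToyTokenizer` is a stand-in used here so the snippet runs without Keras; it mimics the way the Keras Tokenizer's texts_to_sequences() skips unknown words when no oov_token is set. Two details are easy to get wrong: the tokenizer fitted on the training data is reused as-is (it is not fitted again on the new text), and the review is passed as a one-element list rather than as a bare string.

```python
def encode_review(review, tokenizer, max_length):
    """Encode one raw review with an already-fitted tokenizer and pad it with zeros."""
    # pass a list holding one string; a bare string would be treated as a list of characters
    encoded = tokenizer.texts_to_sequences([review])[0]
    # keep at most max_length tokens, then right-pad with 0 (the reserved padding index)
    encoded = encoded[:max_length]
    return encoded + [0] * (max_length - len(encoded))


class ToyTokenizer:
    """Hypothetical stand-in for a fitted tokenizer: maps known words to integers."""

    def __init__(self, word_index):
        self.word_index = word_index

    def texts_to_sequences(self, texts):
        return [
            [self.word_index[w] for w in text.lower().split() if w in self.word_index]
            for text in texts
        ]


tokenizer = ToyTokenizer({"good": 1, "bad": 2, "movie": 3})
row = encode_review("this is a good movie", tokenizer, max_length=6)
print(row)  # [1, 3, 0, 0, 0, 0]
```

With the real objects, max_length would be the value used for the training data, and the padded row would be wrapped in a 2D array (one row per review) before being passed to model.predict().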

I tried to predict the same way as mentioned above and, irrespective of the review, I am getting a value around 0.5100. What should I do?

Perhaps try re-fitting the model from scratch?

Perhaps try alternate configurations of the model or training?

Perhaps try different data when making predictions?

Hi Jason,

Thank you so much for your great post. I always enjoy your work and they help me a lot.

My question is: how can I handle other features and the text feature at the same time in my classification? I mean, if I have other features for each review, like the number of words, the number of punctuation marks, category, etc., how can I use them in my classification?

Best

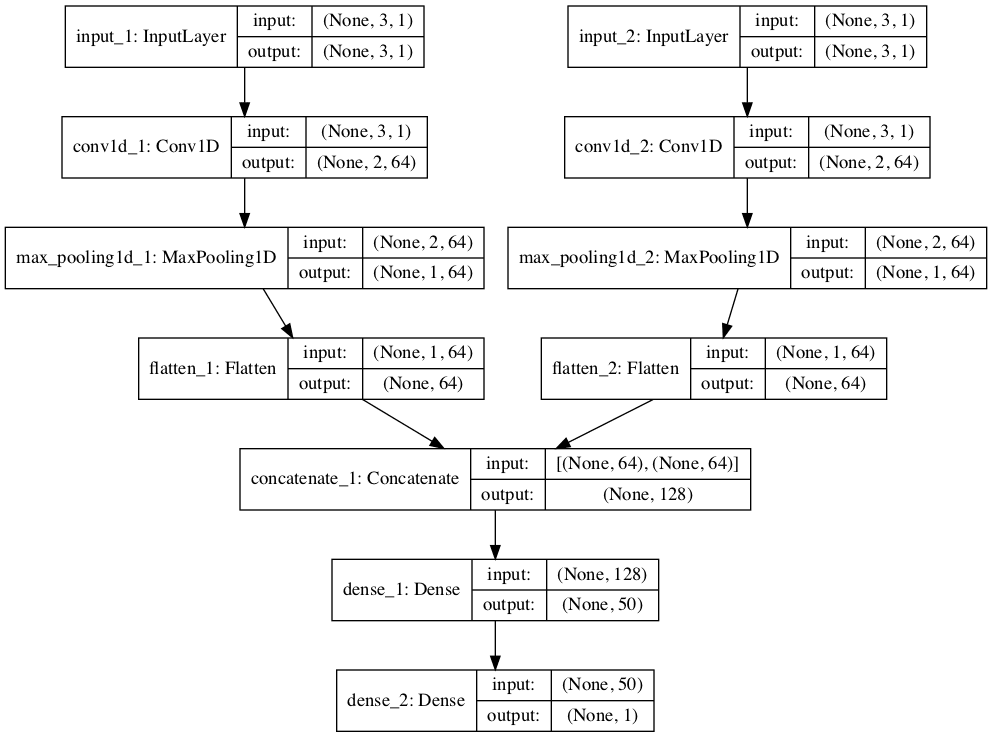

Great question, perhaps a two-headed model would be appropriate. One head for text data and one with a vector summarizing the document.

This tutorial will help:

https://machinelearningmastery.com/keras-functional-api-deep-learning/

Hi Jason, awesome article. Can you please tell me which Python version I can use to run the code?

As NLTK won’t work on Python 2.7 and Keras is not available for Python 3.6.

Looking forward to your response.

Thanks,

Anjali Batra

Python 3.5 or 3.6. I believe Python 2.7 would work too, perhaps with minor tweaks.

Hi Jason,

Great tutorial, helped a lot.

I am working on a multi-class classification problem. Could you help me with how I would have to tweak the network to make it an 8-class classifier?

Thank You

Change the number of nodes in the output layer to 8, the transfer function to softmax and the loss function to categorical cross entropy.
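To make those three changes more concrete, here is a small plain-Python sketch of what an 8-node softmax output and the categorical cross-entropy loss compute for one example. It is an illustration only, not the tutorial's code; in Keras the equivalent settings would be an output layer of Dense(8, activation='softmax'), loss='categorical_crossentropy', and one-hot encoded targets. The helper names below are hypothetical.

```python
import math


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot target vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(target, probs):
    """Negative log-probability assigned to the true class."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


num_classes = 8
scores = [0.0] * num_classes
scores[3] = 2.0  # pretend the network favours class 3

probs = softmax(scores)
target = one_hot(3, num_classes)
loss = categorical_crossentropy(target, probs)
predicted = probs.index(max(probs))
print(predicted)  # 3
```

The predicted class is the index of the largest probability, which replaces the 0.5 threshold used in the binary case.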

Hi Jason,

I have a question. To develop the vocab, do I have to use both training and testing reviews?

Thanks a lot.

Ideally, you would just use training data to develop your vocab when evaluating the model.

You would use all data when preparing the final model.

It really comes down to your project goals.
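A rough sketch of the first option, where the vocabulary comes from the training reviews only and is then used to filter both sets. The helper names and the `min_occurrence` cut-off are assumptions made for illustration, and the toy documents stand in for real reviews.

```python
from collections import Counter


def build_vocab(train_docs, min_occurrence=2):
    """Collect words that appear at least min_occurrence times in the training docs."""
    counts = Counter()
    for doc in train_docs:
        counts.update(doc.split())
    return {word for word, count in counts.items() if count >= min_occurrence}


def filter_doc(doc, vocab):
    """Drop every word that is not in the vocabulary."""
    return " ".join(word for word in doc.split() if word in vocab)


train_docs = ["good movie good cast", "bad movie bad plot"]
test_docs = ["good plot twist movie"]

vocab = build_vocab(train_docs)  # the test docs are never counted
print(sorted(vocab))  # ['bad', 'good', 'movie']
print(filter_doc(test_docs[0], vocab))  # good movie
```

For a final model, the same `build_vocab` call would simply be given all available reviews.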

Dear Jason,

Absolutely loved the tutorial. Nevertheless, I had a question regarding the convolution. You already answered a question regarding that, but I still have a doubt. You mentioned that the output of the embedding layer is a one-dimensional sequence.

But the output shape of the embedding layer is (None, 1317, 100). I know the “None” refers to the batch size, i.e. the number of training examples. But how is convolution possible on a (1317, 100) input with a one-dimensional filter of size 8? I want to understand it in depth. How are these values getting multiplied? Since the output shape of the convolutional layer is (1310, 32), the only way I can make sense of it is that every filter has 100 values that get multiplied with the vector of each word in the sequence. Kindly correct me if I am wrong.

Regards,

Arghyadeep

No, the input is a sequence of integers. The output is a sequence of vectors.

Yes, absolutely! But I believe that is for the embedding layer.

But my question is about the convolution layer. How is the input shape (None, 1317, 100) getting converted to (None, 1310, 32)?

The 32 comes from the number of filters.

1317 becomes 1310 because of the kernel size of 8: 1317 - 8 + 1 = 1310.

What about the number 100?

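The 100 is the length of each word vector. Each filter covers all 100 values for each of the 8 words in its window, so that dimension is summed out and does not appear in the output.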
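The shape arithmetic in this exchange can be checked with a small plain-Python sketch of a one-dimensional convolution without padding. It uses toy sizes (10 words, 4-dimensional vectors, kernel size 3, 2 filters) in place of the tutorial's 1317, 100, 8 and 32, and it leaves out the bias and activation, so it is an illustration of the idea rather than what Keras does internally.

```python
def conv1d_output_length(steps, kernel_size):
    """Number of window positions when no padding is used."""
    return steps - kernel_size + 1


def conv1d_valid(sequence, filters):
    """sequence: steps x dim; filters: list of kernels, each kernel_size x dim."""
    kernel_size = len(filters[0])
    out_len = conv1d_output_length(len(sequence), kernel_size)
    output = []
    for t in range(out_len):
        window = sequence[t:t + kernel_size]  # kernel_size consecutive word vectors
        row = []
        for kernel in filters:
            total = 0.0
            # every filter weight multiplies one value of one word vector in the window
            for k in range(kernel_size):
                for d in range(len(kernel[k])):
                    total += window[k][d] * kernel[k][d]
            row.append(total)  # one number per filter per position
        output.append(row)
    return output


sequence = [[float(t + d) for d in range(4)] for t in range(10)]
filters = [[[1.0] * 4 for _ in range(3)], [[0.5] * 4 for _ in range(3)]]

out = conv1d_valid(sequence, filters)
print(len(out), len(out[0]))  # 8 2
print(conv1d_output_length(1317, 8))  # 1310
```

Scaled up to the tutorial's sizes, each of the 32 filters would hold 8 x 100 weights and produce one value at each of the 1310 positions.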

Thanks 🙂

Jason,

In the ‘Training Embedding Layer’ code, why did you add a 1 to the size of vocabulary, before passing the size of the vocabulary to the Embedding? You say, ‘….plus one for unknown words…’ – but by definition there are no unknown words in the training data. When I run your code without adding the one, it croaks at the point when it is fitting the training data. It runs fine when I add the 1 to the vocabulary. I am not certain why, and hoping you can elaborate.

There can be unknown words when using the model, e.g. test data or new data.

Thanks Jason. If I understood your response correctly…Unknown words in the test data or any new data, are not ascribed an integer encoding, because we are using the same tokenizer that was built for the training data, when working with these new data sets as well. So unknown words will not show up when we run these new datasets. I am still unsure why we need to add a 1, since again by definition the training data has no unknown words. I am missing something, esp. since when 1 is not added the code croaks at the fitting of training data stage, and well before we work with test/other datasets. One difference from your code is that I am using a MLP instead of CNN to train. I shall attempt to dig into this more, however if you have additional insight, please do reply.

Yes. We add one because known words start at 1, not 0, i.e. the encoding is 1-offset, with 0 reserved for padding.
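A small plain-Python sketch of why the extra row is needed. `build_word_index` is a hypothetical helper that numbers words from 1 in order of first appearance; the Keras Tokenizer orders words by frequency instead, but the 1-based numbering is the only part that matters here. The nested lists stand in for the embedding weight table.

```python
def build_word_index(docs):
    """Assign integers starting at 1, leaving 0 free for padding."""
    word_index = {}
    for doc in docs:
        for word in doc.split():
            if word not in word_index:
                word_index[word] = len(word_index) + 1
    return word_index


word_index = build_word_index(["good movie", "bad movie"])
largest = max(word_index.values())

# a table with len(word_index) rows only has rows 0 .. len(word_index) - 1
too_small = [[0.0] * 4 for _ in range(len(word_index))]
# one extra row makes the largest word index a valid row number
big_enough = [[0.0] * 4 for _ in range(len(word_index) + 1)]

try:
    too_small[largest]
    lookup_failed = False
except IndexError:
    lookup_failed = True

print(word_index)  # {'good': 1, 'movie': 2, 'bad': 3}
print(lookup_failed)  # True
```

This matches the behaviour described in the question: the failure shows up while fitting on the training data, because the word with the largest index already falls outside a table that is one row too small.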

Thank you. Of course. I should have seen the offset.

Hi Jason,

Thanks for sharing. I have trained a CNN model with pre-trained GloVe vectors to do text classification on resume data. I have the result in vector form, and I wonder how we could transfer it back to text so that we could interpret it with actual meanings. Or is there a better way to do it?

Thanks,

I’m not sure I follow. You can plot words from the embedding, but it won’t tell you much about the resumes.

Hello, I have two questions:

– You explain (3. Train Embedding Layer) and (4. Train word2vec Embedding).

Must we choose one of those methods, or does one complement the other?

– Also, if I want to give a new sentence and classify it (positive or negative), how can I do that?

You can use a final model to make a prediction as follows:

yhat = model.predict([asdasdasd])

When I make a prediction for a new sentence, for example:

phrase = "very bad feeling"

tokens = tokenizer.texts_to_sequences([phrase])

model.predict(np.array(tokens))

It gives me:

array([[0.99999934]], dtype=float32)

How can I know whether it is a positive or negative sentiment?

You can use predict_classes() to get the 0/1 value, or you can interpret the probability directly.

I think values close to 0 are negative and values close to 1 are positive.
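Interpreting the probability directly can be as simple as applying a threshold. The sketch below assumes that class 1 means positive, as in the tutorial's labels, and that 0.5 is an acceptable cut-off; `to_class` and `to_label` are hypothetical helper names. It may also be useful where the Keras version in use no longer provides predict_classes().

```python
def to_class(probability, threshold=0.5):
    """Map a sigmoid output to the integer class 0 or 1."""
    return 1 if probability >= threshold else 0


def to_label(probability, threshold=0.5):
    """Map a sigmoid output to a readable sentiment label."""
    return "positive" if to_class(probability, threshold) == 1 else "negative"


yhat = [[0.99999934]]  # same layout as the prediction shown above: one row, one value
probability = yhat[0][0]
print(to_class(probability), to_label(probability))  # 1 positive
```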

Is word2vec better than a simple Embedding layer?

They are different, perhaps try both and see what works best for your specific dataset.

Hi,

Really good tutorial, but i need some clarifications.

In this case you use a word2vec model with the RNN model. That RNN model is used to predict the sentiment of words. What is the exact purpose of the word2vec model that you created? Why are these word embeddings really helpful for this analysis?

It creates a distributed representation of words, more here:

https://machinelearningmastery.com/what-are-word-embeddings/

Hi Jason, great writeup. I’m trying to get an intuitive understanding of how these higher dimensional representations effectively cluster. It seems slightly magical at the moment. Is it because of the constant training to minimize the loss? And would backprop work downwards toward the embedding layer and modify the weights in those word vectors accordingly? If this is correct, I’m trying to understand how this leads to clustering.

The result is that similar inputs map to similar vectors or points in the n-dimensional space.

Training pushes similar things together as an efficiency – it is a lower cost to the loss function to group the vectors.

It is amazing. Truly!

Super helpful. Last few questions just to get a deeper understanding of what’s going on.

Question 1: In order for this magic to really work, the training examples must contain data related to one another? And some of this data needs to be shared across other training examples? I can envision a graph of data where similar data are already connected to each other and the algorithm is telling us how they’re clustered and their strengths by filling in the vector values by fitting a function. Am I on the right track?

Question 2: At a higher level, should we think of using embeddings when we want to find similarities or create clusters / groupings around data? I’m trying to understand when best to think of converting categorical fields to embeddings. For example, how would you treat dates or categories in structured data using deep learning?

Thanks Jason.

Yes, generally richer training data is desired, more for the model to figure out. This is hard, so we solve it with volume, thousands or millions of examples.

Yes, when you want the model to learn how the categories should be grouped/best-grouped in the context of the problem that you’re solving. Days, months, stores, etc. These are really good examples where embeddings can help. It’s a new field, and there are few papers other than the occasional Kaggle write-up. I hope to go deep on this later in the year.

Thanks Jason. Few more queries as I study more.

— The examples I’ve seen thus far to create embeddings have been in two forms:

1) A collaborative filtering setup (Netflix etc.) that takes two embeddings, takes their dot product, and passes the result through some layers to produce an output. The output is then compared to the label, and backprop works to fit the output by adjusting the embedding weights.

2) In this case, the task is to compute say some sales projection but the model takes in as input some embeddings that represent some categorical variables, like day of month or year etc..

I need some help understanding how meaningful weights are learnt in 2) for the embeddings of these categorical variables. I ask because the loss function in 2) is directly related to the sales projection, as opposed to say 1) in which the objective is to get the dot product of the embeddings as close as possible to the label.

Can you shed some light on 2) and how these embeddings of categorical variables get learnt in more structured neural net problems? How do the weights become meaningful?

It learns how to best group the days or months based on the loss for the specific task. There’s not a lot to it.

Perhaps prototype an example and see how it goes?

Is it possible to read Indian languages to create a word2vec model?

Sure.

Thank you. Could you please share the code to create word2vec for other languages? What do I need to change in the Word2Vec function?

It is the same code, just new training data.

Perhaps start here:

https://machinelearningmastery.com/develop-word-embeddings-python-gensim/

Hello Mr. Jason