A question that comes up from time to time is:

What hardware do I need to practice machine learning?

When I was a student, there was a time when I was obsessed with more speed and more cores so that I could run my algorithms faster and for longer. I have since changed my perspective. Big hardware still matters, but only after you have considered a bunch of other factors.

TRS-80!

Photo by blakespot, some rights reserved.

Hardware Lessons

The lesson is: if you are just starting out, your hardware doesn’t matter. Focus on learning with small datasets that fit in memory, such as those from the UCI Machine Learning Repository.
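One rough way to tell whether a dataset is "small" enough is to multiply rows by columns by the bytes each value takes. The sketch below is a back-of-the-envelope check, not a precise measurement: it assumes every cell is an 8-byte float, and the rule of keeping the raw table under a quarter of your RAM is a hypothetical rule of thumb, not something measured.

```python
# Back-of-the-envelope check: does a dense numeric table fit in RAM?
# Assumption: every cell is an 8-byte float (float64). Real tools add
# overhead for indexes, strings and intermediate copies on top of this.


def estimate_bytes(rows: int, cols: int, bytes_per_value: int = 8) -> int:
    """Raw size in bytes of a dense rows x cols numeric table."""
    return rows * cols * bytes_per_value


def fits_in_memory(rows: int, cols: int, ram_gb: float,
                   max_fraction: float = 0.25) -> bool:
    """True if the raw table needs at most `max_fraction` of the RAM.

    The 25% default is a hypothetical rule of thumb that leaves room for
    the copies modelling code tends to make; it is not a measured limit.
    """
    budget = ram_gb * 1024 ** 3 * max_fraction
    return estimate_bytes(rows, cols) <= budget


if __name__ == "__main__":
    # A UCI-sized table: a few thousand rows, a few dozen columns.
    print(estimate_bytes(5_000, 30))                   # 1200000 (about 1.2 MB)
    print(fits_in_memory(5_000, 30, ram_gb=8))         # True
    # 100 million rows x 200 columns is about 160 GB raw.
    print(fits_in_memory(100_000_000, 200, ram_gb=8))  # False
```

Most datasets in the UCI repository are tiny by this measure, which is why any ordinary laptop is enough to start.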

Learn good experimental design, make sure you ask the right questions, and challenge your intuitions by testing diverse algorithms and interpreting your results through the lens of statistical hypothesis testing.

Once hardware does start to matter and you really need lots of cores and a whole lot of RAM, rent it just-in-time for your carefully designed project or experiment.

More CPU! More RAM!

I was naive when I first started in artificial intelligence and machine learning. I would use all the data that was available and run it through my algorithms. I would re-run models with minor tweaks to parameters in an effort to improve the final score. I would run my models for days or weeks on end. I was obsessed.

This mainly stemmed from the fact that competitions got me interested in pushing my machine learning skills. Obsession can be good; you can learn a lot very quickly. But when misapplied, it can waste a lot of time.

I built my own machines in those days. I would update my CPU and RAM often. It was the early 2000s, before multicore was the clear path (to me) and even before GPUs were talked about much for non-graphics use (at least in my circles). I needed bigger and faster CPUs and I needed lots and lots of RAM. I even commandeered the PCs of housemates so that I could do more runs.

A little later whilst in grad school, I had access to a small cluster in the lab and proceeded to make good use of it. But things started to change and it started to matter less how much raw compute power I had available.

Getting serious with GPU hardware for machine learning.

Photo by wstryder, some rights reserved.

Results Are Wrong

The first step in my change was the discovery of good (any) experimental design. I discovered the tools of statistical hypothesis testing which allowed me to get an idea of whether one result really was significantly different (such as better) when compared to another result.

Suddenly, the fractional improvements I thought I was achieving were nothing more than statistical blips. This was an important change. I started to spend a lot more time thinking about the experimental design.
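To make "statistical blip" concrete, here is a minimal sketch of one such test: an exact paired sign-flip permutation test on per-fold scores from two algorithms, using only the Python standard library. A paired t-test (for example `scipy.stats.ttest_rel`) is the more common choice; the permutation version is used here only because it needs no extra packages. The fold scores are hypothetical numbers made up for illustration, and since cross-validation folds share training data, any test of this kind is approximate.

```python
from itertools import product
from statistics import mean


def paired_permutation_test(scores_a, scores_b):
    """Exact two-sided sign-flip permutation test on paired scores.

    scores_a[i] and scores_b[i] must come from the same fold. Under the null
    hypothesis that neither algorithm is better, each per-fold difference is
    equally likely to be positive or negative, so every sign pattern is
    enumerated (2**n of them, fine for 10 folds) and we count how often the
    mean difference is at least as extreme as the observed one.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("scores must be paired")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(mean(diffs))
    extreme = 0
    patterns = list(product((1, -1), repeat=len(diffs)))
    for signs in patterns:
        flipped = [s * d for s, d in zip(signs, diffs)]
        # Small tolerance so float rounding does not drop ties.
        if abs(mean(flipped)) >= observed - 1e-12:
            extreme += 1
    return extreme / len(patterns)


# Hypothetical 10-fold accuracies, made up purely for illustration.
algo_a = [0.81, 0.79, 0.83, 0.80, 0.78, 0.82, 0.80, 0.81, 0.79, 0.82]
algo_b = [0.80, 0.80, 0.82, 0.81, 0.77, 0.81, 0.81, 0.80, 0.80, 0.81]

print(f"mean A = {mean(algo_a):.3f}, mean B = {mean(algo_b):.3f}")
# For these numbers p is about 0.75: A's small lead is easily a blip.
print(f"p-value = {paired_permutation_test(algo_a, algo_b):.3f}")
```

Algorithm A "wins" on average, but a p-value that large says the gap is well within what random fold-to-fold variation would produce.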

Questions Are Wrong

I shifted my obsessions to making sure I was asking good questions.

I now spend a lot of time up front listing as many questions, and variations on those questions, as I can think of for a given problem. I want to make sure that when I run long compute jobs, the results I get really matter and are going to impact the problem.

You can see this in how strongly I advocate spending a lot of time defining your problem.

Intuitions Are Wrong

Good hypothesis testing exposes how little you actually know. Well, it did for me, and it still does. I “knew” that this configuration of that algorithm was stable, reliable and good. Results interpreted through the lens of statistical tests quickly taught me otherwise.

This shifted my thinking: I became less reliant on my old intuitions and started to rebuild my intuition through the lens of statistically significant results.

Now, I don’t assume I know which algorithm or even which class of algorithm will do well on a given problem. I spot check a diverse set and let the data guide me.

I also strongly advise careful consideration of test options and the use of tools like the Weka Experimenter that bake in hypothesis testing when interpreting results.

Best is Not Best

For some problems, the very best results are fragile.

I used to be big into non-linear function optimization (and associated competitions) and you could expend a huge amount of compute time on exploring (in retrospect, essentially enumerating!) search spaces and come up with structures or configurations that were marginally better than easily found solutions.

The thing is, the hard-to-find configurations were commonly very strange or exploited bugs or quirks in the domain or simulator. These solutions were good for competitions or experiments because the numbers were better, but they were not necessarily viable for use in the domain or in operations.

I see the same pattern in machine learning competitions. A quick and easily found solution scores lower on a given performance measure, but it is robust. Often, once you pour days, weeks, and months into tuning your models, you are building a fragile model of glass that is very much overfit to the training data and/or the leaderboard. That is good for learning and for doing well in competitions, but not necessarily usable in operations (for example, the Netflix Prize-winning system was not deployed).
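Part of why a heavily tuned "best" result is fragile can be seen in a toy simulation. In the sketch below, every candidate configuration has exactly the same true score, and each is evaluated once with a little random noise; picking the best observed score still appears to beat the true score, more so the more candidates you try. The true score of 0.80 and the noise level of 0.01 are made-up assumptions, and real tuning is messier (candidates genuinely differ, and leaderboards reuse one fixed test set), but the selection effect is the same.

```python
import random


def best_of_many(n_configs, true_score=0.80, noise_sd=0.01, seed=1):
    """Toy model of heavy tuning.

    Every one of n_configs candidates has the SAME true score; each is
    evaluated once with Gaussian noise, and we keep the best observed score.
    Any margin above true_score is therefore pure selection effect.
    """
    rng = random.Random(seed)
    observed = [rng.gauss(true_score, noise_sd) for _ in range(n_configs)]
    return max(observed)


for n in (1, 10, 100, 1000):
    print(f"{n:>5} configs tried -> best observed score {best_of_many(n):.4f}")
```

With a fixed seed, each larger search sees the same first draws plus more, so the "best" score can only creep upward, even though no configuration is actually better than any other.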

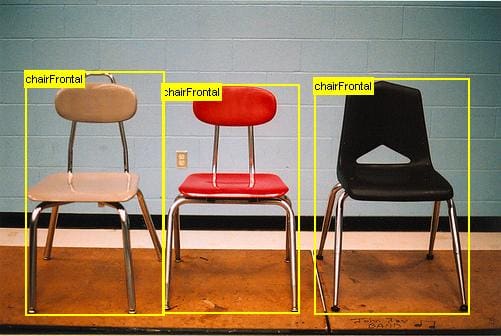

Machine Learning in a Data Center.

Photo by bandarji, some rights reserved.

Machine Learning Hardware

There are big data problems that require big hardware. Learning about big machine learning requires big data and big hardware.

On this site, I focus on beginners starting out in machine learning, who are much better off with small data on small hardware. Once you have a good handle on machine learning, you can graduate to bigger problems.

Today, I have an iMac i7 with a bunch of cores and 8 GB of RAM. It’s a run-of-the-mill workstation and does the job. I think that your workstation or laptop is good enough to get started in machine learning.

I do need bigger hardware on occasion, such as for a competition or for my own personal satisfaction. On these occasions I rent cloud infrastructure, spin up some instances, run my models, and then download the CSV predictions or whatever else I need. It’s very cheap in time and dollars.

When it comes time for you to start practicing on big hardware with big data, rent it. Invest a little bit of money in your own education, design some careful experiments and rent a cluster to execute them.

What hardware do you practice machine learning on? Leave a comment and share your experiences.

I need to upgrade my desktop and want to run some AI/machine learning algorithms on it. I see people using Nvidia graphics cards to speed things up. I am wondering which cards to get and what general computer specs I need. This is just for home use, and I would like something reasonably priced, if not dirt cheap. Lol. Thanks.

What if it is for both ML and gaming? It is a hard and critical problem.

Hey Jason,

Thanks for your blog and this writeup. I’ve found it to be very useful.

What do you think is a good heuristic limit on the size (rows × columns) of data that one can analyze on a decent laptop of the type you mention in your write-up versus, say, EC2?

Hi Ganesh,

I need fast turn around times. I want results in minutes. This means I often scale data down to a size where I can model it in minutes. I then use big computers to help understand how the results on small data map to the full dataset.

I find the real bottleneck is ideas and testing them. You want an environment that helps you test things fast.
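The scale-down-first approach described in this reply can be sketched as drawing a reproducible random subset of rows and re-running the same experiment at a few sizes to see how the answer changes as the data grows. This is a minimal illustration, not the author's actual tooling: the helper `subsample_rows` is hypothetical, the 100,000-row dataset is synthetic, and the "experiment" is just a positive-class rate standing in for a real model evaluation.

```python
import random


def subsample_rows(rows, fraction, seed=7):
    """Return a reproducible random subset (without replacement) of `rows`."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, int(len(rows) * fraction))
    rng = random.Random(seed)
    return rng.sample(rows, k)


if __name__ == "__main__":
    # Synthetic stand-in for a large dataset: 100,000 (feature, label) rows.
    gen = random.Random(0)
    data = [(gen.random(), int(gen.random() < 0.3)) for _ in range(100_000)]

    # Run the same cheap "experiment" (here just the positive-class rate) on
    # growing subsamples to see how quickly the small-data answer settles.
    for fraction in (0.01, 0.1, 1.0):
        sample = subsample_rows(data, fraction)
        rate = sum(label for _, label in sample) / len(sample)
        print(f"{len(sample):>7} rows -> positive rate {rate:.3f}")
```

Fixing the seed keeps each subsample identical across runs, so differences between experiments come from the ideas being tested rather than from a different draw of the data.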

I am also facing the same kind of problem. Can you specifically recommend some “cloud infrastructure”?

I am new to machine learning and I don’t think I’m ready to rent a cluster yet. How about a laptop with a decent GPU? Right now I don’t have access to large data to play with. I have a laptop with a GTX 950M.

Awesome books, I bought 3 of them.

Thanks Jonathan.

What is the minimum configuration needed to train a deep learning model? Do I need an NVIDIA GPU, or is it possible on Intel HD Graphics?

No, you can use the CPU until you need to train large models, then you can use AWS.

I am torn between AMD and Intel CPUs. Which should I buy for machine learning? Are there any compatibility issues between an AMD CPU and an NVIDIA graphics card?

I would recommend performing large GPU experiments in the cloud using AWS:

https://machinelearningmastery.com/develop-evaluate-large-deep-learning-models-keras-amazon-web-services/

Honestly, I am only looking for an excuse to buy a high-end gaming laptop. I am not getting it from here… but it is very educational information. Cheers.

Thanks.

Very good advice… I have also concluded I need to brush up on my statistical knowledge stack. It is not enough to be able to use different models without having a beyond-shallow statistical understanding of results and model behaviour.

If you could also recommend good resources for improving one’s statistical knowledge, it’d be super nice.

Thanks!

Perhaps you could start here:

https://machinelearningmastery.com/crash-course-statistics-machine-learning/

This article is full of wisdom, particularly regarding fragile models. You gave me a new perspective on my ML results. Thanks!

I’m glad to hear it.

Hello, I don’t know anything about machine learning. I just have a question. I have learnt about the Internet of Things, where I used hardware components like Arduino boards and sensors. Are there any hardware components like that in machine learning, apart from a computer or laptop?

You can use machine learning on any computing device you wish.

Perhaps IoT would be a great source of data as input for training a model.

Hello, I’m just a beginner in this field and I recently bought a Dell Inspiron 15 5570: i7 8th gen, 8 GB RAM, 1 TB HDD + 128 GB SSD, Windows 10, with an Intel HD 620 integrated GPU. It does not have a dedicated graphics card. Is it sufficient for machine learning and AI, or do I need a dedicated graphics card?

Your hardware sounds fine; you’re ready to begin!

Hi Jason,

Can you tell me how much time a Dell Inspiron 15 5570 (i7 8th gen, 8 GB RAM, 1 TB HDD + 128 GB SSD, Windows 10, Intel HD 620 integrated GPU) would take to run a machine learning algorithm that detects and recognizes 60 different faces in a single photograph?

No, sorry. I don’t know. Perhaps run experiments to discover the answer.

Hi Jason

Thank you for this sensible article. I am starting to learn machine learning and data science. I was kind of surprised when one of my friends came forward to help me learn and said he had bought a laptop with GPU power for almost AU $4,500, and I was like, what…

Then I found this article while googling. It convinced me that to learn and experiment I don’t need a complex, high-powered machine; I can always fall back on Google/AWS if required.

I appreciate your help on this article.

Thanks.

Yes, I still use AWS EC2 today and have saved a lot of money in doing so.

Hi Jason

I work on Mac OS X with this configuration: i5 processor, 10 GB 1333 MHz DDR3, Intel HD Graphics 3000 512 MB. I need to process high-resolution image datasets for my work. Everything works fine with other machine learning algorithms in a conda environment. But is this configuration suitable for computationally intensive deep learning in an OpenCV environment? Can you please help me out?

It is a good start.

You can run large models on EC2 later.

Thanks Jason for your prompt reply! It is certainly a good option!

It is the approach that I use.

My current computer specifications are: i5 3rd gen, dual-core with a max speed of 1.70 GHz, 4 GB RAM and an Nvidia GeForce GT 640M LE…

Sounds great!

My laptop specification is Core i7 7th Gen (7700HQ CPU, 2.80 GHz), 32 GB RAM, NVIDIA GeForce GTX 1050 Ti. I bought this to run some CPU-heavy applications in my field of networking, but I assume it will serve well for machine learning too? What do you suggest, Jason?

I generally recommend using AWS EC2 when getting started with GPUs:

https://machinelearningmastery.com/faq/single-faq/do-i-need-special-hardware-for-deep-learning

Hi Jason

How are you?

How can I compare the hardware required by two machine learning (ML) models?

Is there any measure that shows my ML model needs less computer hardware than another ML model?

Sorry, I’m not sure I understand your question.

Perhaps you can elaborate?