A large portion of the field of statistics and statistical methods is dedicated to data where the distribution is known.

Samples of data whose distribution we already know, or can easily identify, are called parametric data. In common usage, parametric often refers to data drawn from a Gaussian distribution. Data whose distribution is unknown or cannot be easily identified is called nonparametric.

In the case where you are working with nonparametric data, specialized nonparametric statistical methods can be used that discard all information about the distribution. As such, these methods are often referred to as distribution-free methods.

In this tutorial, you will discover nonparametric statistics and their role in applied machine learning.

After completing this tutorial, you will know:

- The difference between parametric and nonparametric data.

- How to rank data in order to discard all information about the data’s distribution.

- Examples of statistical methods that can be used for ranked data.

Let’s get started.

A Gentle Introduction to Nonparametric Statistics

Photo by Daniel Hartwig, some rights reserved.

Tutorial Overview

This tutorial is divided into 4 parts; they are:

- Parametric Data

- Nonparametric Data

- Ranking Data

- Working with Ranked Data

Parametric Data

Parametric data is a sample of data drawn from a known data distribution.

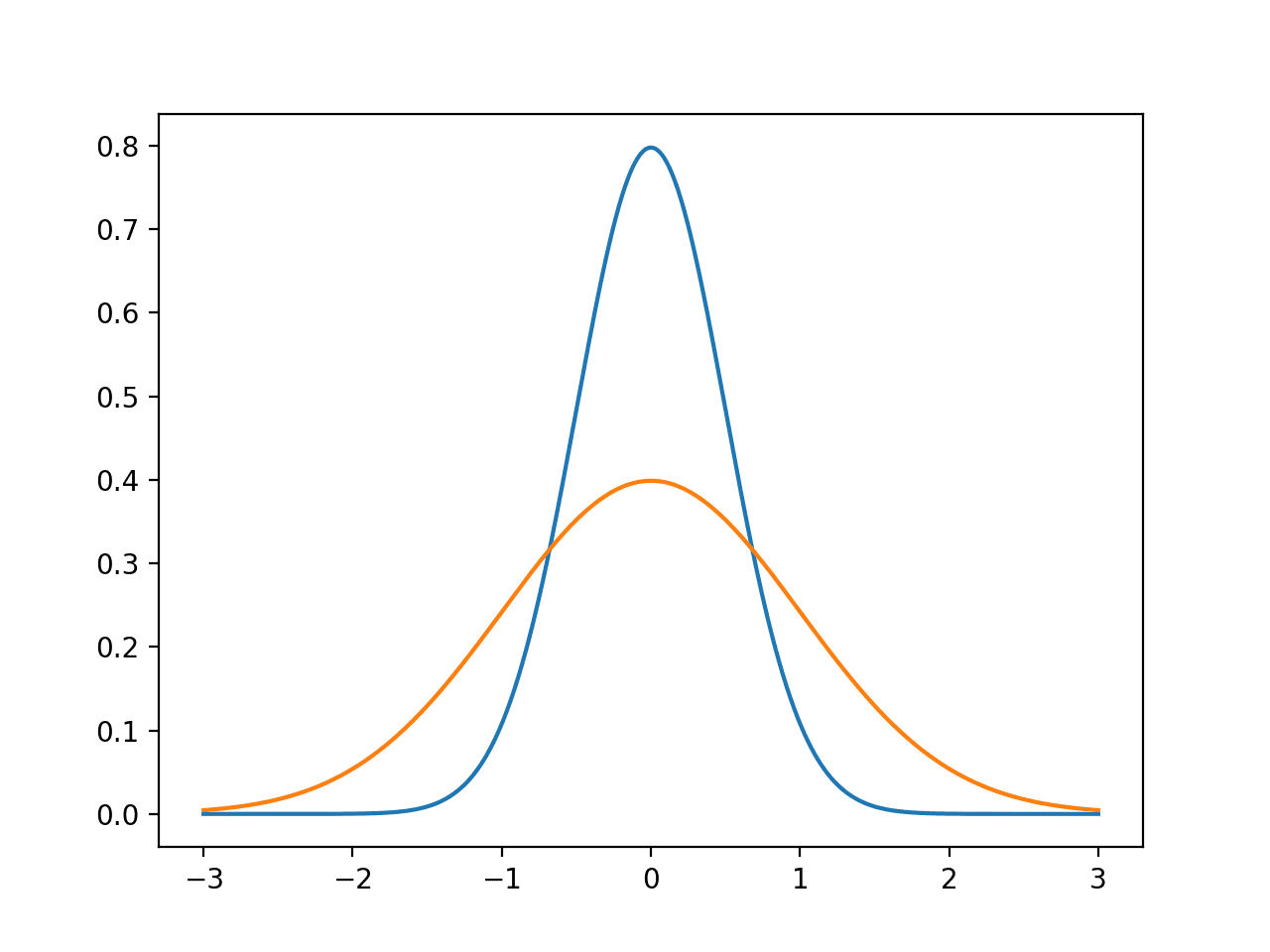

This means that we already know the distribution or we have identified the distribution, and that we know the parameters of the distribution. Often, parametric is shorthand for real-valued data drawn from a Gaussian distribution. This is a useful shorthand, but strictly this is not entirely accurate.

If we have parametric data, we can use parametric methods. Continuing with the shorthand of parametric meaning Gaussian, we can then harness the entire suite of statistical methods developed for data assumed to follow a Gaussian distribution, such as:

- Summary statistics.

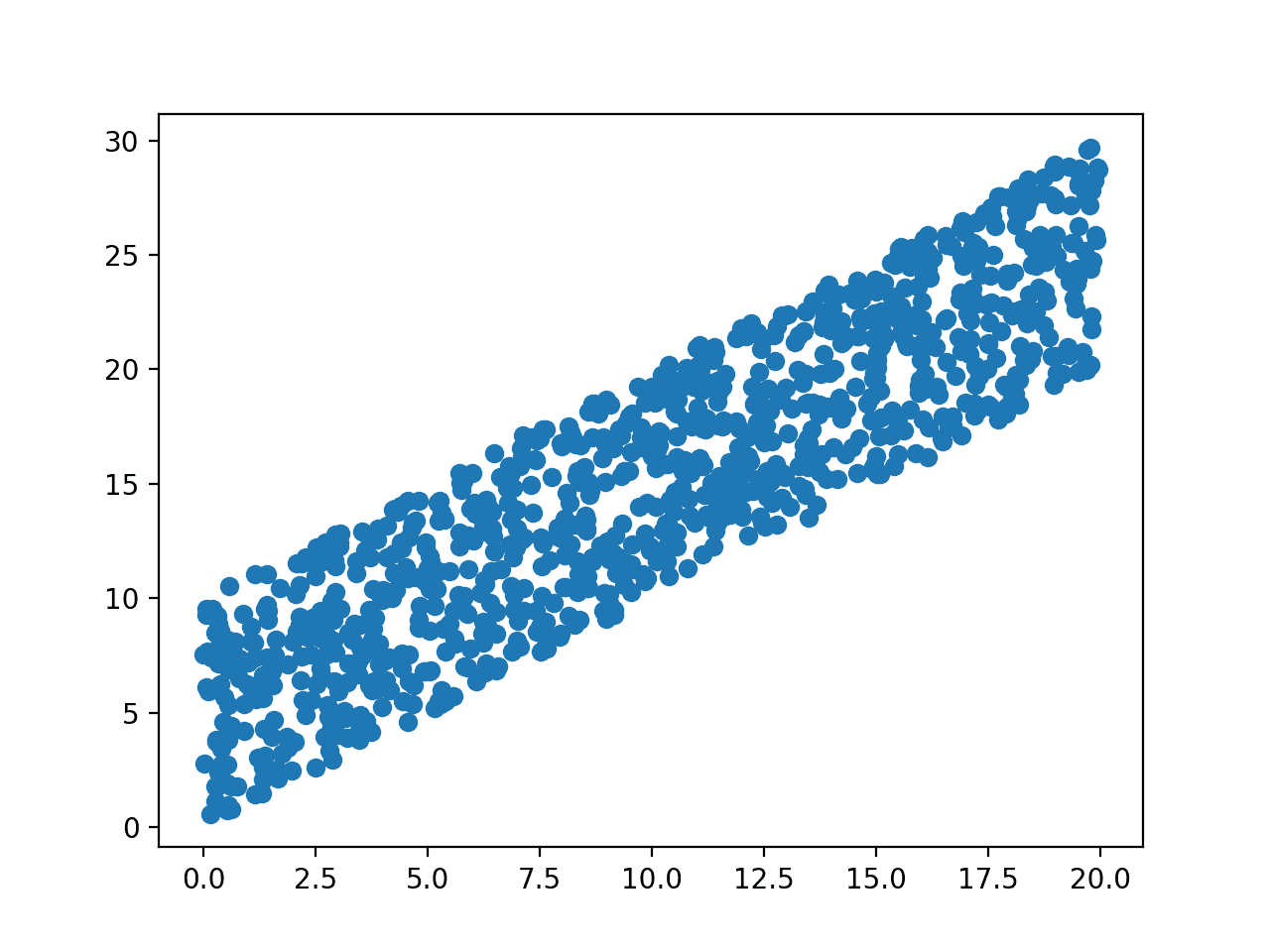

- Correlation between variables.

- Significance tests for comparing means.

In general, we prefer to work with parametric data, and even go so far as to use data preparation methods that make data parametric, such as data transforms, so that we can harness these well-understood statistical methods.
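As a rough illustration of such a data transform, the sketch below draws a skewed sample and applies a log transform so that it looks more Gaussian. The log-normal sample, the seed, and the variable names are assumptions chosen for illustration; they are not part of the original tutorial.

```python
# sketch: a log transform can make a skewed sample look more Gaussian
import numpy as np
from scipy.stats import shapiro, skew

# assumption: a log-normal sample stands in for skewed real-valued data
rng = np.random.default_rng(1)
raw = rng.lognormal(mean=0.0, sigma=1.0, size=500)

# the log of log-normal data is Gaussian by construction
transformed = np.log(raw)

# skewness near 0 suggests a symmetric, more Gaussian-like shape
raw_skew = skew(raw)
transformed_skew = skew(transformed)
print('skew before: %.3f, after: %.3f' % (raw_skew, transformed_skew))

# Shapiro-Wilk p-values: a small p-value suggests the sample is not Gaussian
_, raw_p = shapiro(raw)
_, transformed_p = shapiro(transformed)
print('Shapiro-Wilk p-value before: %.3g, after: %.3g' % (raw_p, transformed_p))
```

A log transform only helps for positive, right-skewed data; other shapes may need a different transform or a nonparametric method.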

Nonparametric Data

Data that does not fit a known or well-understood distribution is referred to as nonparametric data.

Data could be nonparametric for many reasons, such as:

- Data is not real-valued, but instead is ordinal, interval, or some other form.

- Data is real-valued but does not fit a well understood shape.

- Data is almost parametric but contains outliers, multiple peaks, a shift, or some other feature.
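To make the outlier case concrete, the sketch below adds two extreme values to an otherwise Gaussian sample and compares how far the mean and the median move. The sample, the outlier values, and the variable names are assumptions for illustration only.

```python
# sketch: a few outliers shift the mean far more than the median
import numpy as np

# assumption: a Gaussian sample with mean 50 and standard deviation 5
rng = np.random.default_rng(1)
clean = rng.normal(loc=50.0, scale=5.0, size=100)

# assumption: two extreme values stand in for outliers
contaminated = np.concatenate([clean, [500.0, 600.0]])

# compare how much each summary statistic moves
mean_shift = abs(contaminated.mean() - clean.mean())
median_shift = abs(np.median(contaminated) - np.median(clean))
print('mean shift: %.3f' % mean_shift)
print('median shift: %.3f' % median_shift)
```

The median depends only on the order of the values, which is why order-based statistics are less sensitive to this kind of feature.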

There is a suite of methods that we can use for nonparametric data called nonparametric statistical methods. In fact, most parametric methods have an equivalent nonparametric version.

In general, nonparametric methods have less statistical power than their parametric counterparts because they discard information about the distribution and must be general enough to work for all types of data. We can still use them for inference and to make claims about findings and results, but those claims will not hold the same weight as similar claims made with parametric methods.

In the case of ordinal or interval data, nonparametric statistics are the only type of statistics that can be used. For real-valued data, nonparametric statistical methods are required in applied machine learning when you are trying to make claims on data that does not fit the familiar Gaussian distribution.

Ranking Data

Before many nonparametric statistical methods can be applied, the data must be converted into a rank format.

As such, statistical methods that expect data in rank format are sometimes called rank statistics, such as rank correlation and rank statistical hypothesis tests.

Ranking data is exactly as its name suggests. The procedure is as follows:

- Sort all data in the sample in ascending order.

- Assign an integer rank from 1 to N for each unique value in the data sample.

For example, imagine we have the following data sample, presented as a column:

```
0.020
0.184
0.431
0.550
0.620
```

We can sort it as follows:

```
0.020
0.184
0.431
0.550
0.620
```

Then assign a rank to each value, starting at 1:

```
1 = 0.020
2 = 0.184
3 = 0.431
4 = 0.550
5 = 0.620
```

We can then apply this procedure to another data sample and start using nonparametric statistical methods.
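The sort-then-assign procedure can be sketched in a few lines of NumPy. This is a minimal sketch, not a reference implementation: the function name rank_sample is hypothetical, the five values are the ones from the example placed in a shuffled order chosen for illustration, and ties are simply broken by order of appearance.

```python
# sketch: rank a sample by sorting it and assigning ranks 1..N
import numpy as np

def rank_sample(values):
    # hypothetical helper: returns the rank of each value in its original position
    values = np.asarray(values)
    # indices that would sort the sample in ascending order
    order = np.argsort(values, kind='stable')
    # the smallest value gets rank 1, the largest gets rank N
    ranks = np.empty(len(values), dtype=int)
    ranks[order] = np.arange(1, len(values) + 1)
    return ranks

# assumption: the example values in a shuffled order
sample = [0.550, 0.184, 0.431, 0.020, 0.620]
ranks = rank_sample(sample)
print(ranks)
```

Each rank is reported in the position of the original value, so 0.020 receives rank 1 even though it is the fourth observation.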

There are variations on this procedure for special circumstances such as handling ties, using a reverse ranking, and using a fractional rank score, but the general properties hold.

The SciPy library provides the rankdata() function to rank numerical data, which supports a number of variations on ranking.

The example below demonstrates how to rank a numerical dataset.

```python
from numpy.random import rand
from numpy.random import seed
from scipy.stats import rankdata
# seed random number generator
seed(1)
# generate dataset
data = rand(1000)
# review first 10 samples
print(data[:10])
# rank data
ranked = rankdata(data)
# review first 10 ranked samples
print(ranked[:10])
```

Running the example first generates a sample of 1,000 random numbers from a uniform distribution, then ranks the data sample and prints the result.

```
[4.17022005e-01 7.20324493e-01 1.14374817e-04 3.02332573e-01
 1.46755891e-01 9.23385948e-02 1.86260211e-01 3.45560727e-01
 3.96767474e-01 5.38816734e-01]
[408. 721.   1. 300. 151.  93. 186. 342. 385. 535.]
```
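The ranking variations mentioned earlier, such as tie handling and reverse ranking, can be explored with the method argument of rankdata(). The sketch below uses a tiny sample with one tie; the sample values and variable names are assumptions for illustration.

```python
# sketch: how rankdata() treats ties under different methods
import numpy as np
from scipy.stats import rankdata

# assumption: a small sample with one tied pair (the two 20s)
data = np.array([10, 20, 20, 30])

# rank the same sample with each tie-handling method
tie_ranks = {}
for method in ('average', 'min', 'max', 'dense', 'ordinal'):
    tie_ranks[method] = rankdata(data, method=method)
    print(method, tie_ranks[method])

# a reverse ranking (largest value gets rank 1) by ranking the negated data
reverse_ranks = rankdata(-data)
print('reverse', reverse_ranks)
```

With the default average method, the two tied values share the fractional rank 2.5, which is the mean of the ranks 2 and 3 they would otherwise occupy.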

Working with Ranked Data

There are statistical tools that you can use to check if your sample data fits a given distribution.

Normality Testing

For example, if we take nonparametric data as data that does not look Gaussian, then you can use statistical methods that quantify how Gaussian a sample of data is and use nonparametric methods if the data fails those tests.

Three examples of statistical methods for normality testing, as it is called, are:

- Shapiro-Wilk test.

- Kolmogorov-Smirnov test.

- Anderson-Darling test.
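A minimal sketch of this kind of check is given below, using the shapiro() and kstest() functions from scipy.stats on one Gaussian and one clearly skewed sample. The samples, the seed, and the 0.05 threshold are assumptions for illustration. The Anderson-Darling test is available as scipy.stats.anderson() and is left out here for brevity.

```python
# sketch: normality checks on a Gaussian and a skewed sample
import numpy as np
from scipy.stats import kstest, shapiro

# assumption: synthetic samples chosen for illustration
rng = np.random.default_rng(1)
gaussian = rng.normal(loc=50.0, scale=5.0, size=200)
skewed = rng.exponential(scale=5.0, size=200)

# assumption: a conventional 5% significance level
alpha = 0.05
results = {}
for name, sample in (('gaussian', gaussian), ('skewed', skewed)):
    # Shapiro-Wilk test: a small p-value suggests the sample is not Gaussian
    _, sw_p = shapiro(sample)
    # Kolmogorov-Smirnov test against a normal distribution with the sample's
    # own mean and standard deviation; estimating these from the same sample
    # makes the p-value approximate
    _, ks_p = kstest(sample, 'norm', args=(sample.mean(), sample.std(ddof=1)))
    results[name] = (sw_p, ks_p)
    verdict = 'looks Gaussian' if sw_p > alpha else 'does not look Gaussian'
    print('%s: Shapiro-Wilk p=%.3g, KS p=%.3g, %s' % (name, sw_p, ks_p, verdict))
```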

For examples of using these methods, see the tutorial A Gentle Introduction to Normality Tests in Python, listed under Further Reading.

Once you have decided to use nonparametric statistics, you must then rank your data.

In fact, most of the tools that you use for inference will perform the ranking of the sample data automatically. Nevertheless, it is important to understand how your sample data is being transformed prior to performing the tests.

In applied machine learning, there are two main types of questions that you may have about your data that you can address with nonparametric statistical methods.

Relationship Between Variables

Methods for quantifying the dependency between variables are called correlation methods.

Two nonparametric statistical correlation methods that you can use are:

- Spearman’s rank correlation coefficient.

- Kendall rank correlation coefficient.
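Both coefficients are available in scipy.stats as spearmanr() and kendalltau(). The sketch below applies them to a monotonic but nonlinear relationship and also checks that Spearman's coefficient matches Pearson's correlation computed on the ranked data, which shows the ranking being done for you. The synthetic data and variable names are assumptions for illustration.

```python
# sketch: rank correlation on a monotonic, nonlinear relationship
import numpy as np
from scipy.stats import kendalltau, pearsonr, rankdata, spearmanr

# assumption: y grows exponentially with x, plus a little Gaussian noise
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 10.0, size=200)
y = np.exp(x) + rng.normal(0.0, 1.0, size=200)

# Spearman's rank correlation coefficient
rho, rho_p = spearmanr(x, y)
print("Spearman's rho: %.3f (p=%.3g)" % (rho, rho_p))

# Kendall's rank correlation coefficient
tau, tau_p = kendalltau(x, y)
print("Kendall's tau: %.3f (p=%.3g)" % (tau, tau_p))

# Pearson's correlation on the ranks reproduces Spearman's coefficient
r_on_ranks, _ = pearsonr(rankdata(x), rankdata(y))
print('Pearson on ranks: %.3f' % r_on_ranks)
```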

For examples of using these methods, see the tutorial How to Calculate Nonparametric Rank Correlation in Python, listed under Further Reading.

Compare Sample Means

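Methods for quantifying whether the means of two populations are significantly different are called statistical significance tests. The nonparametric versions work on ranks, so they compare the distributions of the samples rather than the means directly.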

Four nonparametric statistical significance tests that you can use are:

- Mann-Whitney U Test.

- Wilcoxon Signed-Rank Test.

- Kruskal-Wallis H Test.

- Friedman Test.
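All four tests are available in scipy.stats as mannwhitneyu(), wilcoxon(), kruskal(), and friedmanchisquare(). The sketch below runs each one on shifted uniform samples. The samples, the shifts, and the choice to treat the samples as paired for the Wilcoxon and Friedman tests are assumptions made purely for illustration.

```python
# sketch: four nonparametric significance tests on shifted uniform samples
import numpy as np
from scipy.stats import friedmanchisquare, kruskal, mannwhitneyu, wilcoxon

# assumption: three non-Gaussian samples, each shifted relative to the last
rng = np.random.default_rng(1)
a = rng.uniform(0.0, 1.0, size=100)
b = rng.uniform(0.3, 1.3, size=100)
c = rng.uniform(0.6, 1.6, size=100)

# Mann-Whitney U test: two independent samples
_, u_p = mannwhitneyu(a, b)
# Wilcoxon signed-rank test: two paired samples (pairing assumed here)
_, w_p = wilcoxon(a, b)
# Kruskal-Wallis H test: two or more independent samples
_, h_p = kruskal(a, b, c)
# Friedman test: three or more paired samples (pairing assumed here)
_, f_p = friedmanchisquare(a, b, c)

p_values = {'Mann-Whitney U': u_p, 'Wilcoxon': w_p,
            'Kruskal-Wallis H': h_p, 'Friedman': f_p}
for name, p in p_values.items():
    verdict = 'different distributions' if p < 0.05 else 'no detected difference'
    print('%s: p=%.3g, %s' % (name, p, verdict))
```

Which test applies depends on whether the samples are independent or paired and on how many samples are being compared.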

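For examples of using these methods, see the tutorial How to Calculate Nonparametric Statistical Hypothesis Tests in Python, listed under Further Reading.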

Extensions

This section lists some ideas for extending the tutorial that you may wish to explore.

- List three examples of when you think you might need to use nonparametric statistical methods in an applied machine learning project.

- Develop your own example to demonstrate the capabilities of the rankdata() function.

- Write your own function to rank a provided univariate dataset.

If you explore any of these extensions, I’d love to know.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Tutorial

- A Gentle Introduction to Normality Tests in Python

- How to Calculate Nonparametric Rank Correlation in Python

- How to Calculate Nonparametric Statistical Hypothesis Tests in Python

Articles

- Parametric statistics on Wikipedia

- Nonparametric statistics on Wikipedia

- Ranking on Wikipedia

- Rank correlation on Wikipedia

- Normality test on Wikipedia

Summary

In this tutorial, you discovered nonparametric statistics and their role in applied machine learning.

Specifically, you learned:

- The difference between parametric and nonparametric data.

- How to rank data in order to discard all information about the data’s distribution.

- Examples of statistical methods that can be used for ranked data.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Thanks for the useful sharing and valuable resources. In future, I would recommend giving examples of real-case scenarios, such as cancer detection in the medical field.

Thanks for the suggestion.

Thanks for the clarity of this primer. It reassured me that using ranking to transform some BOD:TOC data in environmental analyses in order to develop a site-specific equation was the smart thing to do for my NPDES permit.

Thanks again.

I’m happy it helped.

I seriously have no idea what you are talking about. This was not as gentle an introduction as I had hoped. I obviously need this dumbed down even further.

Perhaps start here:

https://machinelearningmastery.com/start-here/#statistical_methods

I understood a bit, but I am not fully convinced about parametric data. Can you explain further with examples?

Parametric data just means it fits a distribution we know/understand and that distribution has parameters that we know will influence the structure in predictable ways.

Does that help?

Good morning Jason.

If I understand correctly, the Ranking Data procedure converts a DataFrame with several columns of floating point numbers and N rows into a table whose columns contain all integers in the range [1,N] (as you showed in the example with 1 column/variable and 1000 rows).

However, I do not get the part about performing normality tests on those ranked columns. If I understand correctly, ranking keeps only the order of the numbers and removes the rest of the information about the shape of the distribution. How would any normality test work with such an input?

Best regards!

Yes.

They test whether each distribution ranks examples the same way, or not.

Hi,

Is there a way to do the Shapiro-Wilk test purely in Python without using SciPy, similar to your articles on implementing the Student's t-test? Is there any source code reference available? It would be helpful if you could provide links.

Yes, you would have to code it yourself, I believe.

I don’t have an implementation, sorry.