Optimization is the problem of finding a set of inputs to an objective function that results in a maximum or minimum function evaluation.

It is the challenging problem that underlies many machine learning algorithms, from fitting logistic regression models to training artificial neural networks.

There are perhaps hundreds of popular optimization algorithms, and perhaps tens of algorithms to choose from in popular scientific code libraries. This can make it challenging to know which algorithms to consider for a given optimization problem.

In this tutorial, you will discover a guided tour of different optimization algorithms.

After completing this tutorial, you will know:

- Optimization algorithms may be grouped into those that use derivatives and those that do not.

- Classical algorithms use the first and sometimes second derivative of the objective function.

- Direct search and stochastic algorithms are designed for objective functions where function derivatives are unavailable.

Kick-start your project with my new book Optimization for Machine Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

How to Choose an Optimization Algorithm

Photo by Matthewjs007, some rights reserved.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Optimization Algorithms

- Differentiable Objective Function

- Non-Differential Objective Function

Optimization Algorithms

Optimization refers to a procedure for finding the input parameters or arguments to a function that result in the minimum or maximum output of the function.

The most common type of optimization problems encountered in machine learning are continuous function optimization, where the input arguments to the function are real-valued numeric values, e.g. floating point values. The output from the function is also a real-valued evaluation of the input values.

We might refer to problems of this type as continuous function optimization, to distinguish from functions that take discrete variables and are referred to as combinatorial optimization problems.

There are many different types of optimization algorithms that can be used for continuous function optimization problems, and perhaps just as many ways to group and summarize them.

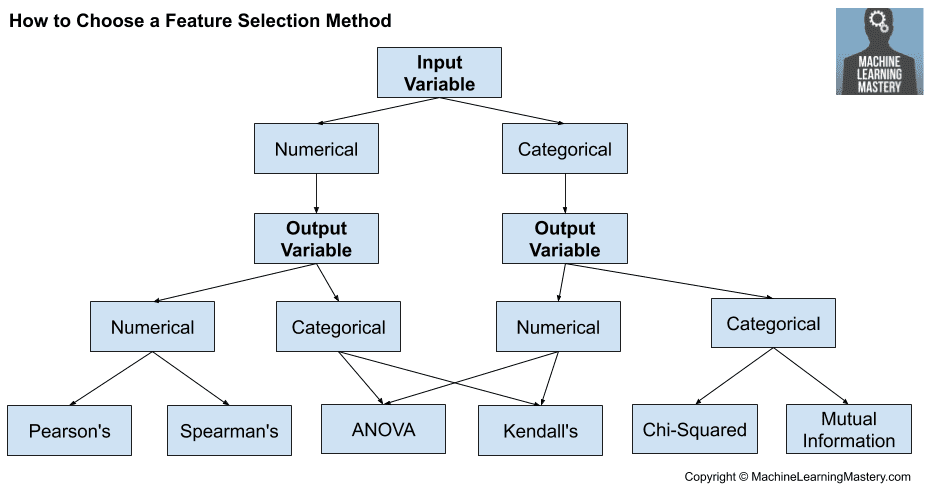

One approach to grouping optimization algorithms is based on the amount of information available about the target function that is being optimized that, in turn, can be used and harnessed by the optimization algorithm.

Generally, the more information that is available about the target function, the easier the function is to optimize if the information can effectively be used in the search.

Perhaps the major division in optimization algorithms is whether the objective function can be differentiated at a point or not. That is, whether the first derivative (gradient or slope) of the function can be calculated for a given candidate solution or not. This partitions algorithms into those that can make use of the calculated gradient information and those that do not.

- Differentiable Target Function?

- Algorithms that use derivative information.

- Algorithms that do not use derivative information.

We will use this as the major division for grouping optimization algorithms in this tutorial and look at algorithms for differentiable and non-differentiable objective functions.

Note: this is not an exhaustive coverage of algorithms for continuous function optimization, although it does cover the major methods that you are likely to encounter as a regular practitioner.

Want to Get Started With Optimization Algorithms?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

Differentiable Objective Function

A differentiable function is a function where the derivative can be calculated for any given point in the input space.

The derivative of a function for a value is the rate or amount of change in the function at that point. It is often called the slope.

- First-Order Derivative: Slope or rate of change of an objective function at a given point.

The derivative of the function with more than one input variable (e.g. multivariate inputs) is commonly referred to as the gradient.

- Gradient: Derivative of a multivariate continuous objective function.

A derivative for a multivariate objective function is a vector, and each element in the vector is called a partial derivative, or the rate of change for a given variable at the point assuming all other variables are held constant.

- Partial Derivative: Element of a derivative of a multivariate objective function.

We can calculate the derivative of the derivative of the objective function, that is the rate of change of the rate of change in the objective function. This is called the second derivative.

- Second-Order Derivative: Rate at which the derivative of the objective function changes.

For a function that takes multiple input variables, this is a matrix and is referred to as the Hessian matrix.

- Hessian matrix: Second derivative of a function with two or more input variables.

Simple differentiable functions can be optimized analytically using calculus. Typically, the objective functions that we are interested in cannot be solved analytically.

Optimization is significantly easier if the gradient of the objective function can be calculated, and as such, there has been a lot more research into optimization algorithms that use the derivative than those that do not.

Some groups of algorithms that use gradient information include:

- Bracketing Algorithms

- Local Descent Algorithms

- First-Order Algorithms

- Second-Order Algorithms

Note: this taxonomy is inspired by the 2019 book “Algorithms for Optimization.”

Let’s take a closer look at each in turn.

Bracketing Algorithms

Bracketing optimization algorithms are intended for optimization problems with one input variable where the optima is known to exist within a specific range.

Bracketing algorithms are able to efficiently navigate the known range and locate the optima, although they assume only a single optima is present (referred to as unimodal objective functions).

Some bracketing algorithms may be able to be used without derivative information if it is not available.

Examples of bracketing algorithms include:

- Fibonacci Search

- Golden Section Search

- Bisection Method

Local Descent Algorithms

Local descent optimization algorithms are intended for optimization problems with more than one input variable and a single global optima (e.g. unimodal objective function).

Perhaps the most common example of a local descent algorithm is the line search algorithm.

- Line Search

There are many variations of the line search (e.g. the Brent-Dekker algorithm), but the procedure generally involves choosing a direction to move in the search space, then performing a bracketing type search in a line or hyperplane in the chosen direction.

This process is repeated until no further improvements can be made.

The limitation is that it is computationally expensive to optimize each directional move in the search space.

First-Order Algorithms

First-order optimization algorithms explicitly involve using the first derivative (gradient) to choose the direction to move in the search space.

The procedures involve first calculating the gradient of the function, then following the gradient in the opposite direction (e.g. downhill to the minimum for minimization problems) using a step size (also called the learning rate).

The step size is a hyperparameter that controls how far to move in the search space, unlike “local descent algorithms” that perform a full line search for each directional move.

A step size that is too small results in a search that takes a long time and can get stuck, whereas a step size that is too large will result in zig-zagging or bouncing around the search space, missing the optima completely.

First-order algorithms are generally referred to as gradient descent, with more specific names referring to minor extensions to the procedure, e.g.:

- Gradient Descent

- Momentum

- Adagrad

- RMSProp

- Adam

The gradient descent algorithm also provides the template for the popular stochastic version of the algorithm, named Stochastic Gradient Descent (SGD) that is used to train artificial neural networks (deep learning) models.

The important difference is that the gradient is appropriated rather than calculated directly, using prediction error on training data, such as one sample (stochastic), all examples (batch), or a small subset of training data (mini-batch).

The extensions designed to accelerate the gradient descent algorithm (momentum, etc.) can be and are commonly used with SGD.

- Stochastic Gradient Descent

- Batch Gradient Descent

- Mini-Batch Gradient Descent

Second-Order Algorithms

Second-order optimization algorithms explicitly involve using the second derivative (Hessian) to choose the direction to move in the search space.

These algorithms are only appropriate for those objective functions where the Hessian matrix can be calculated or approximated.

Examples of second-order optimization algorithms for univariate objective functions include:

- Newton’s Method

- Secant Method

Second-order methods for multivariate objective functions are referred to as Quasi-Newton Methods.

- Quasi-Newton Method

There are many Quasi-Newton Methods, and they are typically named for the developers of the algorithm, such as:

- Davidson-Fletcher-Powell

- Broyden-Fletcher-Goldfarb-Shanno (BFGS)

- Limited-memory BFGS (L-BFGS)

Now that we are familiar with the so-called classical optimization algorithms, let’s look at algorithms used when the objective function is not differentiable.

Non-Differential Objective Function

Optimization algorithms that make use of the derivative of the objective function are fast and efficient.

Nevertheless, there are objective functions where the derivative cannot be calculated, typically because the function is complex for a variety of real-world reasons. Or the derivative can be calculated in some regions of the domain, but not all, or is not a good guide.

Some difficulties on objective functions for the classical algorithms described in the previous section include:

- No analytical description of the function (e.g. simulation).

- Multiple global optima (e.g. multimodal).

- Stochastic function evaluation (e.g. noisy).

- Discontinuous objective function (e.g. regions with invalid solutions).

As such, there are optimization algorithms that do not expect first- or second-order derivatives to be available.

These algorithms are sometimes referred to as black-box optimization algorithms as they assume little or nothing (relative to the classical methods) about the objective function.

A grouping of these algorithms include:

- Direct Algorithms

- Stochastic Algorithms

- Population Algorithms

Let’s take a closer look at each in turn.

Direct Algorithms

Direct optimization algorithms are for objective functions for which derivatives cannot be calculated.

The algorithms are deterministic procedures and often assume the objective function has a single global optima, e.g. unimodal.

Direct search methods are also typically referred to as a “pattern search” as they may navigate the search space using geometric shapes or decisions, e.g. patterns.

Gradient information is approximated directly (hence the name) from the result of the objective function comparing the relative difference between scores for points in the search space. These direct estimates are then used to choose a direction to move in the search space and triangulate the region of the optima.

Examples of direct search algorithms include:

- Cyclic Coordinate Search

- Powell’s Method

- Hooke-Jeeves Method

- Nelder-Mead Simplex Search

Stochastic Algorithms

Stochastic optimization algorithms are algorithms that make use of randomness in the search procedure for objective functions for which derivatives cannot be calculated.

Unlike the deterministic direct search methods, stochastic algorithms typically involve a lot more sampling of the objective function, but are able to handle problems with deceptive local optima.

Stochastic optimization algorithms include:

- Simulated Annealing

- Evolution Strategy

- Cross-Entropy Method

Population Algorithms

Population optimization algorithms are stochastic optimization algorithms that maintain a pool (a population) of candidate solutions that together are used to sample, explore, and hone in on an optima.

Algorithms of this type are intended for more challenging objective problems that may have noisy function evaluations and many global optima (multimodal), and finding a good or good enough solution is challenging or infeasible using other methods.

The pool of candidate solutions adds robustness to the search, increasing the likelihood of overcoming local optima.

Examples of population optimization algorithms include:

- Genetic Algorithm

- Differential Evolution

- Particle Swarm Optimization

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Algorithms for Optimization, 2019.

- Essentials of Metaheuristics, 2011.

- Computational Intelligence: An Introduction, 2007.

- Introduction to Stochastic Search and Optimization, 2003.

Articles

Summary

In this tutorial, you discovered a guided tour of different optimization algorithms.

Specifically, you learned:

- Optimization algorithms may be grouped into those that use derivatives and those that do not.

- Classical algorithms use the first and sometimes second derivative of the objective function.

- Direct search and stochastic algorithms are designed for objective functions where function derivatives are unavailable.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Why just using Adam is not an option? How often do you really need to choose a specific optimizer?

Great question!

Adam is great for training a neural net, terrible for other optimization problems where we have more information or where the shape of the response surface is simpler.

It is critical to use the right optimization algorithm for your objective function – and we are not just talking about fitting neural nets, but more general – all types of optimization problems.

https://stackoverflow.com/questions/68072013/custom-loss-function-not-differentiable

Can anyone answer this question?

Excellent information.

Sir my question is about which optimization algorithm is more suitable to optimize portfolio of stock Market

I don’t know about finance, sorry. And I don’t believe the stock market is predictable:

https://machinelearningmastery.com/faq/single-faq/can-you-help-me-with-machine-learning-for-finance-or-the-stock-market

Perhaps formate your objective function and perhaps start with a stochastic optimization algorithm.

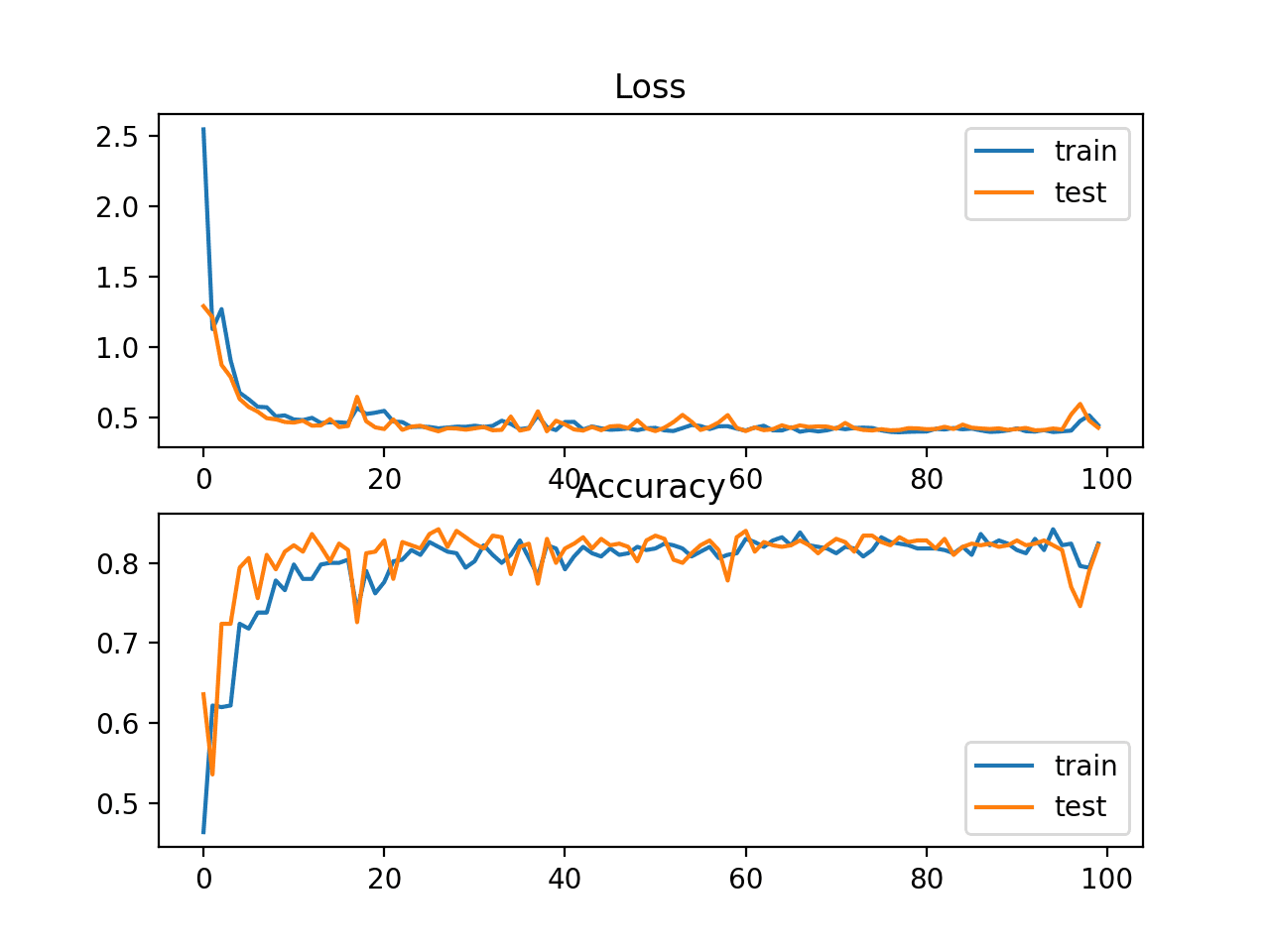

I am using transfer learning from my own trained language model to another classification LSTM model. The SGD optimizer served well in the language model but I am having hard time in the RNN classification model to converge with different optimizers and learning rates with them, how do you suggest approaching such complex learning task?

Thank you for the article!

Good question, I recommend the tutorials here to diagnoise issues with the learning dynamics of your model and techniques to try:

https://machinelearningmastery.com/start-here/#better

I read this tutorial and ended up with list of algorithm names and no clue about pro and contra of using them, their complexity. I is just fake.

Knowing how an algorithm works will not help you choose what works best for an objective function.

Knowing it’s complexity won’t help either.

Not sure how it’s fake exactly – it’s an overview. Perhaps the resources in the further reading section will help go find what you’re looking for.

Can you please run the algorithm in filled funtion method for global optimaization code in Python?

Hi Nashwa…Please let us know what your findings are from your suggestion!

Not fake, but less details u may say

Summarised course on Optim Algo in one step,.. for details

Read books

Agreed.

I have tutorials on each algorithm written and scheduled, they’ll appear on the blog over coming weeks.

Hello.

Can you please run the algorithm Differential Evolution code in Python?

Like code feature importance score?

Yes, I have a few tutorials on differential evolution written and scheduled to appear on the blog soon.

And a Jacobian Matrix too, since you defined a Hessian Matrix. I was just wondering.

Sure:

https://en.wikipedia.org/wiki/Jacobian_matrix_and_determinant

Sir, I want to make a project on “Multiobject Optimization Problem in Agricultural production”. So which optimization algorithm will be best for my work?

Perhaps you can test a suite of algorithms and discover what works well or best for your specific problem.

Very useful overview

Thank u

You’re welcome.

I wrote a package called HumpDay that provides Elo ratings for sixty optimization strategies, drawn from all the popular python optimization packages.

https://www.microprediction.com/blog/humpday

Well done!

Thanks for sharing.

Hi Jason,

I have some numeric features and a numeric output known as cost of the operation for each record in my dataset. How can I minimize the cost here?

What do you assume with the data? If we assume there is a function (e.g. linear) between feature and output, you can first build the function and then minimize it. If there is some randomness, may be you need some preprocessing to the feature first.

Tnx Adrian, The problem is how to make that objective function which no idea linear or not. All we have some examples of features affecting the cost (y) and need to minimize the y by optimizing the features coefficients.

I thought to somehow using the fitted model as objective function and optimize the model to minimize the y.

Yes, you need to assume something here before you optimize. In the extreme case, if I assume your feature and the output are totally unrelated, then you have nothing to optimize.

Features are are correlated with the output and no dependency between pair features. The question is how can we minimize output y where we don’t have the objective function and constrains and hopefully by optimizing the input features alone!

I have a question, optimization will be one of my tasks after I simulate an industrial process,

so I have the operational parameters (decision) and the final yield (objective), (I can generate data for the different scenarios of the decision to get data for the objectives, by trial and error for traning a model).

Then I would like to build a machine learning model to use it to optimize/decide the best operational parameters.

I will use metaheuristic models, but I have a difficulty to know how to decide which algorithm?… I hope you can give me some details and advises.

Hi Nigro…The following may be of some help to you:

https://machinelearningmastery.com/combined-algorithm-selection-and-hyperparameter-optimization/

Dear James,

Thanks alot for your recommendation, I am totally beginner tho, so if I understood correct that means, the optimization alogirthm basically comes later after building a supervised machine learning model using e.g., polynomial regression. After that, the optimization algorithm is coupled. Frankly speaking, I feel I am missing the point here 🙂

For example for the optimization, I will use GA (Genetic alogirthm), but this is only to optimize, while for the the supervised machine learning, I would need a suitable fit for the input data (variables/decisions – Operational parameters), which means I have to select among the supervised ML tools, like Linear regression, logistic, polynomial, etc.

My question is now, what are the right steps to do that?

I really appreciate your help and your effort guys.

Hi Nigro…Please clarify any specific questions so that I may better assist you.

1- How optimization alogirthm can be coupled with machine learning models? (yet every one of them has it is own alogirthms)

2- Let’s say, GA is specified for the optimization, which one comes first building a ML model or implimenting GA? If so, how to choose a ML algorithm suitable for the multiobjective optimization GA.

3- What would be the logical steps to design an ML model capable of optimizing multi objectives?

Thanks alot

Niro

Hi Nigogo…the following would be helpful:

https://machinelearningmastery.com/optimization-for-machine-learning-crash-course/

Very much informative article. Can you suggest me which kind of algorithm should be used for a convex optimization problem along with constraints?

Hi Jack…You may want to investigate Bayesian Optimization for this problem type.

https://machinelearningmastery.com/what-is-bayesian-optimization/

Hi

I want to use hybrid optimization for the CNN model. Can you suggest any hybrid optimization method that work well. I have read many articles with hybrid optimization. But I don’t know how to choose two optimization method for hybrid. Any criteria for hybrid?

Hi Jeba…The following paper is a great introduction to this topic. It also provides some excellent resources to continue your research.

https://arxiv.org/ftp/arxiv/papers/2203/2203.00869.pdf

Amazing work. Now, for a little bit of context before I pose my question, if I may: I am a chemist working with formulation prototypes, calibrations of laboratory instruments and validation of analytical procedures, all this in R&D and SQC industrial environments. As you can guess, these products and the measuring devices with which we check their quality, need a lot of fine tuning. The field that takes care of these tasks goes by the name of Chemometrics, which as I am sure you know, is what gave birth to the Partial Least Squares regression methodology, way back. That’s where I am coming from. And so, we formulation-design chemists are obsessed with reaching and discovering specifications for the highest quality in the least amount time, the least effort and the least resources. In one word, efficiency. That’s our culture and the base of the whole business. But for our taste, Design of Experiments and Response Surface Methodologies are too limited, because of the statistical independence assumption of the data. In reality, chemicals mixed in a reactor are intertwined with each other by nature. You can up to 20 ingredients and at least 10 specification parameters. And mathematically that translates into super high multicollinearity which renders classical Response Surface Methods, useless. The regression models that DoE and RSM produce are not to be trusted. Hence PLS comes to the rescue. Problem is, once we get the model, the discovery of optimal specifications, i.e., the function’s maxima and minima, depends on “black box” software providers. And it’s great. Until it’s not. So here’s my question to you: I’d very much want to learn and explore, at least a little, how to explore the options and test and compare and select among those algorithms that best describe the specifications of our products and basically, see what each has to show. I work with R because it’s so easy for me and I’m so comfortable. But it now seems like I’ll need to switch to Python, if I have to. And it has to be with PLS. (ANNs are not an option because our industry frowns upon it.) So what do you think? In your opinion, which algorithm and even better, what libraries would be appropriate? Great work! Thank you for your time.

Hi Victor…Thank you for your feedback! The following location is a great starting for your machine learning journey with our content.

https://machinelearningmastery.com/start-here/