The promise of deep learning in the field of computer vision is better performance by models that may require more data but less digital signal processing expertise to train and operate.

There is a lot of hype and many large claims around deep learning methods, but beyond the hype, deep learning methods are achieving state-of-the-art results on challenging problems, notably on computer vision tasks such as image classification, object recognition, and face detection.

In this post, you will discover the specific promises that deep learning methods have for tackling computer vision problems.

After reading this post, you will know:

- The promises of deep learning for computer vision.

- Examples of where deep learning has delivered or is delivering on its promises.

- Key deep learning methods and applications for computer vision.

Let’s get started.

A Gentle Introduction to the Promise of Deep Learning for Computer Vision

Photo by osamu okamoto, some rights reserved.

Overview

This tutorial is divided into three parts; they are:

- Promises of Deep Learning

- Types of Deep Learning Network Models

- Types of Computer Vision Problems

Promises of Deep Learning

Deep learning methods are popular, primarily because they are delivering on their promise.

That is not to say that there is no hype around the technology, but that the hype is based on very real results that are being demonstrated across a suite of very challenging artificial intelligence problems from computer vision and natural language processing.

Some of the first large demonstrations of the power of deep learning were in computer vision, specifically image recognition, and more recently in object detection and face recognition.

In this post, we will look at five specific promises of deep learning methods in the field of computer vision.

In summary, they are:

- The Promise of Automatic Feature Extraction. Features can be automatically learned and extracted from raw image data.

- The Promise of End-to-End Models. Single end-to-end models can replace pipelines of specialized models.

- The Promise of Model Reuse. Learned features and even entire models can be reused across tasks.

- The Promise of Superior Performance. Techniques demonstrate better skill on challenging tasks.

- The Promise of General Method. A single general method can be used on a range of related tasks.

We will now take a closer look at each.

There are other promises of deep learning for computer vision; these were just the five that I chose to highlight.

What do you think the promise of deep learning is for computer vision?

Let me know in the comments below.

Promise 1: Automatic Feature Extraction

A major focus of study in the field of computer vision is on techniques to detect and extract features from digital images.

Extracted features provide the context for inference about an image, and often the richer the features, the better the inference.

Sophisticated hand-designed features such as scale-invariant feature transform (SIFT), Gabor filters, and histogram of oriented gradients (HOG) have been the focus of computer vision for feature extraction for some time, and have seen good success.

The promise of deep learning is that complex and useful features can be automatically learned directly from large image datasets. More specifically, that a deep hierarchy of rich features can be learned and automatically extracted from images, provided by the multiple deep layers of neural network models.

They have deeper architectures with the capacity to learn more complex features than the shallow ones. Also the expressivity and robust training algorithms allow to learn informative object representations without the need to design features manually.

— Object Detection with Deep Learning: A Review, 2018.
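To make the contrast concrete, the sketch below applies a fixed, hand-designed kernel (a Sobel filter that responds to vertical edges) to a tiny grayscale image using plain Python lists. The helper name `convolve2d` and the toy image are assumptions made for illustration. A convolutional layer performs the same sliding-window operation, except that its kernel values are learned from data rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (the operation deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Hand-designed Sobel kernel: its values are fixed by the designer, not learned.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy 4x6 image (an assumption): dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

feature_map = convolve2d(image, SOBEL_X)
for row in feature_map:
    print(row)
```

The feature map is non-zero only where the window straddles the dark-to-bright boundary, which is exactly the kind of response a learned first-layer filter often ends up producing on its own.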

Deep neural network models are delivering on this promise. This is most notably demonstrated by the transition away from sophisticated hand-crafted feature detection methods such as SIFT toward deep convolutional neural networks on standard computer vision benchmark datasets and competitions, such as the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

ILSVRC over the past five years has paved the way for several breakthroughs in computer vision. The field of categorical object recognition has dramatically evolved […] starting from coded SIFT features and evolving to large-scale convolutional neural networks dominating at all three tasks of image classification, single-object localization, and object detection.

— ImageNet Large Scale Visual Recognition Challenge, 2015.

Promise 2: End-to-End Models

Addressing computer vision tasks traditionally involved using a system of modular models.

Each model was designed for a specific task, such as feature extraction, image alignment, or classification. The models are used in a pipeline with a raw image at one end and an outcome, such as a prediction, at the other end.

This pipeline approach can be, and still is, used with deep learning models, where a feature detector model is replaced with a deep neural network.

Alternatively, deep neural networks allow a single model to subsume two or more traditional models, such as feature extraction and classification. It is common to use a single model trained directly on raw pixel values for image classification, and there has been a trend toward replacing pipelines that include a deep neural network model with a single model trained end-to-end.

With the availability of so much training data (along with an efficient algorithmic implementation and GPU computing resources) it became possible to learn neural networks directly from the image data, without needing to create multi-stage hand-tuned pipelines of extracted features and discriminative classifiers.

— ImageNet Large Scale Visual Recognition Challenge, 2015.

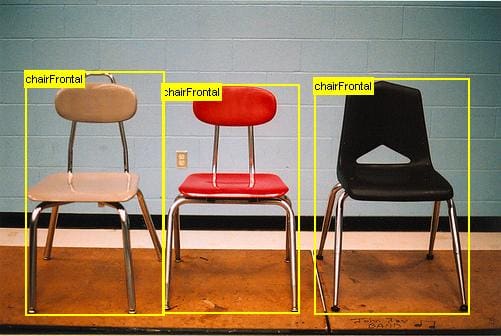

A good example of this is in object detection and face recognition. Initially, superior performance was achieved using a deep convolutional neural network for feature extraction only. More recently, end-to-end models are trained directly using multiple-output models (e.g. class and bounding boxes) and/or new loss functions (e.g. contrastive or triplet loss functions).
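As one illustration of such a loss function, the sketch below computes a triplet loss over embedding vectors using the commonly cited form max(0, d(a, p) - d(a, n) + margin) with squared Euclidean distance. The function names, the toy two-dimensional embeddings, and the margin value are assumptions for illustration and are not taken from any specific face recognition system.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Loss is zero once the negative is farther from the anchor
    than the positive by at least the margin."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)

# Toy embeddings (assumed values): two photos of one person and one of another.
anchor = [0.0, 1.0]
same_person = [0.0, 0.5]    # squared distance to anchor: 0.25
other_person = [1.0, 1.0]   # squared distance to anchor: 1.0

# Well-separated triplet: no penalty.
easy = triplet_loss(anchor, same_person, other_person)
# Swapped roles: the "positive" is farther than the "negative", so the loss is large.
hard = triplet_loss(anchor, other_person, same_person)
print(easy, hard)
```

Minimizing this quantity over many triplets pushes embeddings of the same identity together and embeddings of different identities apart, which is what allows the whole network to be trained end-to-end for recognition.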

Promise 3: Model Reuse

Typically, the feature detectors prepared for a dataset are highly specific to that dataset.

This makes sense, as the more domain information that you can use in the model, the better the model is likely to perform in the domain.

Deep neural networks are typically trained on datasets that are much larger than traditional datasets, e.g. millions or billions of images. This allows the models to learn features and hierarchies of features that are general across photographs, which is itself remarkable.

If this original dataset is large enough and general enough, then the spatial hierarchy of features learned by the pretrained network can effectively act as a generic model of the visual world, and hence its features can prove useful for many different computer vision problems, even though these new problems may involve completely different classes than those of the original task.

— Page 143, Deep Learning with Python, 2017.

For example, it is common to use deep models trained on the large ImageNet dataset, or a subset of this dataset, directly or as a starting point on a range of computer vision tasks.

… it is common to use the features from a convolutional network trained on ImageNet to solve other computer vision tasks

— Page 426, Deep Learning, 2016.

This is called transfer learning, and the use of pretrained models that can take days and sometimes weeks to train has become standard practice.

The pretrained models can be used to extract useful general features from digital images and can also be fine-tuned, tailored to the specifics of the new task. This can save a lot of time and resources and produce very good results almost immediately.

A common and highly effective approach to deep learning on small image datasets is to use a pretrained network.

— Page 143, Deep Learning with Python, 2017.
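The feature-extraction form of transfer learning can be sketched in miniature with plain Python. In the toy example below, `pretrained_features` is a hypothetical stand-in for a frozen pretrained convolutional base (in practice this would be a network such as one trained on ImageNet), and only a small logistic-regression "head" is trained on a new four-image dataset. All names, data, and hyperparameters are assumptions chosen for illustration.

```python
import math

def pretrained_features(image):
    """Hypothetical stand-in for a frozen pretrained base: its behavior is
    fixed and never updated while the new head is trained."""
    left = (image[0] + image[1]) / 2    # mean brightness of the left half
    right = (image[2] + image[3]) / 2   # mean brightness of the right half
    return [left, right]

def predict(weights, bias, features):
    """Logistic-regression head: the only trainable part of the model."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 / (1 + math.exp(-z))

# Small new dataset (assumed): 4-pixel "images", label 1 if the left half is brighter.
data = [
    ([0.9, 0.8, 0.1, 0.2], 1),
    ([0.7, 0.9, 0.3, 0.1], 1),
    ([0.2, 0.1, 0.8, 0.9], 0),
    ([0.1, 0.3, 0.9, 0.7], 0),
]

weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.5
for epoch in range(200):
    for image, label in data:
        features = pretrained_features(image)   # the frozen base is reused as-is
        error = predict(weights, bias, features) - label
        # Gradient step on the head's parameters only.
        weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
        bias -= learning_rate * error

predictions = [round(predict(weights, bias, pretrained_features(img))) for img, _ in data]
print(predictions)
```

Fine-tuning differs from this sketch in that some or all of the pretrained layers would also be updated, typically with a small learning rate, after the new head has been trained.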

Promise 4: Superior Performance

An important promise of deep neural networks in computer vision is better performance.

It is the dramatically better performance of deep neural networks that has been a catalyst for the growth of, and interest in, the field of deep learning. Although the techniques have been around for decades, the spark was the outstanding performance by Alex Krizhevsky, et al. in 2012 on image classification.

The current intensity of commercial interest in deep learning began when Krizhevsky et al. (2012) won the ImageNet object recognition challenge …

— Page 371, Deep Learning, 2016.

Their deep convolutional neural network model, at the time called SuperVision, and later referred to as AlexNet, resulted in a leap in classification accuracy.

We also entered a variant of this model in the ILSVRC-2012 competition and achieved a winning top-5 test error rate of 15.3%, compared to 26.2% achieved by the second-best entry.

— ImageNet Classification with Deep Convolutional Neural Networks, 2012.
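For readers unfamiliar with the metric in the quote, a top-5 error rate counts a prediction as wrong only when the true label is missing from the model's five highest-scoring classes. The sketch below computes a top-k error on made-up scores; the function name `top_k_error` and the toy data (six classes, with k=2 for brevity) are assumptions for illustration.

```python
def top_k_error(scores_per_image, true_labels, k=5):
    """Fraction of images whose true label is not among the k highest-scoring classes."""
    misses = 0
    for scores, label in zip(scores_per_image, true_labels):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(true_labels)

# Made-up scores for 4 images over 6 classes.
scores = [
    [0.10, 0.60, 0.20, 0.05, 0.03, 0.02],
    [0.70, 0.05, 0.10, 0.08, 0.04, 0.03],
    [0.30, 0.25, 0.20, 0.15, 0.06, 0.04],
    [0.02, 0.03, 0.05, 0.10, 0.20, 0.60],
]
labels = [2, 0, 3, 5]

print(top_k_error(scores, labels, k=1))  # top-1 error
print(top_k_error(scores, labels, k=2))  # top-2 error
```

The top-k error can never exceed the top-1 error, which is why results on a 1,000-class problem such as ILSVRC are often reported with both.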

The technique was then adopted for a range of very challenging computer vision tasks, including object detection, which also saw a large leap in model performance over the then state-of-the-art traditional methods.

The first breakthrough in object detection was the RCNN which resulted in an improvement of nearly 30% over the previous state of the art.

— A Survey of Modern Object Detection Literature using Deep Learning, 2018.

This trend of improvement has continued year-over-year on a range of computer vision tasks.

Performance gains have been so dramatic that tasks previously thought not easily addressable by computers and used as CAPTCHAs to prevent spam (such as predicting whether a photo is of a dog or cat) are effectively “solved,” and models on problems such as face recognition achieve better-than-human performance.

We can observe significant performance (mean average precision) improvement since deep learning entered the scene in 2012. The performance of the best detector has been steadily increasing by a significant amount on a yearly basis.

— Deep Learning for Generic Object Detection: A Survey, 2018.

Promise 5: General Method

Perhaps the most important promise of deep learning is that the top-performing models are all developed from the same basic components.

The impressive results have come from one type of network, called the convolutional neural network, composed of convolutional and pooling layers. It was specifically designed for image data and can be trained on pixel data directly (with some minor scaling).

Convolutional networks provide a way to specialize neural networks to work with data that has a clear grid-structured topology and to scale such models to very large size. This approach has been the most successful on a two-dimensional, image topology.

— Page 372, Deep Learning, 2016.
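Two of the ingredients mentioned above, pixel scaling and pooling, are simple enough to sketch in a few lines of plain Python. The function names and the 4x4 toy image are assumptions for illustration; deep learning libraries provide equivalent, much faster operations.

```python
def scale_pixels(image, max_value=255.0):
    """Rescale integer pixel values from [0, max_value] to [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; assumes height and width are divisible by size."""
    pooled = []
    for i in range(0, len(feature_map), size):
        row = []
        for j in range(0, len(feature_map[0]), size):
            # Keep only the strongest response in each size x size window.
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

# Toy 4x4 grayscale image with 8-bit pixel values (assumed data).
raw = [
    [0, 51, 102, 153],
    [51, 255, 0, 204],
    [102, 0, 153, 51],
    [0, 204, 51, 0],
]

scaled = scale_pixels(raw)
pooled = max_pool2d(scaled)
for row in pooled:
    print(row)
```

Pooling halves each spatial dimension here, so deeper layers see a coarser, more position-tolerant summary of the features detected earlier in the network.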

This differs from the broader field of computer vision, which has traditionally required specialized feature detection methods developed for handwriting recognition, character recognition, face recognition, object detection, and so on. Instead, a single general class of model can be configured and used across each computer vision task directly.

This is the promise of machine learning in general; it is impressive that such a versatile technique has been discovered and demonstrated for computer vision.

Further, the model is relatively straightforward to understand and to train, although it may require modern GPU hardware to train efficiently on a large dataset, and may require model hyperparameter tuning to achieve bleeding-edge performance.

Types of Deep Learning Network Models

Deep Learning is a large field of study, and not all of it is relevant to computer vision.

It is easy to get bogged down in specific optimization methods or extensions to model types intended to lift performance.

From a high level, there is one method from deep learning that deserves the most attention for application in computer vision. It is:

- Convolutional Neural Networks (CNNs).

The reason that CNNs are the focus of attention for deep learning models is that they were specifically designed for image data.

Additionally, both of the following network types may be useful for interpreting or developing inference models from the features learned and extracted by CNNs; they are:

- Multilayer Perceptrons (MLPs).

- Recurrent Neural Networks (RNNs).

MLPs, or fully connected neural network layers, are useful for developing models that make predictions given the learned features extracted by CNNs. RNNs, such as LSTMs, may be helpful when working with sequences of images over time, such as with video.

Types of Computer Vision Problems

Deep learning will not solve computer vision or artificial intelligence.

To date, deep learning methods have been evaluated on a broad suite of problems from computer vision and have achieved success on a small set, where success means performance or capability at or above what was previously possible with other methods.

Importantly, those areas where deep learning methods are showing the greatest success are some of the more end-user facing, challenging, and perhaps more interesting problems.

Five examples include:

- Optical Character Recognition.

- Image Classification.

- Object Detection.

- Face Detection.

- Face Recognition.

All five tasks are related under the umbrella of “object recognition,” which refers to tasks that involve identifying, localizing, and/or extracting specific content from digital photographs.

Most deep learning for computer vision is used for object recognition or detection of some form, whether this means reporting which object is present in an image, annotating an image with bounding boxes around each object, transcribing a sequence of symbols from an image, or labeling each pixel in an image with the identity of the object it belongs to.

— Page 453, Deep Learning, 2016.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Deep Learning, 2016.

- Deep Learning with Python, 2017.

Papers

- ImageNet Large Scale Visual Recognition Challenge, 2015.

- ImageNet Classification with Deep Convolutional Neural Networks, 2012.

- Object Detection with Deep Learning: A Review, 2018.

- A Survey of Modern Object Detection Literature using Deep Learning, 2018.

- Deep Learning for Generic Object Detection: A Survey, 2018.

Summary

In this post, you discovered the specific promises that deep learning methods have for tackling computer vision problems.

Specifically, you learned:

- The promises of deep learning for computer vision.

- Examples of where deep learning has delivered or is delivering on its promises.

- Key deep learning methods and applications for computer vision.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Do you see a time when image classification will be done on the capture device, for example the camera, and encoded as additional META type data (as in the Exif header)? It seems to me this would be an ideal time to bring that discussion to the foreground.

Rather than worrying about deep learning algorithms and additional training requirements, and discovering this later through carefully modeled ML/DL, perhaps the lion's share of this could be done when the image is actually captured.

What are your thoughts on that?

Yes, cameras like the new Google Pixel phone are mostly software already.

The problem does not feel easier at capture time – if that is what you mean.

sir i need a help from you. i need source code for detecting diabetic retinopathy using convolutional neural networks.

Sounds like a great project.

What problem are you having specifically?

Hello Jason, first of all, I am new to deep learning, and I have to say you present the topic very clearly. I am currently trying to solve the problem of removing ring artifacts from CT images, and I intend to do so using a deep learning method. You mentioned the types of DL models and that CNNs are for images. May I know where U-Net falls? Is it a type of CNN?

will appreciate thanks

That sounds like a great problem.

U-Net is a model architecture.

A U-Net is used for image generation/synthesis. Typically as part of an image translation type model.

Thanks, prof, for this great info. I am a Master's degree student interested in sign language recognition. Could I use a CNN model for both hand recognition and facial expression to give more accurate results and get the most important features for every sign? I need a recommendation for a CNN model.

thanks

Perhaps you can find a model already trained for this purpose and reuse it or retrain it?

Otherwise perhaps start with a vgg model or similar and use transfer learning to adapt it for your new dataset.

Dear prof.jason,

Thanks for your useful introduction. I'm a PhD student, and I intend to work on microwave imaging, including imaging the head to detect and classify brain stroke and determine its position. The plan is to use artificial neural networks and deep learning on the backscattered signals from a human model to find the above information about the stroke. My question is: how can we do that?

Thanks

Hi Ammar…While we cannot speak to a specific methodology for your project, we can help address questions regarding our content. I would recommend that you clearly establish the goals of your models…classification, regression, time-series forecasting, etc…

Once your goals are established, you can establish a suitable path here to improve your skills:

https://machinelearningmastery.com/start-here/